Future-Proofing AI Governance with an AI Management System (AIMS)

With the rapid adoption of Artificial Intelligence (AI) across industries, organisations face mounting challenges in governing AI ethics, security, risk, and compliance. AI models process large volumes of sensitive data, make automated decisions, and influence human outcomes, necessitating a structured AI Management System (AIMS).

Achieving ISO 42001 certification demonstrates that your organisation has a robust governance framework to manage AI risks, regulatory compliance, transparency, fairness, and security.

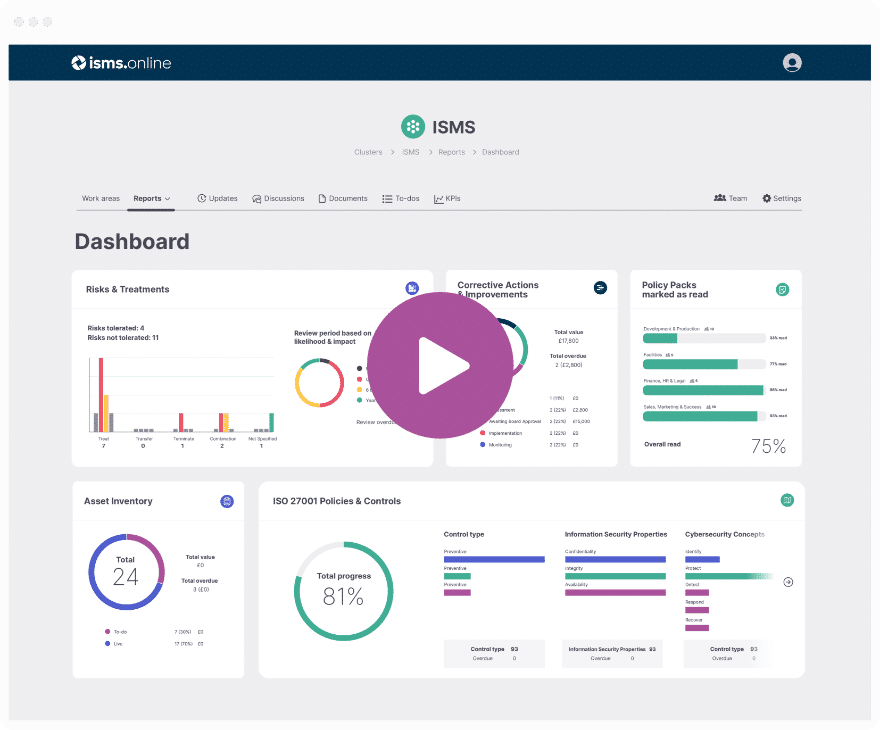


What is ISO 42001?

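ISO 42001:2023 is the first international management system standard dedicated to AI, providing a systematic approach to AI governance. It is designed to integrate with other management system standards such as ISO 27001 (Information Security) and ISO 27701 (Privacy), and it supports compliance with regulations and frameworks such as GDPR, the EU AI Act, and the NIST AI Risk Management Framework (RMF).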

By implementing ISO 42001, your organisation will:

✅ Support compliance with global AI regulations

✅ Mitigate AI-related risks (bias, security, adversarial threats)

✅ Establish AI transparency and accountability

✅ Improve AI decision explainability & model fairness

✅ Enhance resilience against AI system failures and legal issues

What’s Covered in This Guide?

Despite the benefits, ISO 42001 implementation is a complex, resource-intensive process. This guide will break down each step, addressing AI risk management, governance, compliance, and audits.

Defining the Scope of Your AI Management System (AIMS)

Why Defining Your AIMS Scope Matters

Establishing a clear and well-defined scope is the foundation of an effective AI Management System (AIMS) under ISO 42001:2023. It ensures that your AI models, data sources, decision-making processes, and regulatory obligations are properly governed. Without a clearly documented scope, AI governance efforts can become disorganised, non-compliant, and vulnerable to ethical, legal, and security risks.

By properly defining the AIMS scope, organisations can:

✅ Determine which AI models, applications, and data processes require governance.

✅ Align AI governance with business goals, regulatory requirements, and stakeholder expectations.

✅ Ensure auditors and compliance bodies have a clear understanding of AI governance boundaries.

✅ Mitigate AI-specific risks such as bias, adversarial attacks, privacy violations, and decision opacity.

Implementing ISO 42001 isn't just about compliance; it's a survival manual for AI in a world demanding accountability.

- Chris Newton-Smith, ISMS.Online CEO

1. Establishing the Scope of AIMS (Aligned with ISO 42001 Clauses 4.1 – 4.4)

📌 ISO 42001 Clause 4.1 – Understanding the Organisation and Its Context

Before defining the AIMS scope, organisations must assess both internal and external factors that influence AI governance:

- Internal Factors:

  - The organisation’s AI strategy, objectives, and risk appetite.

  - AI data sources, development frameworks, and deployment environments.

  - Cross-functional stakeholders (AI engineers, compliance officers, data privacy teams, risk managers).

- External Factors:

  - Regulatory environment (GDPR, EU AI Act, NIST AI RMF, industry-specific AI policies).

  - Customer expectations regarding AI fairness, transparency, and security.

  - Third-party AI vendors, cloud AI services, and API integrations.

📌 ISO 42001 Clause 4.2 – Understanding the Needs and Expectations of Interested Parties

Identify all stakeholders impacted by AI governance:

✅ Internal: AI teams, IT security, compliance, legal teams, executives.

✅ External: Customers, regulators, investors, industry watchdogs, auditors.

✅ Third-Party Vendors: Cloud AI providers, API-based AI services, outsourced AI models.

📌 ISO 42001 Clause 4.3 – Determining the Scope of AIMS

To define the scope of AIMS, organisations must:

✅ Identify which AI applications and systems require governance.

✅ Specify AI lifecycle stages covered (development, deployment, monitoring, retirement).

✅ Document interfaces and dependencies (third-party AI tools, external data sources).

✅ Define geographical and regulatory boundaries (AI systems deployed across different jurisdictions).

📌 ISO 42001 Clause 4.4 – AIMS and Its Interactions with Other Systems

✅ Map how AIMS interacts with existing Information Security (ISO 27001) and Privacy Management (ISO 27701) frameworks.

✅ Identify dependencies with IT governance, risk management, and business continuity planning.

2. Key Considerations When Defining Your AIMS Scope

a) AI Models & Decision-Making Processes in Scope

🔹 AI-driven business functions (finance, healthcare, HR, customer support).

🔹 AI decision-making models (risk assessment, credit scoring, automated hiring).

🔹 AI systems using personal or biometric data (facial recognition, voice authentication).

b) AI Lifecycle Coverage

🔹 AI model development & training – Ensuring fairness and non-discriminatory training datasets.

🔹 AI deployment & operations – Securing AI models from adversarial attacks.

🔹 AI monitoring & continuous assessment – Tracking AI drift, bias evolution, and performance.

🔹 AI retirement & decommissioning – Ensuring proper disposal of outdated AI models.

c) Regulatory & Compliance Requirements

🔹 GDPR (AI handling personal data).

🔹 EU AI Act (high-risk AI systems must meet transparency, human-oversight, and documentation requirements).

🔹 NIST AI Risk Management Framework (Mitigating AI risks systematically).


3. Documenting Your AIMS Scope for Compliance & Audits

📌 What Must Be Included in Your Scope Document?

AIMS scope documentation should contain:

✅ Scope Statement: Clearly define which AI systems, processes, and decisions are included/excluded.

✅ AI Regulatory Mapping: List relevant laws, frameworks, and industry-specific compliance obligations.

✅ AI Governance Interfaces: Outline how AIMS interacts with IT security, legal, compliance, and ethics teams.

✅ Stakeholder Involvement: Specify the roles and responsibilities of AI governance stakeholders.

📄 Sample AIMS Scope Statement

📍 Company Name: AI Innovations Corp

📍 Scope of AIMS:

“The AI Management System (AIMS) of AI Innovations Corp applies to all AI-driven decision-making models utilised in customer service automation, credit risk assessment, and medical diagnostics within the organisation. The AIMS scope includes the development, deployment, monitoring, and ethical oversight of AI systems, ensuring compliance with ISO 42001, GDPR, and the EU AI Act. AI models sourced from third-party vendors undergo periodic compliance and security assessments, while internal AI systems are governed under strict risk management protocols to prevent bias, security vulnerabilities, and regulatory breaches. AI models used solely for internal data analytics that do not impact external decision-making are excluded from this AIMS scope.”

4. Managing Exclusions from AIMS Scope

Just like ISO 27001, ISO 42001 allows certain AI models, datasets, or decision-making systems to be excluded, provided the exclusions are justified and documented.

✅ Acceptable AIMS Exclusions

✅ AI models used exclusively for internal research purposes.

✅ AI prototypes undergoing early-stage testing without deployment.

✅ AI solutions where no personally identifiable or regulated data is used.

⚠️ Risky AIMS Exclusions to Avoid

⚠ Excluding AI models that make significant financial, medical, or legal decisions.

⚠ Omitting high-risk AI applications subject to strict regulations (e.g., biometric authentication, predictive policing).

⚠ Failing to include AI security monitoring for models deployed in production environments.
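The exclusion rules above can be applied as a simple pre-audit check before exclusions are written into the scope document. The sketch below is a minimal, hypothetical example: the `ExclusionCandidate` fields and the `review_exclusion` function are illustrative names, not anything defined by ISO 42001, and a real review still needs human judgement and a documented risk assessment.

```python
from dataclasses import dataclass


@dataclass
class ExclusionCandidate:
    """Hypothetical record for an AI system proposed for exclusion from the AIMS scope."""
    name: str
    justification: str           # documented reason for excluding the system
    significant_decisions: bool  # makes significant financial, medical or legal decisions
    regulated_high_risk: bool    # e.g. biometric authentication, predictive policing
    in_production: bool          # deployed in a production environment


def review_exclusion(candidate: ExclusionCandidate) -> list[str]:
    """Return the reasons an exclusion looks risky; an empty list means no red flags."""
    issues = []
    if not candidate.justification.strip():
        issues.append("no documented justification")
    if candidate.significant_decisions:
        issues.append("makes significant financial, medical or legal decisions")
    if candidate.regulated_high_risk:
        issues.append("high-risk application subject to strict regulation")
    if candidate.in_production:
        issues.append("deployed in production, so security monitoring must stay in scope")
    return issues


research = ExclusionCandidate(
    "churn-research-notebook", "Internal research only; never deployed", False, False, False
)
scoring = ExclusionCandidate("credit-scoring-v2", "", True, True, True)

print(review_exclusion(research))  # []
for issue in review_exclusion(scoring):
    print(f"{scoring.name}: {issue}")
```

An internal research notebook passes cleanly, while a production credit-scoring model with no written justification trips every rule, which is exactly the kind of exclusion an auditor would challenge.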

5. Final Checklist for Defining AIMS Scope (ISO 42001)

✅ Identify AI models, decisions, and data sources that require governance.

✅ Map AIMS to business objectives and regulatory mandates.

✅ Document internal and external factors influencing AI governance.

✅ List all regulatory requirements affecting AI governance.

✅ Ensure cross-functional teams are involved in scope definition.

✅ Prepare an auditor-ready document detailing AIMS scope, exclusions, and justifications.

Why a Well-Defined AIMS Scope Matters

Defining a clear, well-structured AIMS scope ensures:

✅ Comprehensive AI governance coverage.

✅ Regulatory and compliance readiness.

✅ Mitigation of AI security, fairness, and ethical risks.

✅ Audit-friendly documentation for ISO 42001 certification.

Defining the Organisational Context of AIMS (AI Management System)

Why Organisational Context Matters in AI Governance

Defining the organisational context of your AI Management System (AIMS) is essential for ensuring effective AI governance, risk management, compliance, and ethical deployment. ISO 42001 requires organisations to identify the internal and external factors, interested parties, dependencies, and interfaces that influence AI decision-making, security, fairness, and transparency.

Properly understanding and documenting your AIMS context ensures that AI systems align with business objectives, stakeholder expectations, and regulatory requirements.

1. Understanding Internal and External Issues in AI Governance (ISO 42001 Clause 4.1)

📌 ISO 42001 Clause 4.1 requires organisations to consider both internal and external factors that impact the governance and security of AI models, systems, and decision-making processes.

🔹 Internal Issues (Factors Under Direct Control)

Internal factors shape how AI governance and risk management are implemented within an organisation. These include:

- AI Governance & Ethics Policies – Internal AI compliance, bias mitigation frameworks, explainability requirements.

- Organisational Structure – AI risk management roles, responsibilities of AI governance teams, and leadership accountability.

- AI Model Capabilities & Security – AI robustness, adversarial resistance, explainability mechanisms.

- Data Governance & Management – Quality, lineage, and ethical sourcing of training data.

- AI System Lifecycle Controls – Policies governing AI development, deployment, monitoring, and decommissioning.

- Internal AI Stakeholders – AI engineers, compliance officers, data privacy teams, risk managers, legal advisors.

🔹 External Issues (Factors Outside Direct Control)

External factors influence AI governance, compliance risks, and legal responsibilities but are not directly controlled by the organisation. These include:

- Regulatory Landscape – Global AI regulations such as the EU AI Act and GDPR, frameworks such as the NIST AI RMF, and industry-specific AI policies.

- Market & Industry Trends – Emerging AI risks, competitive pressures, AI explainability expectations.

- Ethical & Societal Expectations – Public concerns over bias, fairness, and AI-driven discrimination.

- AI Threat Environment – Rise of adversarial attacks, AI-driven fraud, misinformation risks.

- Third-Party Dependencies – External AI providers, API-based AI services, federated learning systems, cloud AI deployments.

🚀 Action: List the internal and external AI-related factors that influence your organisation’s AI Management System (AIMS).

💡 TIP: Consider global, national, and industry-specific AI regulations to ensure comprehensive compliance planning.

2. Identifying and Documenting AI-Related Stakeholders (ISO 42001 Clause 4.2)

📌 ISO 42001 Clause 4.2 requires organisations to define and document all interested parties that interact with or are impacted by AI systems.

AI governance affects a wide range of stakeholders, including internal teams, regulators, customers, and external AI vendors.

🔹 Internal AI Stakeholders

✅ AI Developers & Engineers – Responsible for AI training, testing, and monitoring.

✅ Data Privacy & Security Teams – Ensure AI compliance with GDPR, CCPA, EU AI Act.

✅ Compliance & Risk Officers – Oversee AI risk management and regulatory reporting.

✅ Executive Management – Ensure AI aligns with business strategy and risk appetite.

✅ IT & Infrastructure Teams – Manage AI security and infrastructure dependencies.

🔹 External AI Stakeholders

✅ Regulatory Authorities – EU AI Act enforcement bodies, data protection authorities (GDPR compliance).

✅ Customers & End-Users – Expect explainability, fairness, and security in AI decision-making.

✅ Third-Party AI Vendors – Cloud AI services, external ML model providers, AI API integrations.

✅ AI Ethics & Civil Rights Groups – Monitor AI fairness and potential bias risks.

✅ Investors & Business Partners – Require assurance that AI governance is in place to prevent reputational risks.

🚀 Action: For each stakeholder, document their specific AI-related compliance expectations, risks, and legal obligations.

💡 TIP: AI regulations evolve—regularly update your stakeholder list to reflect changing AI compliance expectations.

AI Regulation isn’t coming. It’s here. The only question is whether you’re ready for it.

- Mike Graham, ISMS.Online VP Partner Ecosystem

3. Mapping AI System Interfaces & Dependencies (ISO 42001 Clause 4.4)

📌 ISO 42001 Clause 4.4 requires organisations to define and document AI system interfaces and dependencies, ensuring that all AI-related interactions, security risks, and compliance gaps are covered.

🔹 Internal AI Interfaces

These represent the points of interaction within an organisation where AI governance, security, and compliance measures must be enforced.

✅ AI Decision-Making Workflows – How AI models integrate into business processes, automated decision-making pipelines.

✅ IT Security & Cybersecurity Teams – AI model security and protection against adversarial attacks.

✅ Data Privacy Teams – Ensuring data protection compliance for AI models handling PII (GDPR, CCPA, ISO 27701).

🔹 External AI Interfaces

External AI interfaces involve third-party services, cloud AI providers, and federated learning systems. These include:

✅ Third-Party AI API Integrations – AI-as-a-Service, cloud-based AI solutions, API-driven AI analytics.

✅ AI Model Supply Chain – Outsourced AI models, AI vendors providing pre-trained models.

✅ Regulatory & Compliance Reporting Systems – Interfaces for submitting AI audits, compliance reports.

🔹 AI System Dependencies

Dependencies represent critical AI resources that organisations must secure and manage for effective governance.

✅ Technological Dependencies: Cloud AI services, AI software platforms, federated learning networks.

✅ Data Dependencies: Datasets sourced from external providers, real-time data pipelines, customer analytics feeds.

✅ Human Resource Dependencies: AI model trainers, ethics review committees, compliance officers.

🚀 Action: List all internal/external AI system interfaces and dependencies to identify security and governance touchpoints.

💡 TIP: Conduct regular dependency audits to ensure that third-party AI integrations comply with security and fairness guidelines.
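Regular dependency audits are easier to schedule when dependencies are kept in a register with a last-review date. The following minimal sketch assumes an annual review interval (`REVIEW_INTERVAL`) and uses hypothetical dependency names; ISO 42001 does not prescribe a review frequency, so set the interval from your own risk criteria.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumption: third-party AI dependencies are re-reviewed at least once a year.
REVIEW_INTERVAL = timedelta(days=365)


@dataclass
class Dependency:
    name: str
    kind: str          # "technological", "data" or "human", as in the list above
    third_party: bool
    last_reviewed: date


def overdue_reviews(deps: list[Dependency], today: date,
                    interval: timedelta = REVIEW_INTERVAL) -> list[str]:
    """Names of third-party dependencies whose last review is older than the interval."""
    return [d.name for d in deps
            if d.third_party and today - d.last_reviewed > interval]


deps = [
    Dependency("cloud-inference-api", "technological", True, date(2024, 1, 15)),
    Dependency("external-credit-bureau-feed", "data", True, date(2024, 11, 1)),
    Dependency("ethics-review-committee", "human", False, date(2023, 6, 1)),
]

print(overdue_reviews(deps, today=date(2025, 3, 1)))  # ['cloud-inference-api']
```

Only third-party items are flagged here; internal dependencies such as the ethics committee would normally be covered by internal audit instead.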

Checklist for Defining AI Organisational Context

📍 ISO 42001 Compliance Areas Covered:

✅ Clause 4.1 – Define internal/external AI governance factors.

✅ Clause 4.2 – Identify and document key AI stakeholders.

✅ Clause 4.3 – Clearly define AIMS scope, including which AI systems are in scope and which are excluded.

✅ Clause 4.4 – Map AI interfaces, dependencies, and third-party risks.

📌 Actionable Steps:

✅ Identify internal & external AI governance factors impacting your organisation.

✅ Document all AI stakeholders and their regulatory, legal, and ethical expectations.

✅ List AI interfaces (internal & external) and dependencies (data, technology, third-party AI providers).

✅ Maintain version-controlled documentation to ensure continuous AIMS compliance.

📌 Defining organisational context is the first critical step in ISO 42001 compliance. Without clear documentation of AI governance factors, stakeholders, and dependencies, organisations risk regulatory non-compliance, AI security vulnerabilities, and reputational harm.

Identifying Relevant AI Assets

To ensure a comprehensive AI Management System (AIMS) under ISO 42001, organisations must identify, categorise, and govern AI-related assets. AI assets include datasets, models, decision systems, regulatory requirements, and third-party integrations.

By classifying AI assets, organisations can identify potential risks, apply the right controls, and ensure compliance with AI governance regulations (ISO 42001 Clauses 4.3 & 8.1).

🔹 AI Asset Categories (ISO 42001 Focused)

📌 Each asset type represents a critical area of AI governance, requiring dedicated security, risk, and compliance controls.

1️⃣ AI Model & Algorithmic Assets

- Machine learning models, deep learning neural networks

- Large language models (LLMs), generative AI models

- AI model parameters, hyperparameter tuning configurations

- Model training logs, versioning history

2️⃣ AI Data & Information Assets

- Training datasets (structured/unstructured, proprietary datasets)

- Real-time data feeds used in AI inference

- Data labelling, feature engineering datasets

- Customer, employee, or vendor-related data processed by AI

3️⃣ AI Infrastructure & Computational Resources

- Cloud-based AI environments (AWS AI, Azure AI, Google Vertex AI)

- On-premise AI servers, GPUs, TPUs, and computational clusters

- AI model deployment pipelines, MLOps frameworks

4️⃣ Software & AI Deployment Systems

- AI-powered enterprise applications (chatbots, automation tools, recommender systems)

- AI APIs and AI-as-a-service (external AI models used via API)

- AI orchestration platforms (Kubernetes for AI, model registries)

5️⃣ Personnel & Human-AI Decision-Making Assets

- AI governance committee, compliance officers, data scientists

- Human-in-the-loop (HITL) AI decision review processes

- AI ethics oversight boards

6️⃣ Third-Party & External AI Dependencies

- AI models sourced from third-party vendors (OpenAI, Google, Amazon, etc.)

- External cloud AI services & federated learning networks

- AI marketplaces, data partnerships, AI-powered SaaS applications

🚀 ACTION:

✅ Make a list of all AI-related assets under governance to facilitate risk management.

✅ Categorise internal vs. third-party AI models to assess security risks, bias, and compliance gaps.

💡 TIP: Consider additional AI-specific categories such as AI ethics policies, adversarial risk mitigation strategies, and compliance-focused AI monitoring tools.
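An AI asset inventory can start as a structured list that records each asset's category (using the six categories above) and whether it is internal or third-party. The sketch below is illustrative only: `AssetCategory`, `AIAsset`, the `owner` field and all asset names are hypothetical, and most organisations would hold this register in a GRC platform or asset database rather than in code.

```python
from dataclasses import dataclass
from enum import Enum


class AssetCategory(Enum):
    """The six AI asset categories listed above."""
    MODEL = "AI model & algorithmic"
    DATA = "AI data & information"
    INFRASTRUCTURE = "AI infrastructure & computational"
    SOFTWARE = "software & AI deployment"
    PERSONNEL = "personnel & human-AI decision-making"
    THIRD_PARTY = "third-party & external AI dependency"


@dataclass
class AIAsset:
    name: str
    category: AssetCategory
    owner: str                 # hypothetical field: team accountable for the asset
    third_party: bool = False  # True for vendor-supplied models, APIs or data


inventory = [
    AIAsset("support-chatbot-llm", AssetCategory.MODEL, "AI engineering", third_party=True),
    AIAsset("claims-training-set-2024", AssetCategory.DATA, "data governance"),
    AIAsset("gpu-cluster-eu", AssetCategory.INFRASTRUCTURE, "IT operations"),
    AIAsset("hitl-claims-review", AssetCategory.PERSONNEL, "compliance"),
    AIAsset("vendor-data-partnership", AssetCategory.THIRD_PARTY, "procurement", third_party=True),
]


def split_by_source(assets: list[AIAsset]) -> tuple[list[str], list[str]]:
    """Separate internal assets from third-party ones for vendor risk assessment."""
    internal = [a.name for a in assets if not a.third_party]
    external = [a.name for a in assets if a.third_party]
    return internal, external


internal, external = split_by_source(inventory)
print("internal:", internal)
print("third-party:", external)
```

Keeping the category and the third-party flag separate lets a vendor-supplied model sit with other model assets while still appearing on the vendor review list.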

Aligning AIMS Scope with Business Objectives (ISO 42001 Clause 5.2 & 6.1)

The AI governance framework must align with business strategy, risk tolerance, and regulatory expectations. AI is increasingly integrated into business operations, making it critical to define how AI risk management supports key business goals.

📌 Define AI Governance Objectives

Before implementing ISO 42001, organisations must establish their primary AI governance objectives:

✅ Ensuring AI compliance with global regulatory frameworks.

✅ Reducing AI-related risks (bias, explainability, adversarial attacks, security vulnerabilities).

✅ Aligning AI models with ethical, legal, and fairness requirements.

✅ Securing AI models from data poisoning, manipulation, or adversarial threats.

✅ Enhancing AI transparency by ensuring explainable, accountable decision-making.

📌 Key Business Considerations for AI Governance

🔹 How critical is AI to core business operations?

🔹 What are the financial, operational, and legal risks of AI failures?

🔹 How does AI compliance impact customer trust, legal liability, and market positioning?

Assessing AI Risks & Prioritising Governance Efforts (ISO 42001 Clause 6.1.2)

📌 Once AI objectives are defined, organisations must conduct an AI risk assessment and prioritise AI governance efforts accordingly.

Risk-Based Prioritisation:

✅ AI systems making high-risk decisions (financial risk scoring, hiring automation, healthcare diagnostics) require stronger governance and regulatory oversight.

✅ AI models handling sensitive personal data (biometric authentication, facial recognition) demand higher security controls (ISO 27701 alignment for privacy protection).

✅ AI-driven automation tools with low-risk exposure (chatbots, automated scheduling AI) may require less stringent but still auditable governance measures.
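The three prioritisation rules above can be expressed as a small decision function. This is a minimal sketch under stated assumptions: the tier names (`high`, `elevated`, `standard`) and the two boolean attributes are illustrative simplifications, and a real classification would also account for the regulatory categories that apply in each jurisdiction, such as the EU AI Act's high-risk list.

```python
from dataclasses import dataclass


@dataclass
class AISystemProfile:
    name: str
    high_impact_decisions: bool    # e.g. financial risk scoring, hiring, healthcare diagnostics
    sensitive_personal_data: bool  # e.g. biometric authentication, facial recognition


# Governance treatment per tier, paraphrasing the three rules above.
TIER_TREATMENT = {
    "high": "stronger governance and regulatory oversight",
    "elevated": "higher security and privacy controls (ISO 27701 alignment)",
    "standard": "less stringent but still auditable governance",
}


def governance_tier(system: AISystemProfile) -> str:
    """Return the highest applicable tier; decision impact outranks data sensitivity."""
    if system.high_impact_decisions:
        return "high"
    if system.sensitive_personal_data:
        return "elevated"
    return "standard"


systems = [
    AISystemProfile("hiring-screening-model", True, False),
    AISystemProfile("voice-authentication", False, True),
    AISystemProfile("meeting-scheduler-bot", False, False),
]

for s in systems:
    tier = governance_tier(s)
    print(f"{s.name}: {tier} -> {TIER_TREATMENT[tier]}")
```

The rules are checked from most to least severe, so a system that both makes high-impact decisions and processes biometric data lands in the `high` tier rather than `elevated`.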

📌 Aligning AI Scope with Key Business Priorities

To ensure AI governance aligns with business objectives, organisations must:

1️⃣ Define AI Governance Priorities:

- Is the goal regulatory compliance? (Ensure AI meets GDPR, the EU AI Act, and other relevant laws and regulations)

- Is security the main concern? (Prevent AI adversarial attacks, data leaks, and unauthorised use)

- Is explainability required? (Improve AI decision-making transparency and accountability)

2️⃣ Assess AI Risk Tolerance:

- High-Risk AI: Medical AI, autonomous driving, predictive law enforcement, financial fraud detection, AI-based hiring systems

- Medium-Risk AI: AI-powered customer segmentation

- Low-Risk AI: AI-driven email filtering, AI chatbots for internal use

3️⃣ Align Third-Party AI Governance:

- Assess third-party AI vendor risks (e.g., OpenAI API models, Google AI services).

- Ensure external AI models meet governance policies before integration.

🚀 ACTION:

✅ Conduct a stakeholder meeting (executives, data scientists, compliance officers) to align AI objectives, risk priorities, and governance scope.

✅ Document all AI systems under governance and map AI risks to ISO 42001 Annex A AI controls.

💡 TIP: Regularly review AI governance alignment as regulations evolve (e.g., EU AI Act updates, changes in AI risk classifications).

AI Asset Mapping & Business Alignment

✅ Categorise AI-related assets (models, data, decision workflows, third-party tools).

✅ Define how AI governance aligns with security, risk, and compliance goals.

✅ Assess AI risk prioritisation based on model sensitivity and regulatory exposure.

✅ Identify and document AI dependencies (external vendors, cloud AI, federated AI systems).

✅ Map AI systems to ISO 42001 clauses to ensure compliance coverage.

Ensuring AI Governance Success

A well-defined AI asset inventory and governance alignment strategy enables organisations to:

✅ Mitigate AI security risks & prevent adversarial attacks.

✅ Ensure compliance with evolving global AI regulations and frameworks (GDPR, EU AI Act, NIST AI RMF).

✅ Improve AI transparency, fairness, and ethical accountability.

✅ Align AI governance with business strategy, competitive advantage, and customer trust.

Practical Steps to Defining Your AI Management System (AIMS) Scope

Defining the scope of your AI Management System (AIMS) is a critical foundation for AI governance, security, compliance, and ethical responsibility under ISO 42001. A well-documented scope ensures that AI systems, risks, and stakeholders are properly managed, reducing regulatory non-compliance, AI security failures, and bias risks.

This section provides practical steps to establishing a well-structured AIMS scope, ensuring alignment with AI governance objectives, risk management strategies, and international AI regulations.

1. Compile AIMS Scoping Documentation (ISO 42001 Clauses 4.3 & 8.1)

AIMS scope documentation must include the following key components to clearly define governance responsibilities, AI risks, and compliance coverage:

📌 Scope Statement (ISO 42001 Clause 4.3 – Defining Scope)

- Defines what AI-driven processes, models, and decisions are included/excluded.

- Establishes the AI lifecycle stages under governance (development, deployment, monitoring).

- Specifies applicable AI regulations, security requirements, and ethical principles.

📌 Context of the Organisation (ISO 42001 Clause 4.1 – Organisational Context)

- Identifies internal and external factors affecting AI governance.

- Considers business objectives, industry-specific AI risks, and ethical responsibilities.

- Accounts for regulatory compliance obligations (e.g., EU AI Act, GDPR) and related standards (e.g., ISO 27001/27701).

📌 Interested Parties and Their Requirements (ISO 42001 Clause 4.2 – Stakeholder Considerations)

- Identifies key internal and external AI stakeholders (AI teams, compliance officers, regulators, customers, third-party AI vendors).

- Documents their compliance expectations, ethical considerations, and legal obligations.

- Ensures governance alignment with AI risk management best practices.

📌 AI System Interfaces and Dependencies (ISO 42001 Clause 4.4 – AI System Interactions)

- Lists internal AI system interfaces (data pipelines, model repositories, security frameworks).

- Documents external AI dependencies (third-party AI vendors, federated learning networks, AI-as-a-service platforms).

- Establishes controls for AI security, model versioning, and explainability monitoring.

📌 AI Asset Inventory (ISO 42001 Clause 8.1 & Annex A.4 – Resources for AI Systems)

- Detailed list of AI models, training datasets, real-time AI data feeds, inference engines, and deployment environments.

- Includes AI-powered decision-making systems, autonomous systems, and generative AI applications.

- Covers data governance policies for AI datasets, ensuring compliance with privacy laws (GDPR, CCPA).

2. Supporting Documentation for AI Governance Scope

To ensure audit readiness and compliance transparency, organisations should maintain supporting documentation as part of their AIMS scope.

📌 Risk Assessment & Treatment Documentation (ISO 42001 Clause 6.1.2 – AI Risk Assessment)

- Identifies AI-related risks (bias, adversarial attacks, model drift, data poisoning).

- Defines AI security mitigation strategies (explainability, fairness, adversarial defence).

📌 AI Governance Structure Diagram (ISO 42001 Clause 5.3 – Roles, Responsibilities and Authorities)

- Maps AI compliance officers, AI risk managers, model developers, and security teams.

- Ensures AI governance accountability across all AI lifecycle stages.

📌 AI Process & Workflow Documentation (ISO 42001 Clause 8.1 & Annex A.6 – AI System Life Cycle)

- Details AI model development pipelines, monitoring frameworks, and compliance checkpoints.

- Establishes explainability and accountability mechanisms for high-risk AI models.

📌 Network & AI System Architecture Diagram (ISO 42001 Clause 8.1 – AI System Controls)

- Visual representation of AI models, APIs, cloud AI deployments, and data flows.

- Identifies AI model storage, security perimeters, and access control policies.

📌 Regulatory & Legal Documentation (ISO 42001 Clauses 4.1 & 4.2 – Legal and Regulatory Requirements)

- Includes GDPR compliance policies for AI handling personal data.

- Documents AI security policies aligned with the NIST AI RMF and EU AI Act requirements.

📌 Third-Party AI Vendor & Supplier Documentation (ISO 42001 Annex A.10 – Third-Party and Customer Relationships)

- Includes contracts, risk assessments, and security audits for third-party AI providers.

- Ensures third-party AI models comply with AI governance policies before deployment.

3. Actionable Steps for AI Governance Teams

🚀 Step 1: Develop an AIMS Scope Statement

✅ Clearly define which AI systems, decisions, and data sources fall under AIMS governance.

✅ Specify AI lifecycle coverage (training, deployment, monitoring, decommissioning).

✅ Justify any AI system exclusions with risk assessments.

🚀 Step 2: Map AI Stakeholders & Compliance Responsibilities

✅ Identify internal teams managing AI governance (compliance officers, data scientists, risk managers).

✅ List external stakeholders (regulators, customers, auditors, AI ethics groups).

✅ Ensure stakeholders’ compliance and AI risk mitigation expectations are documented.

🚀 Step 3: Conduct an AI Risk Assessment

✅ Identify AI risks (bias, adversarial threats, explainability gaps, regulatory exposure).

✅ Align AI risk treatment strategies with ISO 42001 Annex A AI controls.

✅ Document risk treatment plans and security mitigations.
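A common way to document and rank AI risks is a likelihood × impact score. ISO 42001 does not mandate a particular scoring method, so treat the 1 to 5 scales, the `TREATMENT_THRESHOLD` of 12 and the example risks below as assumptions to replace with your organisation's own risk criteria.

```python
from dataclasses import dataclass

# Assumed cut-off on a 1-25 scale; define this in your own risk acceptance criteria.
TREATMENT_THRESHOLD = 12


@dataclass
class AIRisk:
    system: str
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

    def needs_treatment(self) -> bool:
        return self.score >= TREATMENT_THRESHOLD


register = [
    AIRisk("credit-scoring-v2", "bias against protected groups in training data", 3, 5),
    AIRisk("support-chatbot", "adversarial input manipulation leading to data leakage", 4, 3),
    AIRisk("email-filter", "explainability gap in spam decisions", 2, 2),
]

# Highest scores first, so treatment plans start with the most severe risks.
for risk in sorted(register, key=lambda r: r.score, reverse=True):
    flag = "TREAT" if risk.needs_treatment() else "accept/monitor"
    print(f"{risk.score:>2}  {flag:<14} {risk.system}: {risk.description}")
```

Each risk flagged for treatment would then get a documented treatment plan mapped to the relevant Annex A controls, while lower-scoring risks are accepted and monitored.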

🚀 Step 4: Document AI System Interfaces & Dependencies

✅ List internal AI model repositories, data pipelines, and inference engines.

✅ Identify third-party AI vendors, cloud AI services, and API integrations.

✅ Implement security policies for external AI interactions.

🚀 Step 5: Maintain AI Compliance & Audit Documentation

✅ Establish version-controlled AI governance policies.

✅ Prepare for ISO 42001 certification audits by ensuring traceability of AI decisions, risk assessments, and security controls.

✅ Continuously update governance scope documentation as AI regulations evolve.

4. Final Checklist for Defining AIMS Scope (ISO 42001)

✅ Define scope statement (AI lifecycle coverage, compliance obligations, exclusions).

✅ List internal/external AI governance factors (regulatory, ethical, security risks).

✅ Identify all AI models, datasets, and decision-making systems in scope.

✅ Document AI stakeholders’ compliance expectations.

✅ Establish AI system interfaces, security perimeters, and dependency controls.

✅ Maintain a structured AI risk assessment and compliance report.

Why a Well-Defined AIMS Scope is Essential

A properly documented AIMS scope ensures:

✅ Regulatory compliance with global AI laws (EU AI Act, GDPR) and alignment with recognised frameworks (ISO 42001, NIST AI RMF).

✅ Mitigation of AI-specific risks (bias, adversarial attacks, explainability gaps).

✅ Alignment of AI governance with business objectives and ethical responsibilities.

✅ Audit-ready documentation for ISO 42001 certification.

Consulting with Key Stakeholders & Avoiding Pitfalls in AI Management System (AIMS) Scope Definition

The implementation of an AI Management System (AIMS) under ISO 42001 requires cross-functional collaboration between executives, AI engineers, compliance teams, legal experts, and external stakeholders. Involving the right decision-makers early ensures that AI governance aligns with business strategy, regulatory requirements, security controls, and ethical AI deployment.

1. Consulting with Key Stakeholders (ISO 42001 Clause 4.2 & 5.2)

📌 Stakeholder involvement is essential for successful AI governance, ensuring that all risks, regulatory requirements, and ethical concerns are addressed throughout the AI lifecycle.

🔹 Why Stakeholder Engagement is Critical for AIMS

- Ensures that AI governance is aligned with business objectives and organisational strategy.

- Helps identify AI-specific risks, biases, security concerns, and regulatory compliance obligations.

- Encourages early buy-in from executives, compliance teams, and technical teams, reducing resistance to AI governance controls.

- Improves risk management strategies by incorporating insights from legal, security, and operational teams.

- Enables continuous adaptation of AIMS scope as AI regulations and risks evolve.

🔹 Key Stakeholders in AIMS Implementation

✅ Executive Leadership – Provides strategic direction, funding, and resource allocation.

✅ AI & Machine Learning Teams – Manage AI model development, deployment, monitoring, and security.

✅ Data Governance & Privacy Teams – Ensure compliance with GDPR, AI Act, ISO 27701 regarding AI-driven data processing.

✅ Legal & Compliance Officers – Identify legal obligations, mitigate AI-related liabilities, and oversee regulatory compliance.

✅ IT & Cybersecurity Teams – Secure AI infrastructure, prevent adversarial AI attacks, and implement security controls.

✅ Human-AI Interaction Specialists – Address concerns related to AI explainability, fairness, and bias mitigation.

✅ External Regulatory & Industry Bodies – Ensure AI systems meet industry-specific and government AI regulations.

🚀 Actionable Steps:

✅ Host stakeholder meetings to define AIMS priorities and discuss AI governance responsibilities.

✅ Assign ownership for AI compliance, risk management, and security within different teams.

✅ Conduct stakeholder interviews to identify AI risks, ethical concerns, and business needs.

💡 TIP: Maintain ongoing stakeholder engagement by scheduling regular AI governance reviews, keeping teams aligned as AI regulations evolve.

2. Avoiding Common Pitfalls When Defining AIMS Scope (ISO 42001 Clause 4.3)

📌 A poorly defined AIMS scope can lead to compliance failures, security risks, and misalignment with business goals. Below are key pitfalls to avoid during the scoping process.

🔹 Defining an AIMS Scope That is Too Broad or Too Narrow

🚫 Overly Broad Scope:

- Trying to govern all AI-driven processes without prioritisation can overwhelm resources.

- Leads to unmanageable AI risk controls, excessive costs, and compliance inefficiencies.

🚫 Overly Narrow Scope:

- Excluding critical AI applications in high-risk areas (finance, healthcare, automated decision-making) creates compliance blind spots.

- Ignores AI governance gaps in external AI model dependencies or third-party AI integrations.

✅ Best Practice:

📌 Prioritise AI governance based on AI risk levels (e.g., high-risk AI in medical, legal, or financial decisions should be a top priority).

📌 Focus on AI models that significantly impact users, customers, or regulatory compliance.

🔹 Failing to Engage Key AI Stakeholders

🚫 Excluding compliance, IT, or legal teams from the AIMS planning process results in:

- Regulatory misalignment – Missing legal obligations under GDPR or the EU AI Act, or expectations set by frameworks such as the NIST AI RMF.

- Security gaps – AI systems lack cybersecurity controls, increasing adversarial attack risks.

- Ineffective risk management – AI bias, model drift, and ethical concerns go unaddressed.

✅ Best Practice:

📌 Form a cross-functional AI governance committee to oversee AIMS implementation.

📌 Ensure all AI risk owners (legal, compliance, security, data science) contribute to AIMS scope definition.

🔹 Overlooking Legal & Regulatory Requirements (ISO 42001 Clauses 4.1 & 4.2)

🚫 Not accounting for AI laws and regulations leads to non-compliance risks, including:

- GDPR violations due to improper AI-based data processing.

- AI Act penalties for high-risk AI applications failing transparency requirements.

- Failure to meet explainability and fairness requirements in AI-driven decision-making.

✅ Best Practice:

📌 Map ISO 42001 requirements to applicable AI regulations and frameworks (GDPR, EU AI Act, ISO 27701, NIST AI RMF).

📌 Ensure AI governance policies explicitly define compliance obligations.

🔹 Excluding Critical AI Information & Assets

🚫 Not identifying and documenting AI-related assets can lead to governance blind spots.

- AI models may lack explainability tracking.

- Training datasets may not have bias mitigation controls.

- AI decisions may not be auditable, violating regulatory requirements.

✅ Best Practice:

📌 Create an AI asset inventory listing models, datasets, and decision workflows covered by AIMS.

📌 Document AI model lifecycle phases to ensure security, fairness, and compliance.

🔹 Underestimating AI Resource & Budget Needs (ISO 42001 Clause 7.1)

🚫 Failing to allocate resources for AI governance leads to:

- Unmonitored AI risks (bias, security, adversarial attacks).

- Incomplete compliance processes, increasing legal exposure.

- Lack of AI governance personnel, resulting in regulatory violations.

✅ Best Practice:

📌 Define AI compliance budget needs upfront (e.g., risk assessments, AI audits, third-party compliance tools).

📌 Ensure leadership supports long-term AI governance investment.

Checklist for AIMS Stakeholder Engagement & Scope Definition

📍 Key ISO 42001 Clauses Addressed:

✅ Clause 4.2 – Define key AI stakeholders and their governance roles.

✅ Clause 4.3 – Establish scope boundaries, listing included/excluded AI systems.

✅ Clause 5.2 – Align AI governance with organisational strategy.

✅ Clause 5.3 – Assign roles, responsibilities, and authorities for AI governance, including regulatory compliance.

✅ Clause 7.1 – Allocate necessary resources for AI risk management and compliance.

📌 Actionable Steps for AI Governance Teams:

✅ Conduct a stakeholder analysis to define roles and responsibilities.

✅ Ensure AI risk management is aligned with compliance regulations.

✅ Document AI assets, decision workflows, and security dependencies.

✅ Allocate necessary funding and personnel for long-term AI compliance.

Why AI Stakeholder Engagement & Scope Definition Matter

📌 A well-defined AI governance scope ensures organisations:

✅ Avoid compliance risks with GDPR, AI Act, ISO 42001, and NIST AI RMF.

✅ Effectively manage AI security risks, adversarial threats, and bias mitigation.

✅ Align AI governance with business strategy, ethics, and transparency expectations.

✅ Ensure cross-functional teams support AI governance for long-term sustainability.

Building Out AI Risk Management Functionality

(A Tactical Approach to AI Risk Governance and Security)

Artificial Intelligence introduces a unique set of risks—far removed from traditional information security threats. Organisations deploying AI systems must account for bias, model drift, adversarial manipulation, and opaque decision-making—all of which can lead to regulatory violations, security breaches, or reputational damage.

Unlike conventional IT risk management frameworks, AI risk assessment demands continuous oversight, adversarial testing, and bias mitigation strategies. ISO 42001 Clauses 6.1.2 (AI risk assessment) and 6.1.3 (AI risk treatment) require a structured, risk-based process through which organisations identify, categorise, and remediate AI vulnerabilities spanning data integrity, algorithmic security, and compliance gaps.

Defining AI Risk Categories

To build an effective AI risk management framework, organisations must first establish a precise classification of AI-specific risks:

1. Bias & Fairness Risks

- Algorithmic bias: AI models trained on imbalanced data sets may produce discriminatory outcomes, leading to regulatory penalties (GDPR, AI Act).

- Data set contamination: Inaccurate, incomplete, or non-representative training data can reinforce systemic inequalities.

- Fairness drift: Over time, AI models may degrade, amplifying bias as real-world data shifts.

2. AI Security & Adversarial Risks

- Data poisoning: Attackers manipulate training data to influence AI predictions.

- Adversarial inputs: Maliciously crafted data points deceive AI models, causing misclassification or incorrect decisions.

- Model inversion attacks: Threat actors extract sensitive training data by probing AI models.

3. Explainability & Compliance Risks

- Opaque decision-making: Black-box models lack explainability, which can breach EU AI Act transparency obligations and undermine the transparency objectives of ISO 42001.

- Regulatory non-compliance: AI decisions affecting finance, healthcare, and hiring must be auditable and legally defensible.

- Lack of human oversight: Unchecked automation in high-stakes applications (e.g., credit scoring, fraud detection) can escalate liability.

4. Data Integrity & Privacy Risks

- Personally Identifiable Information (PII) exposure: AI models trained on personal data must comply with ISO 27701 and GDPR mandates.

- Shadow AI models: Unmonitored AI deployments introduce compliance risks, often lacking security governance.

ISO 42001 follows a risk-based AI governance approach, meaning that identifying AI-related risks is essential in determining which AI controls, security measures, and monitoring mechanisms should be implemented.

📌 ISO 42001 Clause 6.1.2 relates to the process of identifying AI risks and conducting AI risk assessments. This clause requires organisations to identify risks to AI transparency, fairness, security, and compliance that could arise from data sources, algorithms, adversarial threats, and regulatory misalignment.

AI Risk Assessment Methodology (ISO 42001 Clause 6.1.2 & 8.2)

(A Strategic Approach to AI Governance, Security, and Compliance)

Artificial Intelligence presents a dynamic and evolving risk landscape that diverges significantly from traditional cybersecurity threats. While conventional IT systems rely on static controls, AI models introduce algorithmic bias, adversarial vulnerabilities, model drift, and explainability failures—each of which can have serious legal, ethical, and security implications.

ISO 42001 mandates a structured risk management framework, ensuring organisations proactively identify, evaluate, and mitigate AI risks across their AI Management System (AIMS). This process demands a risk-based governance model, leveraging continuous assessment, adversarial testing, and compliance-driven oversight to safeguard AI operations from regulatory violations, security breaches, and reputational fallout.

Key Objectives of AI Risk Assessment

To establish a resilient AI governance framework, organisations must:

✅ Identify and categorise AI-specific risks—including bias, adversarial attacks, security vulnerabilities, explainability failures, and regulatory non-compliance.

✅ Assign clear risk ownership to compliance officers, security teams, and data scientists, ensuring accountability.

✅ Implement a standardised AI risk scoring methodology, prioritising mitigation based on severity and potential business impact.

✅ Define AI risk thresholds and escalation triggers, determining when intervention, retraining, or decommissioning is required.

AI Risk Assessment Framework

Step 1: Identifying AI Risks Across Models & Systems

AI risk management begins with a systematic mapping of vulnerabilities, ensuring that risks are identified at every stage of the AI lifecycle. Some key AI-specific risks include:

🔹 Bias & Fairness Risks

- Algorithmic bias: Training data imbalances leading to discriminatory outcomes, violating regulatory standards (GDPR, AI Act).

- Data set contamination: Poorly curated training datasets introducing systemic discrimination.

- Model fairness drift: Degradation of fairness metrics over time as data distributions shift.

🔹 Explainability & Transparency Risks

- Opaque AI models: Black-box algorithms producing decisions that lack interpretability, violating compliance mandates (ISO 42001, GDPR).

- Auditability failures: AI decisions that cannot be reconstructed or justified to auditors.

- Regulatory non-compliance: Lack of AI documentation for sensitive applications in finance, healthcare, and legal industries.

🔹 Security & Adversarial Risks

- Adversarial attacks: Maliciously crafted inputs misleading AI models (e.g., evading fraud detection systems).

- Data poisoning: Attackers injecting manipulated data into AI training sets, skewing outcomes.

- Model inversion threats: Exploiting AI responses to extract sensitive training data.

🔹 AI Model Drift & Performance Risks

- Concept drift: AI models producing increasingly inaccurate predictions as underlying data patterns evolve.

- Unmonitored model degradation: AI systems operating beyond their intended lifespan without recalibration.

- Retraining gaps: Failure to update AI models with fresh, unbiased data sources.

🔹 Compliance & Regulatory Risks

- Personal data exposure: AI models inadvertently processing or inferring sensitive PII, breaching ISO 27701 and GDPR mandates.

- Shadow AI deployments: Unvetted AI applications operating outside organisational oversight, increasing liability.

- High-stakes automation risks: AI-driven decisions in finance, healthcare, or legal contexts that lack human oversight, leading to ethical concerns and regulatory scrutiny.

🚀 Actionable Step: Develop a risk register mapping AI models to governance, security, and compliance risks, ensuring real-time oversight.
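A risk register can start as one structured record per identified risk. The sketch below uses a minimal, hypothetical schema. The field names, statuses, model IDs and owners are illustrative assumptions, not anything ISO 42001 prescribes. Each record links an AI model to a risk, its category and an accountable owner. The register can then answer the basic question "what is still open for this model?"

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskCategory(Enum):
    """The AI risk categories used in this guide."""
    BIAS_FAIRNESS = "bias_fairness"
    EXPLAINABILITY = "explainability"
    SECURITY_ADVERSARIAL = "security_adversarial"
    DRIFT_PERFORMANCE = "drift_performance"
    COMPLIANCE = "compliance"


@dataclass
class RiskEntry:
    """One row of a hypothetical AI risk register."""
    risk_id: str
    model_id: str
    category: RiskCategory
    description: str
    owner: str             # accountable risk owner
    status: str = "open"   # hypothetical statuses: "open", "treated", "accepted"


@dataclass
class RiskRegister:
    entries: list[RiskEntry] = field(default_factory=list)

    def add(self, entry: RiskEntry) -> None:
        # Reject duplicate IDs so each risk has exactly one authoritative record.
        if any(e.risk_id == entry.risk_id for e in self.entries):
            raise ValueError(f"duplicate risk_id: {entry.risk_id}")
        self.entries.append(entry)

    def open_risks_for(self, model_id: str) -> list[RiskEntry]:
        return [e for e in self.entries
                if e.model_id == model_id and e.status == "open"]

    def by_category(self, category: RiskCategory) -> list[RiskEntry]:
        return [e for e in self.entries if e.category is category]


# Hypothetical example entries.
register = RiskRegister()
register.add(RiskEntry("R-001", "credit-scoring-v3", RiskCategory.BIAS_FAIRNESS,
                       "Approval rates differ across age groups", "Head of Credit Risk"))
register.add(RiskEntry("R-002", "credit-scoring-v3", RiskCategory.SECURITY_ADVERSARIAL,
                       "Training pipeline ingests unvetted external data", "CISO",
                       status="treated"))
register.add(RiskEntry("R-003", "support-chatbot-v1", RiskCategory.COMPLIANCE,
                       "Users are not told they are interacting with an AI system", "DPO"))

print([e.risk_id for e in register.open_risks_for("credit-scoring-v3")])  # ['R-001']
```

In practice the same structure usually lives in a GRC tool or database. Keeping the owner and status on every record is what makes the register auditable.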

Assigning AI Risk Ownership (ISO 42001 Clause 6.1.3)

AI governance demands clear accountability structures—without designated risk owners, AI failures can go undetected until they escalate into legal, financial, or reputational crises.

🔹 How to Assign AI Risk Ownership

✅ Map AI risks to business units—HR, finance, security, healthcare, legal teams, and compliance officers.

✅ Define clear governance roles—AI risk owners must have the authority to enforce governance controls and intervene when risks escalate.

✅ Ensure cross-functional oversight—collaboration between AI engineers, data privacy officers, and risk managers is critical for effective mitigation.

🚀 Actionable Step: Document AI risk ownership responsibilities within governance policies, ensuring transparency and accountability in risk treatment.

AI Risk Scoring & Categorisation (ISO 42001 Clause 6.1.2)

A quantitative risk assessment model enables organisations to prioritise AI vulnerabilities, ensuring that high-impact threats receive immediate attention.

🔹 AI Risk Calculation Methodology

AI risks should be evaluated based on likelihood and impact, ensuring a structured prioritisation model:

a) Determine Risk Likelihood

- How frequently could an AI risk materialise?

- How vulnerable is the AI model to adversarial threats or bias contamination?

- What is the historical frequency of AI-related compliance violations?

📌 Risk Likelihood Scale (1 – 10): 1️⃣ Very Low – Rarely occurs. 🔟 Very High – Almost certain to happen.

b) Evaluate Risk Impact

- What are the financial, legal, and reputational consequences if the AI model fails?

- Would AI misclassification result in regulatory fines, lawsuits, or compliance failures?

- Could AI-driven bias lead to public backlash or reputational harm?

📌 Risk Impact Scale (1 – 10): 1️⃣ Very Low – Minimal consequences. 🔟 Catastrophic Impact – Severe financial, legal, or reputational damage.

c) Calculate AI Risk Score

📌 Formula: Risk Score = Likelihood × Impact

| AI Risk Level | Risk Score Range | Required Actions |
|---|---|---|
| High Risk | 70 – 100 | Immediate mitigation required. |
| Medium Risk | 40 – 69 | Ongoing monitoring and adjustments. |
| Low Risk | 1 – 39 | Periodic risk review. |

🚀 Actionable Step: Implement a real-time AI risk matrix, scoring AI threats based on likelihood and impact to ensure proactive governance.
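The formula and bands above translate directly into code. This minimal sketch assumes integer ratings on the 1–10 scales described above and uses the table's thresholds. The function names are illustrative.

```python
def risk_score(likelihood: int, impact: int) -> int:
    """Risk Score = Likelihood x Impact, each rated on a 1-10 scale."""
    for name, value in (("likelihood", likelihood), ("impact", impact)):
        if not 1 <= value <= 10:
            raise ValueError(f"{name} must be between 1 and 10, got {value}")
    return likelihood * impact


def risk_level(score: int) -> str:
    """Map a 1-100 score to the bands in the table above."""
    if score >= 70:
        return "High Risk"      # immediate mitigation required
    if score >= 40:
        return "Medium Risk"    # ongoing monitoring and adjustments
    return "Low Risk"           # periodic risk review


# Example: adversarial evasion of a fraud model, rated likely (8) and severe (9).
score = risk_score(8, 9)
print(score, risk_level(score))  # 72 High Risk
```

Because both ratings run from 1 to 10, every possible score falls into exactly one band. Check one consequence of this design against your risk appetite. A pure product rates a catastrophic impact (10) with moderate likelihood (6) at 60, which is only Medium. Some organisations add an override that escalates any risk above a set impact rating, regardless of its score.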

Defining AI Risk Tolerance & Mitigation Strategies (ISO 42001 Clauses 6.1.1 & 6.1.3)

Every AI model should operate within a defined acceptable risk threshold; exceeding that threshold requires immediate intervention.

🔹 Establishing AI Risk Tolerance

✅ High-risk AI applications (e.g., autonomous medical diagnosis, financial fraud detection, and AI-driven recruitment; recruitment systems are classified as high-risk under the EU AI Act) require continuous monitoring and regulatory compliance oversight.

✅ Medium-risk AI models (e.g., customer profiling) require periodic audits and fairness testing.

✅ Low-risk AI implementations (e.g., AI chatbots, email filtering) require only minimal governance intervention.

🚀 Actionable Step: Define AI risk governance policies—outlining when AI models require modification, retraining, or decommissioning.
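Tolerance policies are easiest to enforce when they are written as explicit thresholds. The sketch below is hypothetical. The tier names follow this section, but several details are placeholder assumptions to replace with your own risk criteria:

- the monitored metric, a single degradation figure in percentage points against the validated baseline;
- every threshold value.

```python
# Hypothetical tolerance thresholds per risk tier: (retrain_above, decommission_above),
# expressed as degradation in percentage points versus the validated baseline.
TOLERANCE = {
    "high": (1.0, 5.0),
    "medium": (3.0, 10.0),
    "low": (5.0, 20.0),
}


def required_action(tier: str, degradation_pct: float) -> str:
    """Return the governance action for a model given its observed degradation."""
    retrain_above, decommission_above = TOLERANCE[tier]
    if degradation_pct > decommission_above:
        return "decommission"   # far outside tolerance: withdraw and review
    if degradation_pct > retrain_above:
        return "retrain"        # outside tolerance: modify or retrain
    return "continue"           # within tolerance: keep monitoring


print(required_action("high", 2.5))    # retrain
print(required_action("medium", 2.5))  # continue
print(required_action("low", 25.0))    # decommission
```

The same 2.5-point degradation triggers retraining for a high-risk model but leaves a medium-risk model in service. This is the tiered behaviour described above.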

Key Takeaways

- AI risk assessment must be continuous—AI threats evolve rapidly; governance frameworks must be proactive.

- Regulatory compliance is non-negotiable—AI-driven decisions must align with GDPR, ISO 27701, and ISO 42001 mandates.

- AI models must be auditable & explainable—ensuring transparency, fairness, and accountability is critical for AI credibility.

- Security & bias mitigation go hand-in-hand—defensive adversarial testing and fairness audits must be integral to AI risk frameworks.

Conducting AI Risk Assessments (ISO 42001 Clause 8.2)

Ensuring robust AI governance demands a systematic, data-driven risk assessment framework that identifies vulnerabilities before they escalate into compliance failures or security breaches. ISO 42001 Clause 8.2 requires organisations to perform AI risk assessments at planned intervals, or when significant changes are proposed, and to retain documented results. In practice, this calls for continuous monitoring, forensic analysis, and regulatory alignment.

Key Data Sources for AI Risk Evaluation

1. AI Stakeholder Intelligence

Interviews with internal stakeholders—AI engineers, compliance officers, cybersecurity teams, and legal advisors—help uncover systemic vulnerabilities.

- Identify risk factors related to model transparency, bias, and explainability.

- Cross-reference stakeholder concerns with existing governance policies.

- Correlate insights with operational failures to detect latent security gaps.

2. AI Security Stress Testing (ISO 42001 Clause 8.3 – AI Risk Treatment)

Rigorous security testing is fundamental to assessing an AI model’s resilience against cyber threats and manipulation.

- Conduct penetration testing to simulate real-world adversarial attacks.

- Use data poisoning simulations to evaluate AI model susceptibility.

- Apply adversarial input testing to measure how vulnerable inference pipelines are to exploitation.
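A data poisoning simulation compares a model trained on clean data with the same model trained on deliberately corrupted data. The sketch below uses a tiny stand-in: a pure-Python nearest-centroid classifier and a hand-made two-dimensional dataset. Both are illustrative assumptions, not a recommended model or attack. The simulated attack injects mislabelled records, and the resulting drop in test accuracy is the susceptibility signal.

```python
import math
from statistics import fmean

Point = tuple[float, ...]


def fit_centroids(data: list[tuple[Point, int]]) -> dict[int, Point]:
    """Train a nearest-centroid classifier: the mean feature vector per class."""
    by_label: dict[int, list[Point]] = {}
    for x, y in data:
        by_label.setdefault(y, []).append(x)
    return {y: tuple(fmean(dim) for dim in zip(*xs)) for y, xs in by_label.items()}


def predict(centroids: dict[int, Point], x: Point) -> int:
    """Assign x to the class whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda y: math.dist(x, centroids[y]))


def accuracy(centroids: dict[int, Point], data: list[tuple[Point, int]]) -> float:
    return sum(predict(centroids, x) == y for x, y in data) / len(data)


# Hypothetical toy data: two well-separated classes in two dimensions.
clean_train = [((0, 0), 0), ((2, 0), 0), ((0, 2), 0), ((2, 2), 0),
               ((6, 6), 1), ((8, 6), 1), ((6, 8), 1), ((8, 8), 1)]
test = [((1, 1), 0), ((3, 3), 0), ((5, 5), 1), ((6, 6), 1), ((8, 8), 1)]

# Simulated attack: inject records from the class-1 region mislabelled as class 0.
poisoned_train = clean_train + [((7, 7), 0)] * 4

clean_acc = accuracy(fit_centroids(clean_train), test)
poisoned_acc = accuracy(fit_centroids(poisoned_train), test)
print(f"clean={clean_acc:.2f} poisoned={poisoned_acc:.2f}")  # clean=1.00 poisoned=0.80
```

In a real assessment you would run the same comparison against the production training pipeline. Vary the fraction of poisoned records and record the resulting degradation curve in the risk register.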

3. AI Risk Profiling via Forensic Document Review

A forensic analysis of AI governance documents ensures compliance with international standards.

- Audit risk registers and previous incident reports for recurring patterns.

- Validate AI model audit trails against ISO 42001 and GDPR transparency requirements.

- Review security controls against AI Act compliance benchmarks.

4. Regulatory & Legal Compliance Analysis (ISO 42001 Clauses 4.1 & 4.2)

Failure to align AI governance frameworks with legal mandates invites litigation and reputational damage.

- Map AI security policies to GDPR and the EU AI Act, and to voluntary frameworks such as the NIST AI RMF.

- Identify gaps in data protection, accountability, and transparency.

- Evaluate AI decision logic against the explainability and transparency obligations set by regulators.

5. AI Risk Exposure in Supply Chains (ISO 42001 Annex A.10 – Third-Party and Customer Relationships)

Third-party AI models introduce unverified security and compliance risks, often exploited via API integrations.

- Conduct security audits of external AI vendors.

- Validate model lineage to ensure training datasets comply with privacy laws.

- Implement automated compliance tracking for third-party AI dependencies.

6. AI Bias & Fairness Vulnerability Analysis

Unchecked bias in AI models can lead to legal liabilities, discriminatory outcomes, and ethical violations.

- Apply statistical bias detection algorithms to audit model fairness.

- Implement multi-phase bias mitigation strategies from data preprocessing to model training.

- Perform impact assessments on AI decisions affecting high-risk domains like finance, healthcare, and law enforcement.
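Statistical bias detection often starts by comparing outcome rates across groups. This sketch computes two common group-fairness metrics for a binary decision: demographic parity difference and disparate impact ratio. The following are illustrative assumptions:

- the group labels and the sample data;
- using the 0.8 "four-fifths" rule of thumb from US employment-selection guidance as the flag.

The right metric and threshold depend on the use case and jurisdiction.

```python
from collections import defaultdict


def selection_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive outcomes (1 = approved) within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def fairness_report(predictions: list[int], groups: list[str]) -> dict:
    rates = selection_rates(predictions, groups)
    lowest, highest = min(rates.values()), max(rates.values())
    return {
        "selection_rates": rates,
        # Demographic parity difference: gap between most and least favoured group.
        "parity_difference": highest - lowest,
        # Disparate impact ratio: least favoured rate divided by most favoured rate.
        "disparate_impact_ratio": lowest / highest if highest > 0 else 1.0,
    }


# Hypothetical audit sample: 10 decisions for each of two groups.
predictions = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0] + [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
groups = ["A"] * 10 + ["B"] * 10

report = fairness_report(predictions, groups)
print(report["selection_rates"])                   # {'A': 0.6, 'B': 0.3}
print(round(report["disparate_impact_ratio"], 2))  # 0.5
# Flag using the "four-fifths" rule of thumb (ratio below 0.8).
flagged = report["disparate_impact_ratio"] < 0.8
print(flagged)  # True
```

Group-rate metrics like these are a screening step. A flagged result calls for a closer look at the data and features behind the gap, not an automatic conclusion of discrimination.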

7. AI Governance Gap Analysis (ISO 42001 Clause 9.2)

A proactive approach to governance ensures AI risk mitigation aligns with regulatory expectations.

- Cross-check current AI governance policies against ISO 42001 control frameworks.

- Identify weak spots in AI risk assessments, compliance reporting, and security policies.

- Benchmark AI risk exposure against industry-specific AI risk matrices.

8. AI Incident Response & Anomaly Detection

AI failures must be anticipated and addressed through real-time anomaly detection and forensic investigation.

- Maintain historical AI incident records to track failure trends.

- Deploy anomaly detection systems to flag deviations from expected AI behaviour.

- Develop root-cause analysis workflows for investigating governance breakdowns.
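A simple way to flag deviations from expected AI behaviour is to compute a rolling z-score over a monitored metric, such as daily approval rate or mean prediction confidence. This is a minimal standard-library sketch. The metric, window size and 3-sigma threshold are assumptions to tune, and dedicated monitoring tools offer more robust detectors.

```python
from statistics import fmean, pstdev


def flag_anomalies(values: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indices whose value lies more than `threshold` standard deviations
    from the mean of the preceding `window` values (rolling z-score)."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mean, std = fmean(history), pstdev(history)
        if std == 0:
            # Flat history: any change at all counts as a deviation.
            if values[i] != mean:
                flagged.append(i)
        elif abs(values[i] - mean) / std > threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily approval rate of a deployed credit model.
approval_rate = [0.42, 0.41, 0.43, 0.42, 0.41, 0.42, 0.43, 0.61, 0.42, 0.41]
print(flag_anomalies(approval_rate))  # [7]
```

A spike inflates the window's standard deviation for the next few points and can mask a second anomaly. Production detectors often use robust statistics (median and MAD) or exclude flagged points from the baseline.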

9. AI Business Impact Assessment

AI governance isn’t just about compliance—it’s about operational resilience.

- Quantify financial risks of AI-driven decision failures.

- Assess legal exposure from biased AI models.

- Calculate the cost of regulatory non-compliance and potential fines.

Actionable Next Steps

🔹 Implement an AI risk intelligence dashboard to track governance risks in real time.

🔹 Establish a continuous AI audit cycle for dynamic risk detection.

🔹 Automate compliance alerts to flag governance deviations before regulatory infractions occur.

The Bottom Line

AI governance demands a proactive, forensic, and legally fortified risk assessment approach. By embedding these strategies into your AI Management System (AIMS), you safeguard your organisation against regulatory penalties, security threats, and reputational damage.

AI Risk Categorisation & Prioritisation (ISO 42001 Clause 6.1.2)

AI risk assessment isn’t just a compliance checkbox; it’s a strategic necessity. Effective categorisation and prioritisation ensure governance teams focus on the most pressing threats while balancing risk tolerance with business continuity.

Breaking Down AI Risk Categories

AI risks must be evaluated based on severity, impact, and the level of intervention required. Misclassification leads to blind spots in governance, increasing exposure to regulatory penalties and security failures.

| Risk Level | Examples | Mitigation Strategy |
|---|---|---|
| High Risk | AI models affecting human rights, finance, legal decisions, or healthcare outcomes. | Immediate intervention required. Implement real-time monitoring, enforce strict regulatory compliance, and introduce fail-safes for human oversight. |
| Medium Risk | AI systems introducing moderate security vulnerabilities, such as access control loopholes or adversarial susceptibility. | Ongoing risk assessments and policy adjustments to detect and mitigate threats before escalation. |
| Low Risk | AI-driven automation with minimal legal, financial, or ethical consequences. | Document the risk acceptance rationale, monitor system behaviour, and reassess periodically. |

Strategic AI Risk Prioritisation

Failure to prioritise AI risks correctly can lead to cascading security failures and non-compliance. ISO 42001 Clause 6.1.2 requires assessed AI risks to be prioritised for treatment, and visualisation and tracking mechanisms help governance teams allocate resources accordingly.

🔹 Actionable Strategy: Deploy a real-time AI risk heat map to visualise governance gaps, highlight emerging security concerns, and assess compliance risk zones dynamically.
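A heat map is a count of risks per likelihood/impact cell. The sketch below renders a text-only 3 × 3 version from the 1–10 ratings used earlier in this guide. The three-band cut-offs (1–3, 4–6, 7–10) and the risk IDs are assumptions. A dashboard tool would normally draw this as a coloured grid.

```python
def band(rating: int) -> int:
    """Collapse a 1-10 rating into bands: 0 = low (1-3), 1 = medium (4-6), 2 = high (7-10)."""
    if not 1 <= rating <= 10:
        raise ValueError("rating must be between 1 and 10")
    return 0 if rating <= 3 else 1 if rating <= 6 else 2


def heat_map(risks: dict[str, tuple[int, int]]) -> list[list[int]]:
    """Count risks per cell. Rows are impact bands (high first); columns are
    likelihood bands (low to high)."""
    grid = [[0] * 3 for _ in range(3)]
    for likelihood, impact in risks.values():
        grid[2 - band(impact)][band(likelihood)] += 1
    return grid


risks = {  # hypothetical risk IDs -> (likelihood, impact)
    "R-001": (8, 9),
    "R-002": (7, 8),
    "R-003": (5, 9),
    "R-004": (2, 3),
    "R-005": (4, 5),
}

for label, row in zip(("High impact", "Med impact", "Low impact"), heat_map(risks)):
    print(f"{label:<12}", " ".join(f"{n:>2}" for n in row))
```

The top-right cell (high impact, high likelihood) is where governance attention should go first. Here it holds two risks.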

Best Practices for AI Risk Management (ISO 42001 Compliance)

Key Risk Mitigation Strategies

Effective AI risk management is a continual process of monitoring, auditing, and adaptation. Organisations should implement:

- Automated AI Risk Monitoring → Deploy tools that track bias, model drift, and security anomalies in real time.

- Frequent AI Audits → Conduct regular compliance reviews aligned with GDPR, AI Act, and ISO 42001 standards to ensure AI governance remains airtight.

- Version-Controlled Documentation → Maintain a comprehensive AI risk register with historical governance decisions, model changes, and risk treatment records.

- Human-in-the-Loop (HITL) Governance → Implement manual oversight mechanisms in AI decision workflows where automation risks ethical violations.
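One common HITL pattern routes a decision to a human reviewer whenever the model is unsure or the outcome is high-impact. The sketch assumes two things. First, the model exposes a confidence score. Second, the workflow can tag high-impact outcomes, such as declining a credit application. The 0.9 threshold and the field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    case_id: str
    outcome: str         # the model's proposed outcome, e.g. "approve" or "decline"
    confidence: float    # the model's confidence in that outcome, 0.0 to 1.0
    high_impact: bool    # e.g. an adverse decision about an individual


def route(decision: Decision, min_confidence: float = 0.9) -> str:
    """Auto-apply only confident, low-impact decisions; send the rest to a human."""
    if decision.high_impact or decision.confidence < min_confidence:
        return "human_review"
    return "auto"


# Hypothetical decisions from a credit workflow.
decisions = [
    Decision("C-1", "approve", 0.97, high_impact=False),
    Decision("C-2", "approve", 0.72, high_impact=False),
    Decision("C-3", "decline", 0.99, high_impact=True),
]
for d in decisions:
    print(d.case_id, route(d))
# C-1 auto
# C-2 human_review
# C-3 human_review
```

High-impact outcomes go to a reviewer regardless of confidence, because a model can be confidently wrong. Confidence gating only handles the routine cases.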

AI Risk Governance Compliance Checklist (ISO 42001 Certification-Ready)

✅ Define AI risk acceptance criteria based on security, ethics, and regulatory obligations.

✅ Conduct bias detection and security stress-testing to preempt compliance failures.

✅ Categorise AI risks based on severity and mitigation urgency for focused governance.

✅ Automate real-time AI risk tracking to prevent compliance drift.

✅ Ensure audit readiness for AI risk documentation, governance logs, and policy enforcement.

AI Risk Treatment & Governance Under ISO 42001:2023

Once an organisation has completed an AI risk assessment (ISO 42001 Clause 6.1.2 & 8.2), the next step is executing an effective risk treatment strategy. AI risks evolve over time, demanding an ongoing, adaptive governance framework.

Four AI Risk Treatment Strategies (ISO 42001 Clause 6.1.3 & Annex A Controls)

1️⃣ Decreasing AI Risk (Proactive Mitigation Approach)

- Risk Type: AI bias, adversarial threats, regulatory violations.

- Mitigation Strategy:

- Implement bias audits to assess AI fairness (ISO 42001 Annex A.7 – Data for AI Systems).

- Enhance explainability frameworks to improve AI decision transparency (ISO 42001 Annex A.8 – Information for Interested Parties of AI Systems).

- Use adversarial stress testing to detect vulnerabilities before exploitation (ISO 42001 Clause 8.3 – AI Risk Treatment).

- Establish AI incident response protocols for compliance breaches (ISO 42001 Clause 10.2 – Nonconformity and Corrective Action).

2️⃣ Avoiding AI Risk (Eliminating the Source of Harm)

- Risk Type: High-risk AI applications where mitigation isn’t feasible.

- Example: A predictive policing system disproportionately impacting marginalised communities.

- Risk Treatment:

- Decision: Discontinue AI-driven policing models, replacing them with human-supervised decision systems.

- Outcome: Avoids legal exposure under GDPR, AI Act, and civil rights laws.

3️⃣ Transferring AI Risk (Outsourcing Governance Responsibilities)

- Risk Type: High-cost AI security risks beyond internal management capacity.

- Example: A financial institution’s AI fraud detection system requiring stringent security oversight.

- Risk Treatment:

- Purchase cyber insurance against AI-related security failures (ISO 42001 Clause 6.1.3 – AI Risk Treatment Plans).

- Mandate third-party AI security audits for external vendors (ISO 42001 Annex A.10 – Third-Party and Customer Relationships).

- Require AI providers to hold ISO 27001 certification and SOC 2 attestation, backed by strict governance SLAs (ISO 42001 Annex A.10).

4️⃣ Accepting AI Risk (Documenting Risk Acceptance & Monitoring)

- Risk Type: Low-impact AI risks where mitigation costs outweigh the consequences.

- Example: AI-driven product recommendations in e-commerce experiencing minor accuracy drift.

- Risk Treatment:

- Decision: Accept the AI model drift since its impact is negligible.

- Justification: Frequent model updates are costly and unnecessary.

- Monitoring: Implement quarterly AI performance evaluations to ensure drift remains within acceptable limits.

🚀 Governance Best Practice: AI risk treatment plans must be documented, reviewed periodically, and aligned with evolving AI regulations.

Embedding AI Risk Management into Daily Operations

Managing AI risk isn’t a one-time checkbox—it’s an ongoing, evolving effort. Threat actors constantly probe machine learning (ML) models for weaknesses, while regulatory bodies tighten compliance demands. Organisations must build AI risk management directly into their operational DNA, ensuring threats are identified and mitigated before they escalate.

Operationalising AI Risk Management

AI risk mitigation must be a dynamic process woven into governance frameworks, regulatory reporting, and daily decision-making.

- Foster a Risk-Aware AI Culture: AI engineers, data scientists, and security professionals must be trained to recognise vulnerabilities such as adversarial inputs, model drift, and algorithmic bias. Regular security drills and cross-functional risk assessments ensure teams remain prepared for evolving threats.

- Automate AI Risk Detection and Response: Pair explainability toolkits such as IBM AI Explainability 360 with risk-monitoring platforms such as OpenRisk to continuously monitor for anomalies, unauthorised access, and compliance deviations. Automated alerts must trigger immediate investigations, reducing response time to potential model compromise.

- Cross-Departmental Risk Coordination: AI risk isn’t confined to a single team. It impacts legal, IT security, HR, marketing, and compliance functions. Establish an AI risk oversight board to coordinate mitigation strategies, ensuring every department plays its role in governance and response.

🚀 Best Practice: AI security must be a proactive, embedded function; reactive risk management invites costly failures.

AI Risk Treatment Scenarios in Real-World Applications

AI models now make critical decisions in finance, healthcare, law enforcement, and national security. When risks are ignored, the consequences can be catastrophic. Organisations must implement robust controls to mitigate these threats.

Securing AI in Cloud Environments

Cloud-hosted AI models are prime targets for data poisoning, adversarial ML attacks, and API exploitation.

✅️ Implement end-to-end encryption and network segmentation to isolate AI workloads from unauthorised access, and consider federated learning to keep sensitive training data decentralised.

✅️ Conduct continuous penetration testing on AI models to simulate attacks and strengthen defences.

✅️ Enforce ISO 42001-compliant AI security controls, ensuring AI processing aligns with recognised governance standards.

Preventing AI Model Drift in Healthcare

Inaccurate AI-driven diagnoses can cost lives. AI models used in medical applications must undergo continuous validation and fairness testing.

✅️ Apply real-time drift detection algorithms to ensure AI outputs remain aligned with current medical knowledge.

✅️ Conduct bias audits on training datasets to prevent demographic or systemic unfairness.

✅️ Implement performance monitoring under ISO 42001 Clause 9.1 (Monitoring, Measurement, Analysis and Evaluation) to enforce compliance and ensure the accuracy of AI-assisted diagnoses.
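Drift detection compares the distribution of live inputs or outputs against the distribution seen at validation. A common statistic for this is the Population Stability Index (PSI), and the sketch below computes it from pre-binned proportions. The bin layout and example proportions are assumptions. So are the 0.1 and 0.25 alert bands, which are a widely used industry rule of thumb, not an ISO 42001 requirement.

```python
import math


def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions.

    Both inputs are per-bin proportions (each summing to 1) over the same bins.
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # guard against log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


def drift_status(value: float) -> str:
    # Widely used rule-of-thumb bands; calibrate them for each model.
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate shift: investigate"
    return "significant shift: revalidate or retrain"


# Hypothetical share of patients per bin of a key input (e.g. a lab value),
# at validation time versus the last month of production.
baseline = [0.25, 0.25, 0.25, 0.25]
current = [0.10, 0.20, 0.30, 0.40]

value = psi(baseline, current)
print(round(value, 3), drift_status(value))  # 0.228 moderate shift: investigate
```

PSI only detects that the input population has changed. Whether diagnostic accuracy has actually degraded still has to be confirmed against labelled outcomes.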

Mitigating AI Bias in Financial Services

Financial AI models influence credit approvals, insurance policies, and risk assessments. Bias in these systems can result in discriminatory lending, legal challenges, and severe reputational damage.

✅️ Use explainability models to detect unfair weightings in AI-driven decisions.

✅️ Ensure AI bias mitigation frameworks comply with ISO 42001 and the GDPR fairness principle.

✅️ Mandate periodic AI model retraining with diverse datasets to reduce historical biases.
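Permutation importance is one model-agnostic way to see what a decision model actually relies on. You shuffle one feature across records and measure how often decisions change. The credit model, its postcode penalty and the data below are hypothetical. They are constructed to show how a proxy feature (postcode area) surfaces with non-zero importance while an unused feature scores zero. For production use, scikit-learn provides `sklearn.inspection.permutation_importance`.

```python
import random


def model(row: dict) -> int:
    """Hypothetical black-box credit model. It approves on income, but also
    penalises one postcode area, a possible proxy for a protected characteristic."""
    score = row["income"] / 10 - (3 if row["postcode_area"] == "Z" else 0)
    return int(score >= 5)


def permutation_importance(rows: list[dict], feature: str,
                           n_repeats: int = 20, seed: int = 0) -> float:
    """Average share of decisions that change when `feature` is shuffled."""
    reference = [model(r) for r in rows]  # the model's original decisions
    rng = random.Random(seed)             # seeded for reproducible audits
    total_changed = 0.0
    for _ in range(n_repeats):
        values = [r[feature] for r in rows]
        rng.shuffle(values)
        shuffled = [{**r, feature: v} for r, v in zip(rows, values)]
        changed = sum(model(s) != y for s, y in zip(shuffled, reference))
        total_changed += changed / len(rows)
    return total_changed / n_repeats


# Hypothetical applicant records.
rows = [
    {"income": 60, "postcode_area": "A", "tenure_years": 3},
    {"income": 70, "postcode_area": "Z", "tenure_years": 8},
    {"income": 55, "postcode_area": "Z", "tenure_years": 1},
    {"income": 40, "postcode_area": "A", "tenure_years": 5},
    {"income": 80, "postcode_area": "A", "tenure_years": 2},
    {"income": 65, "postcode_area": "Z", "tenure_years": 6},
]

for feature in ("income", "postcode_area", "tenure_years"):
    print(feature, round(permutation_importance(rows, feature), 3))
```

This variant measures how much decisions change, rather than how much accuracy drops against ground-truth labels. That suits auditing what a model relies on. A non-zero score for a feature such as `postcode_area` is the prompt to investigate whether it acts as a proxy.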

🚀 Best Practice: AI governance must be tailored to specific industry risks—financial AI failures can trigger lawsuits, while healthcare AI errors can be lethal.

AI Risk Treatment Framework for ISO 42001 Compliance

AI risk treatment is a structured, multi-layered approach designed to eliminate vulnerabilities, ensure compliance, and enhance AI integrity.

AI Risk Treatment Strategies

✅ Prioritise High-Risk AI Models – AI systems influencing finance, law enforcement, and healthcare require the highest level of scrutiny.

✅ Align AI Risk Management with Regulations – Ensure risk treatments comply with GDPR, ISO 42001, NIST AI RMF, and other global AI governance frameworks.

✅ Implement Real-Time AI Risk Monitoring – AI vulnerabilities evolve—continuous monitoring is mandatory to prevent compliance drift.

✅ Establish AI Risk Retention and Transfer Policies – Define whether an organisation absorbs AI-related risks or shifts responsibility through insurance and legal frameworks.

✅ Enforce Continuous AI Risk Audits – Regular audits validate AI model security, fairness, and reliability.

Why AI Risk Treatment is Non-Negotiable

Ignoring AI risks isn’t an option. AI-driven decisions now impact millions of people across industries, and failures carry severe regulatory and financial penalties.

✅ Regulatory Compliance – Non-compliance with GDPR or AI transparency laws can result in multimillion-euro fines, and ISO 42001 nonconformities can jeopardise certification.

✅ Security Vulnerabilities – Weak AI governance exposes models to adversarial attacks, leading to compromised decision-making and reputational damage.

✅ Fairness & Explainability – AI must be transparent, explainable, and unbiased—failure to meet these requirements will result in legal challenges and public backlash.

✅ Proactive Risk Mitigation – Treat AI risk management as a continuous process, not a one-time fix. Organisations that fail to do so will be playing catch-up in a landscape of evolving threats.

🚀 Best Practice: AI risk treatment isn’t just about compliance—it’s about trust, resilience, and ethical AI deployment.

Internal AI Audits (ISO 42001:2023)

AI systems are increasingly integral to decision-making in security, finance, and healthcare. However, without rigorous internal audits, organisations risk compliance failures, adversarial manipulation, and model bias. Internal AI audits under ISO 42001:2023 serve as a preemptive measure—ensuring governance frameworks are sound before regulators impose penalties.

Understanding Internal AIMS Audits

An internal AI Management System (AIMS) audit is an independent evaluation of an organisation’s AI governance framework. It determines whether AI risk management, security controls, bias mitigation, and compliance mechanisms conform to ISO 42001 and applicable regulatory requirements.

Key Considerations:

- Conducted by internal auditors or independent AI governance experts.

- Evaluates AI security, fairness, transparency, and compliance frameworks.

- Detects non-conformities before regulatory inspections.

- Helps prevent adversarial exploits and systemic AI biases.

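🚀 Best Practice: ISO 42001 Clause 9.2 requires internal audits at planned intervals to determine whether the AIMS conforms to the organisation’s own requirements and to the standard, and whether it is effectively implemented and maintained. Regular audits help keep AI systems transparent, accountable, and resilient against emerging threats.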

Core Requirements for Internal AI Audits (ISO 42001 Clause 9.2)

A comprehensive AI audit programme should be structured, impartial, and designed to detect vulnerabilities before they escalate.

Essential Audit Protocols

🔹 Audit Programme Development

✅ Design an annual or semi-annual audit plan, ensuring compliance with ISO 42001 AI governance requirements.

✅ Define audit scope, focusing on bias detection, adversarial resilience, and explainability.

✅ Ensure risk-based prioritisation—high-impact AI systems (finance, law enforcement, healthcare) require stricter compliance reviews.
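Risk-based prioritisation can be made concrete as a mapping from risk tier to audit interval. The sketch below is illustrative only: the tier names and intervals are assumptions, since ISO 42001 requires audits at planned intervals but does not prescribe specific frequencies.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical audit intervals per risk tier (illustrative, not set by ISO 42001).
AUDIT_INTERVAL_DAYS = {
    "high": 182,    # roughly semi-annual for high-impact systems
    "medium": 365,  # annual
    "low": 730,     # every two years
}


@dataclass
class AISystem:
    name: str
    risk_tier: str   # must be a key of AUDIT_INTERVAL_DAYS
    last_audit: date


def next_audit_due(system: AISystem) -> date:
    """Date on which the next internal audit of this system falls due."""
    return system.last_audit + timedelta(days=AUDIT_INTERVAL_DAYS[system.risk_tier])


def audit_schedule(systems: list[AISystem]) -> list[tuple[str, date]]:
    """(system name, due date) pairs, most urgent first."""
    return sorted(((s.name, next_audit_due(s)) for s in systems), key=lambda pair: pair[1])


# Hypothetical inventory used for illustration.
inventory = [
    AISystem("credit-scoring", "high", date(2024, 1, 15)),
    AISystem("chat-assistant", "low", date(2023, 6, 1)),
    AISystem("cv-screening", "high", date(2023, 11, 1)),
]
for name, due in audit_schedule(inventory):
    print(f"{name}: next audit due {due.isoformat()}")
```

With this sample inventory, the two high-risk systems come first (cv-screening on 2024-05-01, credit-scoring on 2024-07-15), even though the low-risk assistant was audited longest ago.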

🔹 Impartiality & Auditor Independence

✅ Auditors must be objective and impartial: people involved in developing an AI model should not audit it.

✅ External governance specialists may be engaged for high-risk AI applications.
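The independence requirement lends itself to a simple conflict check before audit assignments are confirmed. This sketch assumes a hypothetical record of who developed, deployed, or curated data for each model; the field names and people are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class ModelRecord:
    name: str
    developers: set[str] = field(default_factory=set)
    deployers: set[str] = field(default_factory=set)
    data_curators: set[str] = field(default_factory=set)

    def involved_people(self) -> set[str]:
        """Everyone with hands-on involvement in building or running the model."""
        return self.developers | self.deployers | self.data_curators


def conflicted_assignments(assignments: dict[str, str],
                           models: dict[str, ModelRecord]) -> list[tuple[str, str]]:
    """Return (model, auditor) pairs where the auditor was involved in that model.

    `assignments` maps model name -> assigned auditor.
    """
    conflicts = []
    for model_name, auditor in assignments.items():
        if auditor in models[model_name].involved_people():
            conflicts.append((model_name, auditor))
    return conflicts


# Hypothetical records and assignments.
models = {
    "credit-scoring": ModelRecord("credit-scoring", developers={"alice"}, deployers={"bob"}),
    "cv-screening": ModelRecord("cv-screening", data_curators={"carol"}),
}
assignments = {"credit-scoring": "bob", "cv-screening": "dave"}
print(conflicted_assignments(assignments, models))  # [('credit-scoring', 'bob')]
```

Here Bob deployed the credit-scoring model, so assigning him to audit it is flagged; Dave has no recorded involvement with cv-screening and passes.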

🔹 Documentation & Reporting

✅ AI audits must produce detailed governance reports, outlining security risks, compliance gaps, and mitigation strategies.

✅ Findings must be presented to compliance officers, risk teams, and executive leadership.

🚀 Best Practice: Internal AI audits should proactively identify compliance failures, rather than waiting for regulators to expose gaps.

Why Internal AIMS Audits Are Critical

Internal audits are a key line of defence against AI compliance violations, adversarial threats, and bias failures.

Key Benefits

✅ Early Identification of AI Risks

- Prevents legal exposure from biased AI decisions and regulatory non-compliance.

- Reduces financial liabilities linked to flawed AI-driven outcomes.

✅ Security & Adversarial Risk Prevention

- Detects data poisoning, model inversion attacks, and adversarial manipulation before deployment.

- Validates encryption, access control, and AI model integrity.

✅ Bias & Fairness Audits

- Checks that AI systems meet non-discrimination obligations under applicable law (e.g. GDPR, the EU AI Act) and ISO 42001 fairness objectives.

- Detects hidden biases in AI-driven hiring, credit scoring, and legal risk assessment models.

✅ Regulatory Compliance Alignment

- Demonstrates adherence to ISO 42001, GDPR, NIST AI RMF, and other AI governance frameworks.

- Establishes a defensible audit trail to mitigate penalties.

🚀 Best Practice: Regulatory bodies are increasing AI scrutiny—proactive audits minimise legal risks and enhance AI reliability.

AI Audit Checklist (ISO 42001 Compliance)

To maintain AI governance integrity, organisations must implement a structured audit framework.

Step 1: Define the AI Audit Scope (ISO 42001 Clause 4.3)

✅ Identify AI models, datasets, and decision pipelines under governance.

✅ Establish audit parameters (bias detection, security, compliance, explainability).

Step 2: Develop an AI Audit Plan (ISO 42001 Clause 9.2.2)

✅ Define audit frequency based on AI risk classification.

✅ Assign independent auditors with no direct control over AI model development.

Step 3: Conduct AI Risk & Governance Assessments (ISO 42001 Clause 9.2)

✅ Evaluate AI bias mitigation frameworks and fairness testing outcomes.

✅ Assess AI security defences against adversarial threats.

✅ Validate AI explainability mechanisms to ensure compliance with ISO 42001 transparency mandates.
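One way an auditor might test whether documented explanations hold up is permutation feature importance: shuffle one input feature and measure how much accuracy drops. This is a swapped-in, model-agnostic technique, not something ISO 42001 prescribes, and the toy model and data below are hypothetical.

```python
import random
from typing import Callable, Sequence

Row = Sequence[float]
Model = Callable[[Row], int]


def accuracy(model: Model, X: list[Row], y: list[int]) -> float:
    """Fraction of rows the model classifies correctly."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model: Model, X: list[Row], y: list[int],
                           feature: int, repeats: int = 20, seed: int = 0) -> float:
    """Mean drop in accuracy when one feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    total_drop = 0.0
    for _ in range(repeats):
        column = [row[feature] for row in X]
        rng.shuffle(column)
        # Rebuild each row with the shuffled value placed in the chosen feature.
        X_perm = [list(row[:feature]) + [value] + list(row[feature + 1:])
                  for row, value in zip(X, column)]
        total_drop += baseline - accuracy(model, X_perm, y)
    return total_drop / repeats


def toy_model(row: Row) -> int:
    """Hypothetical classifier that only looks at feature 0."""
    return int(row[0] > 0.5)


X = [[0.9, 0.1], [0.8, 0.7], [0.2, 0.3], [0.1, 0.9]]
y = [1, 1, 0, 0]
print(permutation_importance(toy_model, X, y, feature=0))  # expected positive: feature 0 drives decisions
print(permutation_importance(toy_model, X, y, feature=1))  # 0.0: feature 1 is never used
```

If a system's documentation claims a decision depends on a feature whose permutation importance is near zero (or the reverse), that mismatch is worth recording as a finding. Correlated features can distort this measure, so it complements rather than replaces other explainability checks.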

Step 4: Document & Report Audit Findings (ISO 42001 Clauses 9.2.2 and 10.2)

✅ Identify AI governance failures and compliance gaps.

✅ Recommend corrective actions to enhance AI security and transparency.

✅ Deliver audit reports to executive stakeholders for risk management decisions.

🚀 Best Practice: Continuous AI audit tracking ensures that risk mitigation strategies remain effective over time.

AI Audit Reporting & Risk Mitigation

AI audit reports must deliver precise risk assessments and actionable governance improvements.

Key Components of an Effective AI Audit Report

🔹 Identified AI Governance Weaknesses

✅ Security vulnerabilities, model fairness issues, and compliance gaps.

🔹 AI Risk Treatment Recommendations

✅ Bias mitigation strategies (rebalancing training data, retraining models with diverse datasets).

✅ Adversarial defence mechanisms (enhanced authentication, adversarial testing, differential privacy).

✅ Regulatory compliance improvements (aligning AI governance policies with ISO 42001 and the EU AI Act).

🚀 Best Practice: AI audit reports must be reviewed by legal teams, risk officers, and compliance executives to ensure governance frameworks remain effective.

Common AI Audit Failures & Corrective Actions

Internal audits often reveal systemic AI governance failures that, if unaddressed, expose organisations to regulatory action and legal risks.

| AI Audit Non-Conformity | Potential Risk | Recommended Fix |
| --- | --- | --- |
| Lack of AI Explainability | May violate GDPR & AI Act transparency requirements | Implement explainable AI (XAI) techniques |
| Failure to Detect Bias | Can trigger legal liability & discrimination lawsuits | Conduct routine bias audits |
| Weak AI Security Defences | Leaves AI models vulnerable to adversarial ML attacks | Strengthen security policies & monitoring |
| Regulatory Non-Compliance | Can result in hefty GDPR & AI Act fines | Enforce automated AI compliance tracking |

🚀 Best Practice: AI audits must be ongoing, not reactive—organisations should continuously monitor compliance risks rather than scrambling after a regulatory breach.

Checklist for Internal AI Audits (ISO 42001 Compliance)

✅ Develop an AI audit plan and schedule periodic AI risk assessments.

✅ Ensure AI models meet transparency, fairness, and security compliance requirements.

✅ Document AI governance non-conformities and implement corrective actions.

✅ Report AI audit findings to compliance teams and executives for governance improvements.

✅ Establish AI risk monitoring tools to detect governance failures in real time.
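Real-time monitoring typically reduces to comparing live metrics against agreed thresholds. A minimal sketch, assuming hypothetical metric names and limits that each organisation would set for itself (neither comes from ISO 42001):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit: float
    higher_is_worse: bool  # True for error rates, False for e.g. fairness ratios


# Illustrative thresholds; the names and values are assumptions.
THRESHOLDS = [
    Threshold("error_rate", 0.05, higher_is_worse=True),
    Threshold("disparate_impact_ratio", 0.8, higher_is_worse=False),
    Threshold("input_drift_score", 0.2, higher_is_worse=True),
]


def breached(t: Threshold, value: float) -> bool:
    """True if the value falls on the wrong side of the threshold."""
    return value > t.limit if t.higher_is_worse else value < t.limit


def check_metrics(metrics: dict[str, float]) -> list[str]:
    """Return alert messages for breached thresholds or missing metrics."""
    alerts = []
    for t in THRESHOLDS:
        value = metrics.get(t.metric)
        if value is None:
            alerts.append(f"{t.metric}: no data (monitoring gap)")
        elif breached(t, value):
            alerts.append(f"{t.metric}: {value} breaches limit {t.limit}")
    return alerts


print(check_metrics({"error_rate": 0.07, "disparate_impact_ratio": 0.85}))
# ['error_rate: 0.07 breaches limit 0.05', 'input_drift_score: no data (monitoring gap)']
```

Treating a missing metric as an alert is a deliberate choice: a monitor that silently stops reporting is itself a governance failure.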

You wouldn't let untested AI make decisions that could bankrupt you. So why are you afraid of an audit proving it works?

- Sam Peters, ISMS.Online CPO

Conducting Internal AI Audits

AI governance is only as strong as its weakest link. A well-executed internal audit ensures that AI systems remain compliant, unbiased, and resilient against adversarial threats. Without rigorous internal evaluations, organisations risk regulatory penalties, security breaches, and flawed decision-making models.

ISO 42001:2023 Clause 9.2 requires internal audits at planned intervals, helping confirm that AI governance frameworks are robust, transparent, and legally defensible before external scrutiny exposes vulnerabilities.

1. Defining the Scope of an Internal AI Audit (ISO 42001 Clause 9.2.2)

An effective AI audit begins with a clear definition of scope—what models, datasets, and decision-making processes fall under review? Without precise boundaries, governance blind spots emerge, leaving organisations exposed to compliance failures and operational risks.

Key Scope Considerations

✅ Identify which AI models, datasets, and decision pipelines will be audited.

✅ Establish governance scope based on regulatory requirements (GDPR, EU AI Act) and relevant frameworks (NIST AI RMF, ISO 27701).

✅ Define risk categories:

- AI Security – Assess resilience against adversarial attacks, data poisoning, and unauthorised access.

- Bias Mitigation – Evaluate whether AI models exhibit discriminatory or unfair decision-making patterns.

- Explainability & Accountability – Ensure model transparency and traceability in automated decisions.

🚀 Best Practice: Develop a comprehensive AI audit checklist incorporating ISO 42001 Annex A controls and distribute responsibilities across governance teams.
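Governance blind spots can be checked mechanically by comparing the audit scope against the organisation's AI inventory. A sketch, assuming a hypothetical inventory of model names and the three risk categories listed above:

```python
from dataclasses import dataclass, field

# The three risk categories from the scope considerations above.
RISK_CATEGORIES = {"security", "bias", "explainability"}


@dataclass
class AuditScope:
    # Model name -> risk categories the audit will cover for that model.
    models: dict[str, set[str]] = field(default_factory=dict)


def scope_gaps(inventory: set[str], scope: AuditScope) -> dict[str, set[str]]:
    """Per model, the risk categories the scope leaves uncovered.

    Inventory models missing from the scope entirely are reported with every category.
    """
    gaps: dict[str, set[str]] = {}
    for model in sorted(inventory):
        missing = RISK_CATEGORIES - scope.models.get(model, set())
        if missing:
            gaps[model] = missing
    return gaps


# Hypothetical inventory and scope.
inventory = {"credit-scoring", "cv-screening", "fraud-detection"}
scope = AuditScope(models={
    "credit-scoring": {"security", "bias", "explainability"},
    "cv-screening": {"bias"},
})
for model, missing in scope_gaps(inventory, scope).items():
    print(model, "missing:", sorted(missing))
```

This prints a gap for cv-screening (explainability and security not covered) and for fraud-detection, which is in the inventory but absent from the scope altogether.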

2. Creating an Internal AI Audit Programme (ISO 42001 Clause 9.2.2)

A structured audit programme ensures AI compliance remains a continuous process, not a reactive measure following a regulatory fine or public scandal.

Building an AI Audit Framework

✅ Define audit frequency – Annually, semi-annually, or continuously, supported by real-time monitoring.

✅ Establish roles and responsibilities for AI governance auditors—ensuring no conflicts of interest with AI developers or data scientists.

✅ Set audit objectives, focusing on:

- Evaluating bias detection and mitigation measures.

- Ensuring adversarial robustness and cybersecurity protections.

- Verifying decision traceability and AI accountability mechanisms.

🚀 Best Practice: Deploy automated AI compliance monitoring tools to detect governance failures before they escalate.

3. Gathering AI Compliance Evidence (ISO 42001 Clauses 7.5 and 9.2.2)

Audit findings are only as strong as the supporting evidence. AI governance teams must systematically document risk assessments, security policies, and fairness audits to substantiate compliance claims.

Key AI Governance Documents for Audits

📌 AI Governance Scope Statement – Defines AI systems, decision workflows, and risk categorisations under review.

📌 Statement of Applicability (SoA) – Lists the ISO 42001 controls deemed necessary, including security, fairness, and explainability, with a justification for each inclusion or exclusion.

📌 Bias & Risk Assessments – Demonstrates how AI models meet fairness, transparency, and non-discrimination requirements.

📌 AI Security Policies – Outlines protections against adversarial exploits, model inversion, and data manipulation.

📌 Incident Response Plans – Defines procedures for deactivating failing AI systems and remediating the resulting risks.

🚀 Best Practice: Use a structured AI audit template with four core columns:

| Clause | ISO 42001 Requirement | Compliant? (Yes/No) | Supporting Evidence |
| --- | --- | --- | --- |
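The four-part audit template maps naturally onto a small record type. This sketch, with hypothetical sample rows, renders the template as a table and flags rows marked compliant without supporting evidence, since those are claims an auditor cannot verify. Clause 6.1.3 (which calls for the Statement of Applicability) and Clause 9.2.2 (internal audit programme) appear only as examples.

```python
from dataclasses import dataclass


@dataclass
class AuditRow:
    clause: str
    requirement: str
    compliant: bool
    evidence: str = ""  # reference to supporting documentation; empty if none


def render_table(rows: list[AuditRow]) -> str:
    """Render rows in the four-column template as a Markdown table."""
    lines = [
        "| Clause | ISO 42001 Requirement | Compliant? (Yes/No) | Supporting Evidence |",
        "|---|---|---|---|",
    ]
    for r in rows:
        lines.append(f"| {r.clause} | {r.requirement} | {'Yes' if r.compliant else 'No'} | {r.evidence or '-'} |")
    return "\n".join(lines)


def unsupported_claims(rows: list[AuditRow]) -> list[str]:
    """Clauses marked compliant but lacking any supporting evidence."""
    return [r.clause for r in rows if r.compliant and not r.evidence.strip()]


# Hypothetical audit rows.
rows = [
    AuditRow("6.1.3", "Statement of Applicability produced", True, "SoA v2.1"),
    AuditRow("9.2.2", "Internal audit programme planned and maintained", True),
]
print(render_table(rows))
print("Unsupported:", unsupported_claims(rows))  # Unsupported: ['9.2.2']
```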

4. Executing the Internal AI Audit (ISO 42001 Clause 9.2)

A well-orchestrated audit assesses AI security, fairness, and compliance through technical evaluations, forensic testing, and governance interviews.

Essential Audit Tasks

✅ Conduct bias testing on AI models—identifying unintended discriminatory behaviour in decision outputs.

✅ Perform adversarial ML security tests, including simulated data poisoning, model evasion, and API abuse scenarios.

✅ Evaluate AI explainability mechanisms, ensuring decision logic remains interpretable to auditors and stakeholders.

✅ Review data governance compliance to validate that AI data processing aligns with GDPR, AI Act, and ISO 27701 requirements.
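For the bias-testing task, one common screening metric is the disparate impact ratio: the lowest group selection rate divided by the highest, often compared against the 0.8 "four-fifths rule" from US employment selection guidance. It is one metric among many and is swapped in here for illustration; the groups and decisions below are hypothetical.

```python
from collections import defaultdict


def selection_rates(groups: list[str], decisions: list[int]) -> dict[str, float]:
    """Share of positive decisions (1) per group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for g, d in zip(groups, decisions):
        totals[g] += 1
        positives[g] += d
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(groups: list[str], decisions: list[int]) -> float:
    """Lowest group selection rate divided by the highest (1.0 if nobody is selected)."""
    rates = selection_rates(groups, decisions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical outputs of a CV-screening model (1 = shortlisted).
groups = ["A"] * 10 + ["B"] * 10
decisions = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0] + [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
ratio = disparate_impact_ratio(groups, decisions)
print(f"ratio = {ratio:.2f}, flag for review: {ratio < 0.8}")  # ratio = 0.50, flag for review: True
```

A ratio below 0.8 signals the need for deeper investigation, not proof of unlawful discrimination; auditors would normally combine it with other fairness metrics and a review of the decision context.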

🚀 Best Practice: Ensure audit independence—AI auditors must not be directly involved in AI development, deployment, or data curation.

5. Documenting AI Audit Findings (ISO 42001 Clause 9.2.2)

The value of an AI audit hinges on how well its findings translate into actionable governance improvements.

Critical Audit Report Components

✅ Summarise audit scope, objectives, and AI models reviewed.

✅ Identify non-conformities, including bias risks, security gaps, and compliance failures.

✅ Recommend corrective actions to close AI governance loopholes.

✅ Develop an AI risk remediation plan, including timelines and responsible governance teams.

🚀 Best Practice: Present audit findings to executives, legal teams, and compliance officers, ensuring accountability in governance improvements.
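A remediation plan with timelines and responsible teams is, in effect, a list of dated actions, which makes overdue items easy to surface for executive review. A minimal sketch with hypothetical field names and entries:

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class RemediationAction:
    finding: str
    owner: str          # responsible governance team
    due: date
    done: bool = False


def overdue(actions: list[RemediationAction], today: date) -> list[RemediationAction]:
    """Open actions past their due date, oldest first."""
    late = [a for a in actions if not a.done and a.due < today]
    return sorted(late, key=lambda a: a.due)


# Hypothetical remediation plan.
plan = [
    RemediationAction("No bias testing for cv-screening", "Data Science", date(2024, 3, 1)),
    RemediationAction("API lacks rate limiting", "Security", date(2024, 2, 1), done=True),
    RemediationAction("Model documentation incomplete", "AI Governance", date(2024, 6, 30)),
]
for a in overdue(plan, today=date(2024, 4, 1)):
    print(f"OVERDUE: {a.finding} (owner: {a.owner}, due {a.due})")
```

On 2024-04-01 this prints only the bias-testing action: the security action is complete and the documentation action is not yet due.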

6. AI Management Review & Compliance Oversight (ISO 42001 Clause 9.3)

Post-audit governance reviews ensure that AI compliance strategies evolve with emerging threats, regulatory changes, and technological advancements.

AI Governance Review Focus Areas

✅ Assess AI risk levels and compliance gaps based on audit findings.

✅ Allocate resources for AI governance enhancements and security risk mitigation.

✅ Update AI compliance documentation and governance policies.

✅ Develop an AI risk mitigation roadmap with structured implementation timelines.

🚀 Best Practice: Schedule quarterly AI governance reviews to proactively monitor compliance risks and AI security trends.

7. Handling AI Non-Conformities & Corrective Actions (ISO 42001 Clause 10.2)

AI audits often expose governance failures that, if ignored, result in regulatory non-compliance, legal liability, and reputational damage.

Managing AI Non-Conformities

✅ Classify AI governance failures by severity:

- Minor Issues – Require adjustments to AI governance frameworks.

- Major Issues – Pose significant compliance risks requiring immediate intervention.