Understanding the Significance of ISO 42001

ISO/IEC 42001:2023 is a pivotal standard for organisations utilising artificial intelligence (AI). It specifies requirements for an AI management system (AIMS), helping to ensure that AI is developed, deployed, and managed ethically, securely, and transparently, in alignment with regulations such as the General Data Protection Regulation (GDPR), the EU AI Act, and the Executive Order on AI issued by President Biden (Requirement 1, 4.1, Annex D). This alignment helps organisations prepare to meet international compliance requirements and ethical expectations.

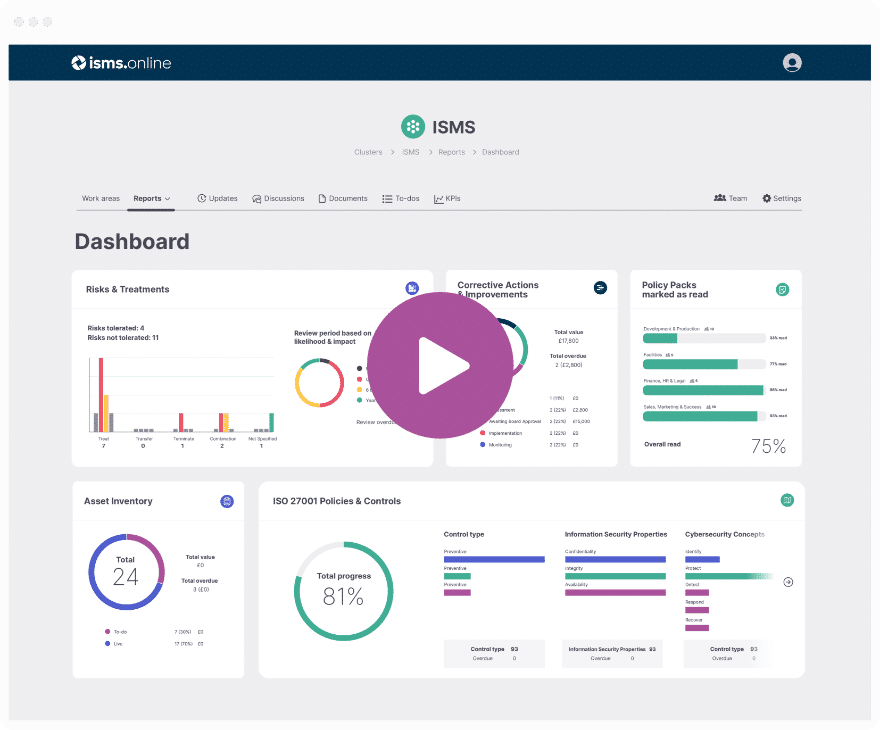

The Role of ISMS.online in Achieving ISO 42001 Compliance

ISMS.online offers a robust platform that simplifies the journey to ISO 42001 compliance. It provides a structured environment to document the AI management system, ensuring all aspects of ISO 42001 are addressed, from risk management to stakeholder engagement (Requirement 4.4). The platform’s features align with Annex A of ISO 42001, offering reference control objectives and controls that organisations can adapt to their specific needs, ensuring a tailored approach to compliance.

Annex A Controls and Their Application

Annex A of ISO 42001 sets out reference control objectives and controls for AI systems, with implementation guidance provided in Annex B. ISMS.online facilitates the application of these controls, helping organisations maintain data quality and conduct thorough impact assessments (A.7, A.5). By leveraging the platform's capabilities, organisations can systematically manage AI risks, document incidents, and maintain a high level of governance, which is essential for building trust and transparency in AI systems (Annex B).

Core Requirements of ISO 42001 for AI Management Systems

ISO 42001 establishes a comprehensive framework for AI management systems, ensuring responsible development, deployment, and use of AI technologies. The standard advocates for a lifecycle approach, addressing AI from conceptualisation to operation, and underscores the importance of ethical considerations such as transparency (Requirement 3.11) and accountability (Requirement 3.22) throughout the AI system’s lifecycle.

Ensuring Responsible AI Use

Organisations are required by ISO 42001 to conduct thorough risk (Requirement 3.7) and impact assessments (A.5.2), as detailed in Annex A.5 and Annex B.5. These assessments are crucial in evaluating the potential consequences of AI systems on individuals and society, ensuring that AI technologies are used ethically and securely, with a commitment to fairness (C.2.5), privacy (C.2.7), and transparency (C.2.11).

Systematic Approach to AI Governance

ISO 42001 proposes a systematic approach to AI governance, which includes establishing clear governance structures and defining roles and responsibilities for AI system management (Requirement 5.3). This approach is supported by detailed guidance in Annex A, which provides reference control objectives and controls for AI systems, ensuring that roles and responsibilities are clearly defined and allocated (A.3.2) and that a process is in place to report concerns about the organisation’s role with respect to an AI system throughout its life cycle (A.3.3).

Fostering Innovation and Competitive Advantage

Adherence to ISO 42001 enables organisations to foster innovation and secure a competitive advantage. The standard promotes a balance between innovation and risk management, allowing organisations to leverage AI technologies while maintaining ethical and secure practices. By addressing risks and opportunities (Requirement 6.1) and considering environmental impacts (C.2.4), robustness (C.2.8), and security (C.2.10), compliance with ISO 42001 can also enhance stakeholder trust and market differentiation. Integration with other management system standards (D.2) further strengthens this competitive advantage.

Harmonising ISO 42001 with Other ISO Standards

ISO 42001 is designed to integrate with existing management system standards, providing a unified framework for organisations. Integration is most straightforward with ISO 27001, ISO 27701, and ISO 9001, which cover information security, privacy information, and quality management respectively.

Benefits of Integrated Management Systems

When ISO 42001 is integrated with these standards, organisations benefit from a fortified approach to managing AI systems, which includes:

- Bolstering overall governance and risk management practices, as outlined in D.2, ensuring accountability (C.2.1) and security (C.2.10).

- Advancing data protection and privacy measures, in line with A.7.4 and B.7.4, upholding privacy as a core value (C.2.7).

- Enhancing quality management processes throughout AI system lifecycles, as per A.6 and B.6, and maintaining system integrity (C.2.6).

Leveraging ISMS.online for Strategic Audit Consolidation

ISMS.online aligns with ISO 42001’s requirements, offering a strategic platform for audit consolidation, featuring:

- A centralised system for managing compliance documentation, crucial as per Requirement 7.5.

- Tools for risk assessment and treatment that align with A.5.3 and B.5.3, ensuring comprehensive risk management.

- Integrated audit and review capabilities, essential for maintaining compliance with multiple standards, as mandated by Requirement 9.2 and supported by B.9.2.

Navigating Challenges in Standard Integration

Organisations integrating multiple standards may face challenges such as:

- Ensuring consistency in documentation and processes across different standards, a necessity as per Requirement 7.5.3.

- Managing the complexity of integrating various management systems, a consideration highlighted in D.1.

Organisations can use ISMS.online’s structured approach and customisable features to ensure a streamlined and consistent application of all integrated standards, effectively addressing these challenges.

Risk Management Strategies Under ISO 42001

ISO 42001 outlines a structured approach for organisations to manage AI-related risks, emphasising the establishment of a robust risk management framework. This framework is designed to ensure AI systems are developed and used in compliance with legal and ethical standards, including global regulations like the General Data Protection Regulation (GDPR) and the European Union’s AI Act.

Aligning with Global Regulations

Organisations achieve alignment with global regulations by:

- Risk Identification: Identifying potential AI risks that could impact privacy, security, and ethical standards is crucial. This involves recognising risks that may affect the organisation’s compliance with global regulatory requirements, such as GDPR and the European Union’s AI Act (Requirement 5.2, Requirement 5.3). Organisations should document data acquisition and quality as per Annex A Controls A.7.3 and A.7.4, guided by Annex B Implementation Guidance B.7.3 and B.7.4. Privacy and security are organisational objectives that must be considered (Annex C.2.7, C.2.10), and the AI management system should be adaptable across various domains or sectors as outlined in Annex D.1.

- Risk Evaluation: Organisations must assess the severity and likelihood of identified risks in the context of global regulatory requirements (Requirement 5.3). This includes evaluating AI risks through impact assessment processes (Annex A Control A.5.2) and following the guidance provided in Annex B.5.2. It’s essential to consider risk sources related to machine learning (Annex C.3.4) to ensure comprehensive risk evaluation.

- Risk Mitigation: To mitigate identified risks, organisations implement controls detailed in Annex A, particularly focusing on system verification and validation (Annex A Control A.6.2.4) as advised by Annex B Implementation Guidance B.6.2.4. Safety is a key organisational objective that must be addressed during risk mitigation (Annex C.2.9).
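The evaluation step above, which weighs the severity and likelihood of each identified risk, can be illustrated with a minimal risk register sketch. The 1 to 5 scales, the score thresholds, the `AIRisk` class, and the sample risks are all assumptions made for illustration; ISO 42001 does not prescribe a scoring formula, and each organisation defines its own criteria.

```python
from dataclasses import dataclass


# Hypothetical 1-5 scales; ISO 42001 does not prescribe these values.
@dataclass(frozen=True)
class AIRisk:
    name: str
    severity: int    # 1 (negligible) to 5 (critical)
    likelihood: int  # 1 (rare) to 5 (almost certain)

    def __post_init__(self):
        # Reject values outside the assumed scale.
        for field_name in ("severity", "likelihood"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Simple severity x likelihood product, a common but not mandated choice.
        return self.severity * self.likelihood


def rating(risk: AIRisk) -> str:
    """Map a score to an illustrative band (thresholds are assumptions)."""
    if risk.score >= 15:
        return "high"
    if risk.score >= 8:
        return "medium"
    return "low"


def prioritise(risks):
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Invented sample entries for demonstration only.
register = [
    AIRisk("Training data contains personal data without a lawful basis", 5, 3),
    AIRisk("Model drift degrades accuracy after deployment", 3, 4),
    AIRisk("Incomplete provenance records for acquired data", 2, 2),
]

for risk in prioritise(register):
    print(f"{risk.score:>2}  {rating(risk):<6}  {risk.name}")
```

Ranking by score gives a simple way to decide which risks to treat first; an organisation may equally prefer a qualitative matrix or a different scale.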

Role of Risk/Impact Assessments in AI Lifecycle

Risk and impact assessments are integral throughout the AI lifecycle, enabling organisations to:

- Proactive Risk Management: Anticipate and address risks before they materialise by conducting assessments at each stage of the AI lifecycle (Requirement 5.6). This proactive approach is supported by the AI system impact assessment process (Annex A Control A.5.2) and the corresponding implementation guidance (Annex B.5.2). System life cycle issues must be considered as potential risk sources (Annex C.3.6).

- Continuous Monitoring: Organisations must regularly review and update risk assessments to adapt to changes in the AI environment or regulatory landscape (Requirement 9.1). This involves monitoring AI system operation (Annex A Control A.6.2.6) and adhering to the operational guidance provided in Annex B.6.2.6. Technology readiness is a risk source that requires continuous monitoring (Annex C.3.7).

Effective Implementation of Risk Management Strategies

For effective implementation of risk management strategies, organisations should:

- Leverage Annex A Controls: Apply appropriate controls for AI risk treatment as guided by Annex A, focusing on system verification and validation (Annex A Control A.6.2.4) and system deployment (Annex A Control A.6.2.5), with implementation guidance from Annex B.6.2.4 and B.6.2.5 (Requirement 5.5).

- Customise to Context: Tailor risk management processes to the organisation’s specific context, considering the nature of the AI systems and the regulatory environment. This involves understanding the organisation and its context (Requirement 4.1), as well as the needs and expectations of interested parties (Requirement 4.2). Resource documentation is crucial in this process (Annex A Control A.4.2) and should be guided by Annex B.4.2. The AI management system should be integrated with other management system standards as appropriate (Annex D.2).

- Engage Stakeholders: Ensure comprehensive understanding and commitment by involving all relevant stakeholders in the risk management process. This includes defining roles, responsibilities, and authorities (Requirement 5.3), reporting concerns (Annex A Control A.3.3), and following the reporting guidance (Annex B.3.3). Robustness is an organisational objective that benefits from stakeholder engagement (Annex C.2.8).

Key Stakeholder Roles in ISO 42001 Compliance

Defining Stakeholder Responsibilities

Developers, integral to ISO 42001 compliance, must integrate ethical considerations into AI design, aligning with Requirement 5.2 and Annex A Control A.5.3. They adhere to risk management processes, ensuring objectives guide responsible development as per Annex B.5.5. Users, on the other hand, are tasked with operating AI systems within set parameters, maintaining compliance with Requirement 8.1 and ensuring the system’s intended use aligns with Annex A Control A.9.4. Regulators provide essential oversight, ensuring legal and ethical standards are met, a responsibility outlined in Requirement 4.2.

Enhancing Ethical AI Practices through Engagement

Stakeholder engagement is crucial for ethical AI, requiring clear communication, inclusive participation, and regular consultation. These practices are supported by Annex B.8.2 and Annex C.2.11, ensuring AI systems are understandable and stakeholders are well-informed. Regular stakeholder input, as per Annex B.5.2, is essential throughout the AI system lifecycle.

Tools for Effective Stakeholder Engagement

To foster a culture of responsibility and accountability, organisations can utilise collaborative platforms like ISMS.online, which align with Annex A Control A.4.2 for centralised communication and documentation. Risk assessment tools, in line with Annex A and focusing on data quality as per Annex B.7.4, help teams manage AI-related risks collaboratively. Training programmes are vital, emphasising the importance of compliance and aligning with Annex B.7.2 to educate stakeholders on their roles.

Annex D – Use of the AI management system across domains or sectors

Applying ISO 42001’s AI management system across various domains ensures compliance and ethical AI practices. This application is supported by Annex D.2, which encourages organisations to harmonise their AI management practices with other relevant standards for a comprehensive approach to AI governance.

Steps to ISO 42001 Compliance

Achieving compliance with ISO 42001 involves a series of strategic steps, beginning with a comprehensive understanding of the organisation’s context and the scope of its AI management system, as outlined in Requirement 4.1 and Requirement 4.3. This is followed by the establishment of an AI policy, as per Requirement 5.2, the assignment of roles and responsibilities in line with Requirement 5.3, and the implementation of a risk management process, which includes proactive risk assessment and treatment detailed in A.5.5.

Preparing for Regulatory Benchmarks

Organisations should anticipate potential future regulations by aligning AI management practices with the standard’s requirements, including understanding the organisation and its context (Requirement 4.1) and determining the scope of the AI management system (Requirement 4.3). Engaging in proactive risk assessment and treatment is crucial, as detailed in A.5.5, as is ensuring that AI system impact assessments are conducted in accordance with A.5.

Continuous Improvement Processes

ISO 42001 advocates for continuous improvement, recommending regular monitoring and evaluation of the AI management system’s performance (Requirement 9.1). Taking corrective actions in response to nonconformities, as guided by Requirement 10.2, and updating the AI management system based on feedback and performance data (Requirement 10.1) are essential for maintaining compliance.

Support from ISMS.online

ISMS.online aids in maintaining ongoing compliance by providing a centralised platform for managing documentation and evidence of compliance (Requirement 7.5). Tools for risk management and impact assessment align with Annex A controls, including A.6.2.4 for AI system verification and validation and A.7.4 for the quality of data for AI systems. Features that support the continuous improvement cycle and preparation for external audits are also provided, as per 9.2 and 10.2.

Advantages of ISO 42001 Compliance for Organisations

Risk Mitigation and Competitive Advantage

Organisations that comply with ISO 42001 proactively address AI-related risks, crucial for safeguarding against liabilities and ensuring operational integrity. The standard’s risk management framework, particularly the systematic approach provided by Annex A controls, is instrumental in enhancing competitive advantage. By implementing Requirement 5.2, organisations emphasise the importance of mitigating AI-related risks. The A.5.3 control ensures responsible development of AI systems, while B.4.2 guides the systematic documentation of resources, further solidifying risk management practices.

Enhancing Stakeholder Trust

Adherence to ISO 42001 fosters trust, a fundamental aspect of customer and stakeholder relationships. By establishing an AI policy as per Requirement 5.2, organisations commit to responsible AI practices, thereby boosting stakeholder confidence. The standard’s focus on transparency and accountability, as advised in A.8.2 and supported by B.8.2, ensures that AI system documentation and controls are transparent, bolstering stakeholder trust.

Alignment with Organisational Goals

The adaptable framework of ISO 42001 allows for the alignment of AI management practices with an organisation’s broader business objectives and strategies. By understanding the organisation and its context (Requirement 4.1), organisations ensure that AI systems contribute to achieving their goals while adhering to ethical and regulatory requirements. The potential AI-related organisational objectives outlined in C.2 support this alignment, and the guidance on integrating the AI management system with other management system standards (D.2) further enhances the adaptability of the framework.

Ensuring Ethical AI Through ISO 42001

ISO 42001 establishes a framework that prioritises trust, transparency, and accountability throughout the AI lifecycle, advocating for systems that are technically sound and socially responsible (Requirement 4.1). It is essential for organisations to develop an AI policy that reflects their commitment to ethical AI use, which should be compatible with the strategic direction and the context of the organisation (Requirement 5.2).

Mitigating Bias and Upholding Ethics

To mitigate bias and uphold ethics in AI, ISO 42001 recommends implementing diverse and inclusive training datasets to minimise algorithmic bias (A.7.3), and conducting regular reviews of AI systems to assess and address emergent biases or ethical issues (A.7.4). These reviews should be informed by the organisation’s AI policy and objectives, ensuring that fairness is maintained throughout the AI system’s lifecycle (C.2.5, C.3.4).

Documentation and Incident Management

Robust documentation and incident management processes are crucial for maintaining detailed records of AI system operations and incidents, ensuring traceability and accountability (Requirement 7.5). Organisations should implement clear procedures for managing customer expectations and reporting incidents, which align with the requirements for AI system documentation (A.8.3, A.8.4). This includes external reporting mechanisms and communication plans for incidents, which are essential for maintaining transparency with stakeholders (B.8.3, B.8.4).

The Role of Human Oversight

Human oversight plays a vital role in ISO 42001’s approach to ethical AI practices. The standard emphasises the need for human-in-the-loop systems to ensure AI decisions are reviewable and reversible (Requirement 5.3). It is important to assign responsibility to individuals or teams for monitoring AI systems and intervening when necessary, which is outlined in the governance controls of Annex A (A.6.2.6). This ensures that accountability is maintained and that AI systems operate within the established ethical framework (C.2.1, C.3.3).

Navigating the ISO 42001 Certification Process

Understanding Certification Validity and Supervision

Upon successful certification, the ISO 42001 certificate typically holds a validity of three years. During this period, organisations are subject to annual supervision to ensure continued adherence to the standard’s practices. This ongoing supervision, as mandated by Requirement 9.2, is crucial for maintaining the integrity and effectiveness of the AI management system, and supports the goal of continual improvement set out in Requirement 10.1.
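As a rough illustration of the cycle described above, the sketch below derives the key dates from a certificate issue date. It assumes supervision visits fall on each anniversary of issue and that the certificate expires exactly three years after issue; actual dates are set by the certification body and may differ. The function names and the sample issue date are hypothetical.

```python
from datetime import date


def add_years(d: date, years: int) -> date:
    """Shift a date by whole years, mapping 29 February to 28 February if needed."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        # 29 February has no counterpart in a non-leap target year.
        return d.replace(year=d.year + years, day=28)


def certification_schedule(issued: date, validity_years: int = 3) -> dict:
    """Return assumed supervision dates and the expiry date for a certificate.

    Assumption: one supervision visit per anniversary before expiry.
    """
    supervision = [add_years(issued, n) for n in range(1, validity_years)]
    return {"supervision": supervision, "expiry": add_years(issued, validity_years)}


# Invented issue date for demonstration.
schedule = certification_schedule(date(2024, 6, 1))
for visit in schedule["supervision"]:
    print("Supervision due:", visit.isoformat())
print("Certificate expires:", schedule["expiry"].isoformat())
```

Keeping these dates in a shared calendar or compliance tool makes it easier to plan internal audits ahead of each external visit.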

Anticipating Certification Bodies in 2024

With certification bodies expected to be operational by 2024, organisations should stay informed about the specific expectations and requirements these bodies will set forth. It is anticipated that these entities will play a pivotal role in standardising the certification process and ensuring uniformity in compliance assessments, as highlighted in Annex D, which discusses the application of the AI management system across various domains and sectors.

Preparing for External Audit and Certification

To prepare for external audit and certification, organisations should:

- Conduct internal audits and gap assessments against ISO 42001 requirements, particularly focusing on the controls detailed in Annex A.

- Implement necessary corrective actions to address any identified deficiencies, in line with Requirement 10.2.

- Ensure all relevant documentation is up-to-date and readily available for review by the auditors, adhering to Requirement 7.5.

- Engage in continuous improvement practices to align with the standard’s dynamic nature and evolving guidelines, as supported by the implementation guidance provided in Annex B.
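The first step in the list above, a gap assessment against the Annex A controls, can be sketched as a simple check of which controls have no recorded evidence. The control identifiers are those cited in this article; the register structure, the evidence file names, and the helper functions are hypothetical and do not represent any particular tool.

```python
# Hypothetical control register: control identifier -> evidence recorded so far.
# Evidence file names are invented for illustration.
control_evidence = {
    "A.2.2": ["ai-policy-v1.pdf"],
    "A.3.2": ["roles-and-responsibilities.md"],
    "A.5.2": [],
    "A.6.2.4": ["validation-report.pdf"],
    "A.7.4": [],
}


def find_gaps(evidence: dict) -> list:
    """Return the identifiers of controls with no evidence attached."""
    return sorted(cid for cid, items in evidence.items() if not items)


def coverage(evidence: dict) -> float:
    """Return the fraction of controls that have at least one evidence item."""
    if not evidence:
        return 0.0
    covered = sum(1 for items in evidence.values() if items)
    return covered / len(evidence)


gaps = find_gaps(control_evidence)
print(f"Coverage: {coverage(control_evidence):.0%}")
print("Controls without evidence:", ", ".join(gaps))
```

A count of evidence items says nothing about their quality, so a result like this is only a starting point for the corrective actions and document reviews listed above.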

Aligning ISO 42001 with Global Standards

ISO 42001 is designed to harmonise with the ISO 9000 family of standards, providing a unified approach to quality management and AI system governance. This alignment ensures that organisations can integrate AI management with established quality processes, enhancing overall operational excellence (Requirement 4.4). By establishing an AI policy (Requirement 5.2) that is compatible with the strategic direction of the organisation, and by planning (Requirement 6) to address the risks and opportunities associated with AI systems, organisations can ensure continual improvement (Requirement 10) in line with global standards.

Tools for Compliance Tracking

For compliance tracking, organisations have access to tools like ISMS.online, which offer centralised management of compliance activities, streamlined tracking of adherence to ISO 42001 and other standards, and simplified audit preparation through organised documentation and evidence management. These tools are instrumental in monitoring, measurement, analysis, and evaluation (Requirement 9.1), as well as in conducting internal audits (Requirement 9.2). They also support data preparation (A.7.6) and the acquisition of data (B.7.3), ensuring that AI systems are developed with quality and compliance in mind.

Supporting Sustainable Development Goals

ISO 42001 compliance aids organisations in contributing to the Sustainable Development Goals (SDGs) by promoting ethical AI practices that align with global sustainability efforts and encouraging responsible innovation that considers social and environmental impacts. Actions to address risks and opportunities (Requirement 6.1) are central to this effort, as are considerations of environmental impact (C.2.4) and fairness (C.2.5), ensuring that AI technologies contribute positively to society and the planet.

Addressing Challenges in Standard Alignment

Organisations may encounter challenges in aligning with global standards due to variations in regional regulations and compliance requirements, as well as the complexity of integrating AI management systems with other business processes. To address these challenges, organisations can leverage the flexibility of ISO 42001, which allows for customisation of the AI management system to fit specific needs and contexts, as suggested by Annex A’s control objectives and controls.

Understanding the organisation and its context (Requirement 4.1), understanding the needs and expectations of interested parties (Requirement 4.2), and determining the scope of the AI management system (Requirement 4.3) are all critical steps in this process. Allocating responsibilities (B.10.2) effectively across the organisation ensures that the AI management system is well-integrated and aligned with the organisation’s overall goals and strategies, as well as with the diverse domains and sectors outlined in Annex D (D.1).

Sector-Specific Applications of ISO 42001

Adapting ISO 42001 to Different Industries

Organisations in healthcare, defence, transport, and finance are tailoring the ISO 42001 framework to their operational needs by:

- Identifying AI risks and opportunities specific to their sectors, guided by Annex A controls such as A.7.2 for data development, A.7.3 for data acquisition, and A.7.4 for data quality.

- Developing AI policies that address unique challenges and comply with regulatory requirements, in line with A.2.2, and ensuring these policies are reviewed as per A.2.4.

- Conducting AI system impact assessments that reflect sector-specific nuances, following the process outlined in B.5.2 and documenting the assessments according to B.5.3.

Leveraging ISMS.online for Compliance in Diverse Sectors

ISMS.online facilitates sector-specific ISO 42001 compliance by:

- Offering a control library that aligns with Annex A, adaptable to the diverse needs of different sectors.

- Providing customisable templates and workflows for documenting AI management processes, as recommended in Annex A.7.

- Integrating tools for risk assessment and treatment to ensure AI applications meet ISO 42001 standards, leveraging guidance from Annex B, particularly B.7.2 to B.7.6.

The adaptability of ISO 42001 across various sectors is further supported by Annex D, which guides the integration of the AI management system with other management systems relevant to specific industries, such as ISO 27001 for information security, ISO 27701 for privacy, or ISO 9001 for quality management. This integration ensures a comprehensive approach to AI governance and management within each sector’s unique context.

Achieving ISO 42001 Compliance with ISMS.online

ISMS.online equips organisations with the tools and resources necessary for navigating the complexities of ISO 42001 compliance. The platform’s structured environment is conducive to managing the intricate requirements of AI management systems.

Implementing Controls and Guidance with ISMS.online

Your organisation can utilise ISMS.online to:

- Implement the reference control objectives and controls provided in ISO 42001 Annex A, specifically A.2.2 for documenting an AI policy.

- Access implementation guidance to effectively apply these controls within your specific context, as detailed in B.2.2, ensuring your AI policy is informed by business strategy and risk environment.

Leveraging ISMS.online for Continuous Improvement

ISMS.online facilitates continuous improvement by offering:

- A framework for addressing nonconformities and taking corrective actions, as per Requirement 10.2, ensuring that nonconformities are effectively managed and corrected.

- Tools for setting and tracking improvement objectives, aligning with C.2.10 and C.2.11 to manage security and transparency objectives effectively.

Choosing ISMS.online for ISO 42001 Compliance

Selecting ISMS.online for your ISO 42001 compliance journey ensures:

- A centralised platform for managing all aspects of AI system governance and documentation, supporting the requirements for establishing, implementing, maintaining, and continually improving an AI management system.

- Support for the entire lifecycle of AI systems, from concept to deployment and operation.

- A systematic approach to risk management, aligning with global standards and regulations, and facilitating the integration of the AI management system with other management systems as outlined in D.2.