ISO/IEC 42001 Certification Explained

ISO/IEC 42001:2023 establishes a global benchmark for Artificial Intelligence Management Systems (AIMS), offering a structured framework to guide organisations in the ethical development and use of AI. This standard encapsulates core principles such as ethical AI (Requirement 3.5), transparency (Requirement 3.10), accountability (Requirement 3.22), privacy and security (Requirement 3.23), and bias mitigation, ensuring AI technologies are developed and managed responsibly (Requirement 3.7, Requirement 3.11, Requirement 3.13).

To begin the ISO 42001 certification journey, organisations must first grasp the standard’s requirements, including Requirements 4.1 to 4.4, and establish a project plan. A gap analysis is pivotal: it assesses current practices against the standard’s requirements, especially those in Annex A, which details control objectives and controls for AI management systems.
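A gap analysis of this kind can be sketched as a small script. The following is a minimal illustration, not a prescribed method: the `Status` scale, the `ControlAssessment` record, the evidence file name, and the sample entries are all hypothetical, and the control references are simply those cited in this article.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    # Hypothetical three-level maturity scale (not defined by the standard)
    NOT_STARTED = 0
    PARTIAL = 1
    IMPLEMENTED = 2


@dataclass(frozen=True)
class ControlAssessment:
    control_id: str   # Annex A reference as cited in this article, e.g. "A.2.2"
    topic: str
    status: Status
    evidence: str = ""  # pointer to supporting documentation, if any


def find_gaps(assessments):
    """Return controls that are not fully implemented, least mature first."""
    gaps = [a for a in assessments if a.status is not Status.IMPLEMENTED]
    return sorted(gaps, key=lambda a: (a.status.value, a.control_id))


def coverage(assessments):
    """Fraction of assessed controls marked as implemented (0.0 if none assessed)."""
    if not assessments:
        return 0.0
    done = sum(1 for a in assessments if a.status is Status.IMPLEMENTED)
    return done / len(assessments)


# Illustrative current-state data only
current_state = [
    ControlAssessment("A.2.2", "AI policy", Status.IMPLEMENTED, "policy-v3.pdf"),
    ControlAssessment("A.3.2", "AI roles and responsibilities", Status.PARTIAL),
    ControlAssessment("A.5", "Assessing impacts of AI systems", Status.NOT_STARTED),
    ControlAssessment("A.7", "Data for AI systems", Status.PARTIAL),
]

for gap in find_gaps(current_state):
    print(f"{gap.control_id:6} {gap.topic:35} {gap.status.name}")
print(f"Coverage: {coverage(current_state):.0%}")
```

Sorting the gaps by maturity puts controls that have not been started at the top of the project plan. A real analysis would cover the full set of Annex A controls and the clause requirements.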

Benefits of ISO 42001 Certification

Achieving ISO 42001 certification signifies an organisation’s commitment to ethical AI principles, demonstrating to stakeholders that its AI systems are managed in line with internationally recognised best practices (Requirement 1, Requirement 3.15). This certification is crucial for organisations leveraging AI technologies, as it helps to build trust and confidence among users, customers, and regulators (Requirement 3.18, Requirement 3.19, Requirement 3.20, Requirement 3.21).

Building Trust and Confidence

The certification process is integral to building trust and confidence, as it involves rigorous audits (Requirement 9.2) and continual improvement (Requirement 10.1), ensuring that AI systems are managed responsibly and effectively.

Integration with Other Management Systems

ISO/IEC 42001:2023 can be integrated with other management system standards, such as ISO/IEC 27001 for information security and ISO 9001 for quality management, demonstrating the standard’s compatibility with existing organisational processes and systems (Annex D).

Addressing Ethical AI, Transparency, and Accountability

ISO 42001 mandates that AI systems be developed with a focus on fairness and without bias, addressing ethical AI (Annex C.2.5). Transparency is achieved through clear documentation and open communication regarding AI system functionalities and governance (Annex C.2.11). Accountability is ensured by establishing roles and responsibilities throughout the AI system’s lifecycle, as outlined in Annex A Control A.3.2.

Accountability Throughout the Lifecycle

Accountability is maintained throughout the AI system’s lifecycle, with roles and responsibilities clearly defined and communicated within the organisation (Requirement 5.3). This includes ensuring competence (Requirement 7.2) and awareness (Requirement 7.3) among personnel involved in AI-related processes.

Enhancing Reputation and Competitive Edge

Adherence to ISO 42001 enhances an organisation's reputation and competitive edge by demonstrating a commitment to managing AI systems in a responsible and ethical manner, in line with legal and regulatory requirements (Requirement 4.1, Requirement 4.2, Requirement 4.3).

The Certification Process

Compliance with ISO 42001 demonstrates an organisation’s dedication to ethical standards and risk management in AI applications (Requirement 1). It also facilitates adherence to legal and regulatory requirements.

Scope and Applicability

Applicable to any organisation, regardless of size, type, or nature, ISO/IEC 42001:2023 provides guidance for those providing or using AI systems (Requirement 1). It is relevant across various industries and sectors, emphasising the standard’s broad applicability and the importance of aligning with international best practices (Annex D).

The Role of Top Management

Top management plays a critical role in demonstrating leadership and commitment to the AI management system (Requirement 5.1). They are responsible for ensuring that the AI policy and objectives are established and compatible with the strategic direction of the organisation (Requirement 5.2).

Risk Management Process

The standard details the AI risk assessment and treatment process: organisations are required to identify, analyse, evaluate, and treat AI risks so that the AI management system achieves its intended outcomes (Requirement 6.1.2, Requirement 6.1.3).
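The identify, analyse, evaluate, and treat sequence can be illustrated with a simple risk register. This is a minimal sketch under stated assumptions: the 1 to 5 scales, the likelihood times impact score, the acceptance threshold of 8, and the sample risks are all hypothetical choices, since the standard leaves the scoring method to the organisation.

```python
from dataclasses import dataclass


@dataclass
class AIRisk:
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain); hypothetical scale
    impact: int      # 1 (negligible) to 5 (severe); hypothetical scale
    treatment: str = "undecided"

    @property
    def score(self) -> int:
        # Analyse: a simple likelihood x impact product
        return self.likelihood * self.impact


def evaluate(risk: AIRisk, acceptance_threshold: int = 8) -> bool:
    """Evaluate: True when the risk score exceeds the acceptance threshold."""
    return risk.score > acceptance_threshold


def treat(risks, acceptance_threshold: int = 8):
    """Treat: mark each risk 'mitigate' or 'accept' and rank by score, highest first."""
    for risk in risks:
        risk.treatment = "mitigate" if evaluate(risk, acceptance_threshold) else "accept"
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Identify: illustrative entries only
register = [
    AIRisk("Training data under-represents a user group", likelihood=4, impact=4),
    AIRisk("Model output is not explainable to end users", likelihood=3, impact=3),
    AIRisk("Minor latency increase after model update", likelihood=2, impact=2),
]

for risk in treat(register):
    print(f"{risk.score:>2}  {risk.treatment:8}  {risk.description}")
```

In practice the treatment options would be richer than "mitigate" or "accept", and each decision would be recorded as documented information.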

AI System Impact Assessment

Organisations must assess the potential consequences of AI systems on individuals, groups, and society, documenting the results as part of the AI system impact assessment process (Requirement 6.1.4, Annex A Control A.5).

Documented Information

Creating, maintaining, and controlling documented information is necessary for ensuring the availability and suitability of information where and when it is needed, and its protection from loss of confidentiality or integrity (Requirement 7.5).

Continual Improvement

Continual improvement is emphasised as a key aspect of the AI management system, with organisations engaging in recurring activities to enhance performance (Requirement 10.1).

Conducting an Internal Audit

A critical step in the certification process is conducting an internal audit, as per Requirement 9.2, evaluating the AI management system’s effectiveness against ISO 42001 standards. This audit, which includes a review of the AI policy as per B.2.4 and reporting of concerns in line with A.3.3, serves as a preparatory stage for the external audit by pinpointing areas of non-conformity and opportunities for improvement.

Certification Audit by an Accredited Third-Party

A certification audit by an accredited third party offers an impartial assessment of the AI management system’s conformance to ISO 42001. This external validation, which can be further informed by Annex D’s guidance on the use of the AI management system across domains or sectors, enhances credibility and assures stakeholders of the organisation’s commitment to responsible AI practices.

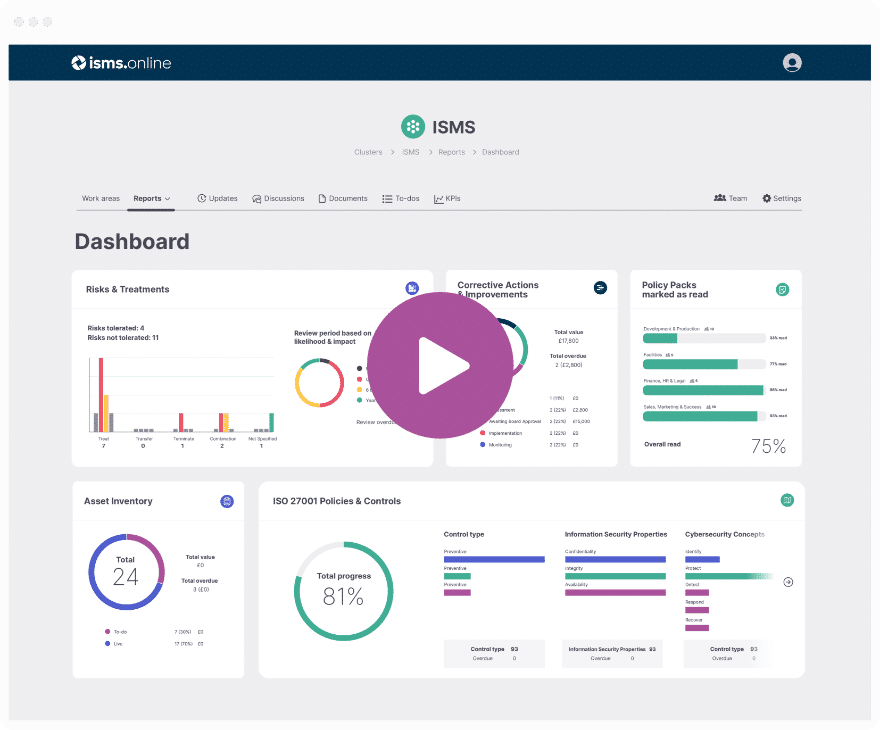

Get an 81% headstart

We've done the hard work for you, giving you an 81% Headstart from the moment you log on.

All you have to do is fill in the blanks.

Establishing an AI Policy

Establishing an AI policy is a foundational requirement for ISO 42001 certification, forming the cornerstone of an organisation’s AI governance framework. This policy ensures that AI systems are developed and used in an ethical, transparent, and accountable manner, reflecting the organisation’s commitment to privacy, security, and bias mitigation in line with Requirement 5.2. The AI policy must be documented as per A.2.2, and should be informed by business strategy, organisational values, and the level of risk the organisation is willing to pursue or retain, as suggested in B.2.2. Regular reviews and updates to the AI policy are important to ensure its continuing suitability, adequacy, and effectiveness, aligning with B.2.4.

Identifying and Addressing Risks and Opportunities

Identifying and addressing risks and opportunities for AI systems is a dynamic process that necessitates a thorough understanding of the AI system’s lifecycle. Organisations are required to conduct regular risk assessments to evaluate potential adverse impacts and identify opportunities for enhancing AI system performance and trustworthiness, as stipulated in Requirement 6.1 and A.5.3. Setting objectives that affect the AI system design and development processes is guided by B.5.3, ensuring that risks are managed effectively throughout the AI system’s lifecycle.

The Critical Role of AI System Impact Assessments

AI system impact assessments are critical for ISO 42001 compliance, providing insights into the potential consequences of AI systems on individuals and society. These assessments are essential for organisations to anticipate and mitigate negative outcomes, ensuring responsible deployment and operation of AI technologies, as required by Requirement 6.1.4 and A.5.2. The process should consider the legal position or life opportunities of individuals, as well as universal human rights, as included in the implementation guidance of B.5.2.

Implementing Controls for AI System Lifecycle Management

Effective lifecycle management of AI systems is achieved through the implementation of robust controls detailed in Annex A. These controls govern the various stages of the AI system’s lifecycle, from design and development to deployment and decommissioning, ensuring adherence to the established AI policy and management system requirements. Organisations must plan, implement, and control the processes needed to meet AI management system requirements, as outlined in Requirement 8.1. Defining the criteria and requirements for each stage of the AI system life cycle is a control objective under A.6.2, with implementation guidance provided in B.6.2.
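One way to picture stage-specific criteria is a simple stage gate that blocks progression until the criteria for the current stage are met. The sketch below is illustrative only: the four stage names follow the paragraph above, while the criteria names and the `missing_criteria` and `next_stage` helpers are hypothetical and not taken from the standard.

```python
# Hypothetical exit criteria per lifecycle stage (assumed for illustration)
STAGE_CRITERIA = {
    "design": {"requirements_documented", "impact_assessment_done"},
    "development": {"data_quality_checked", "verification_passed"},
    "deployment": {"deployment_plan_approved", "user_information_published"},
    "decommissioning": {"data_retention_resolved"},
}
STAGE_ORDER = list(STAGE_CRITERIA)  # dicts preserve insertion order


def missing_criteria(stage, completed):
    """Return the criteria for `stage` that are not yet in `completed`, sorted."""
    return sorted(STAGE_CRITERIA[stage] - set(completed))


def next_stage(stage, completed):
    """Return the following stage if all criteria are met, else raise ValueError.

    Returns None when `stage` is the last stage in the lifecycle.
    """
    missing = missing_criteria(stage, completed)
    if missing:
        raise ValueError(f"Cannot leave '{stage}': missing {missing}")
    index = STAGE_ORDER.index(stage)
    return STAGE_ORDER[index + 1] if index + 1 < len(STAGE_ORDER) else None


print(missing_criteria("design", {"requirements_documented"}))
print(next_stage("design", {"requirements_documented", "impact_assessment_done"}))
```

Keeping the criteria in one table makes them easy to review alongside the AI policy and to extend with organisation-specific checks.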

Role of Annexes A to D in Supporting the Certification Process

Annex A of ISO 42001 is pivotal for organisations as it outlines specific control objectives and controls that are essential for managing risks associated with AI systems. It serves as a reference point for establishing and maintaining an AI policy that aligns with ethical AI, transparency, accountability, privacy, security, and bias mitigation. The controls within A.2.2 guide the documentation of an AI policy, while A.3.2 emphasises the importance of defining AI roles and responsibilities. Additionally, A.5 and A.7 focus on assessing the impacts of AI systems and managing data for AI systems, respectively. A.8 ensures that information for interested parties of AI systems is adequately addressed.

Implementation Support from Annex B

Annex B offers valuable implementation guidance for the controls listed in Annex A. It provides organisations with practical advice on how to apply these controls within their AI management systems, ensuring that the theoretical aspects of the standard are translated into actionable steps. For instance, B.2.2 provides implementation guidance for establishing an AI policy, and B.3.2 offers advice on defining AI roles and responsibilities. B.5.2 assists with the AI system impact assessment process, while B.7.2 supports the management of data for development and enhancement of AI systems. B.8.2 focuses on the system documentation and information for users.

Objective Identification with Annex C

Annex C aids organisations in identifying AI-related objectives and potential risk sources. This annex helps to ensure that the AI management system is aligned with the organisation’s strategic direction and that it addresses both the opportunities and challenges presented by AI technologies. Objectives such as C.2.1 for accountability, C.2.4 for environmental impact, C.2.5 for fairness, C.2.7 for privacy, C.2.10 for security, and C.2.11 for transparency and explainability are necessary for a comprehensive AI management system.

Sector-Specific Applications in Annex D

Annex D explains the application of the AI management system across various sectors, demonstrating the versatility and adaptability of ISO 42001. It underscores the standard’s relevance to different domains, emphasising the importance of a tailored approach to AI management that considers sector-specific requirements and challenges. D.1 provides a general overview, while D.2 stresses the importance of integrating the AI management system with other management system standards.

Enhancing Trust and Competitive Advantage with ISO 42001 Certification

Achieving ISO 42001 certification significantly bolsters customer trust and confidence in AI systems. Organisations adhering to the standard’s stringent requirements, such as Requirement 4.2 and Requirement 5.2, demonstrate a commitment to ethical AI practices, which is increasingly valued in today’s technology-driven marketplace. The inclusion of Annex A Control A.7.4 ensures that data quality is at the forefront, enhancing the reliability of AI systems. By addressing Annex C.2.1, organisations underscore their accountability, further solidifying stakeholder trust. The general principles outlined in Requirement 5.2 and the comprehensive approach of Annex D.1 provide a foundation for integrating AI management systems across various domains, offering a competitive advantage in the market.

Improving Risk Management and Decision-Making

ISO 42001 certification equips organisations with a robust framework for risk management. The standard’s Annex A controls, particularly A.5.5, guide the identification, assessment, and treatment of AI-related risks, leading to more informed and strategic decision-making processes. The objectives for responsible development of AI systems, as detailed in B.5.3, along with the consideration of risk sources related to machine learning in C.3.4, ensure a comprehensive approach to AI risk management.

Facilitating Legal and Regulatory Compliance

Compliance with legal and regulatory requirements is streamlined through ISO 42001 certification. The standard’s comprehensive approach, including Requirement 4.3 and Requirement 5.3, ensures that organisations meet their obligations, reducing the risk of non-compliance and associated penalties. The inclusion of Annex A Control A.8.5 and Annex B.7.3 emphasises the importance of providing information to interested parties and the acquisition of data, respectively. By addressing privacy concerns as per Annex C.2.7 and considering the general principles in Annex D.1, organisations can ensure compliance across various sectors.

Maintaining ISO 42001 Certification: Continuous Improvement

Organisations must uphold a steadfast commitment to continual improvement (Requirement 10.1) by regularly reviewing and enhancing their AI policies and processes. This keeps the AI management system aligned with the evolving landscape of AI technology and ethics and maintains its effectiveness.

Conducting Regular Internal Audits

Regular internal audits, as mandated by Requirement 9.2, are essential to ISO 42001 certification. These audits, which should encompass the control objectives and controls specified in Annex A, enable organisations to critically evaluate their AI management systems and pinpoint areas for improvement. The insights gleaned from these audits are needed to make the adjustments that uphold compliance and bolster the AI management system’s performance.

Audit Planning and Execution

In line with Requirement 9.2, organisations should meticulously plan and execute these audits to ensure comprehensive coverage of the AI management system, including risk management, control effectiveness, and adherence to AI policies and objectives.

The Imperative of Continual Improvement

Continual improvement, as stipulated in Requirement 10.1, is pivotal to the ISO 42001 certification. It propels AI management systems to evolve in response to emerging risks, technological advancements, and regulatory shifts. Organisations are encouraged to cultivate a culture of innovation and learning, enabling proactive adaptation of their AI management practices.

Leveraging Organisational Objectives and Risk Sources

In the spirit of Annex C, organisations should consider the outlined objectives and risk sources to inform their continual improvement strategies, ensuring that their AI management systems are responsive to new challenges and opportunities.

Ensuring Ongoing Compliance with ISO 42001

Organisations are tasked with the following to maintain compliance with ISO 42001:

- Monitoring Governance Changes: Keeping abreast of changes in AI governance requirements is essential, as per Requirement 4.1, to ensure that AI management systems are updated accordingly.

- Engaging in Professional Development: Regular training and professional development, in accordance with Requirement 7.2 and 7.3, are vital for staying informed of AI management best practices.

- Streamlining Compliance Management: The use of tools and platforms, such as ISMS.online, is recommended to streamline compliance management and documentation processes, aligning with the guidance provided in Annex B.

Planning for Compliance

Organisations should integrate these activities into their planning processes as described in Requirement 6, ensuring that the AI management system can achieve its intended outcomes.

Adapting Across Domains and Sectors

The need to monitor AI governance changes and engage in regular training underscores the applicability of the AI management system across various domains or sectors, as highlighted in Annex D. This adaptability is essential for ensuring the AI management system’s relevance and effectiveness in diverse organisational contexts.

Addressing Implementation Challenges of ISO 42001

Organisations navigating the complexities of aligning with ISO 42001 standards often grapple with integrating ethical AI principles into their corporate culture and ensuring transparency and accountability of AI systems, as mandated by A.2.2. These challenges are multifaceted, ranging from internal process updates to staff and supplier compliance, each requiring a strategic approach informed by various aspects of the standard.

Overcoming Obstacles in Updating Internal Processes

Organisations aiming to update internal processes must undertake comprehensive reviews of existing workflows, pinpointing integration points for AI management practices. This critical step, rooted in Requirements 4.4 and 5.3, involves redefining roles and responsibilities, ensuring staff members are equipped to manage AI-related risks and opportunities effectively, as outlined in A.3.2 and B.3.2.

Ensuring Staff and Supplier Compliance

Achieving compliance among staff and suppliers necessitates a multifaceted approach, anchored in Requirements 7.2, 7.3, and 7.4. Organisations should focus on:

- Training and Education: Implement ongoing training programmes, ingraining ISO 42001 requirements into the organisational fabric to foster a culture of compliance.

- Clear Communication: Establish transparent channels for policy changes and compliance expectations, ensuring alignment with A.7.2 and A.10.3.

- Monitoring and Evaluation: Regularly monitor compliance and evaluate the effectiveness of controls, leveraging insights from B.7.2 and B.10.3 to inform practices.

Applications of ISO 42001 in AI Management Systems

Organisations across various industries have successfully integrated ISO 42001 standards into their AI management systems, leading to enhanced ethical practices and governance. By adhering to the controls specified in Annex A, entities have established robust frameworks that govern AI system development, deployment, and monitoring, ensuring that these systems are used responsibly and ethically.

Practical Benefits Observed Post-Certification

Post ISO 42001 certification, organisations have reported tangible benefits, including:

- Strengthened stakeholder confidence in AI applications, aligning with C.2.1 and D.2 regarding the integration of AI management systems with other management system standards.

- Improved risk management strategies, particularly in identifying and mitigating biases in AI systems, which corresponds to A.5 and B.5.2.

- Enhanced compliance with evolving data protection and privacy regulations, reflecting A.7 and B.7.3.

Ethical Development and Use of AI Technologies

ISO 42001 certification has a profound impact on the ethical development and use of AI technologies. It compels organisations to:

- Implement transparent AI processes, as guided by B.2.2 and C.2.11.

- Ensure accountability in AI decision-making, which is a core aspect of C.2.1.

- Uphold privacy and security throughout the AI system lifecycle, in line with A.7 and B.7.4.

Compliance With Global AI Regulations

Organisations seeking to align with international AI regulations find ISO 42001 certification an essential tool. This standard provides a comprehensive set of requirements that reflect global best practices for AI management systems, ensuring that organisations can meet diverse regulatory expectations.

Harmonising AI Governance Globally

ISO 42001 plays a pivotal role in the harmonisation of AI governance by offering a uniform framework for ethical AI development and deployment. It bridges the gaps between different regulatory environments, fostering a common understanding and approach to AI governance. By understanding the organisation and its context (4.1), and the needs and expectations of interested parties (4.2), organisations can determine the scope of their AI management system (4.3) and establish a robust AI management system (4.4). This harmonisation is further supported by the standard’s applicability across various domains or sectors, as outlined in Annex D.

Leveraging ISO 42001 for Legal and Ethical Challenges

Organisations leverage ISO 42001 certification to navigate the complex landscape of legal and ethical AI challenges. The standard’s Annex A controls provide a clear pathway for compliance, addressing critical areas such as data protection (A.4.3), bias mitigation, and accountability in AI systems (A.3.2). These controls are complemented by the implementation guidance provided in Annex B, which includes developing an AI policy that aligns with business strategy and legal requirements (B.2.2), and establishing a process for reporting concerns about AI systems (B.3.3). Additionally, the standard emphasises the importance of assessing the impact of AI systems on individuals (A.5.4) and society (A.5.5), with further guidance on conducting these assessments provided in B.5.2.

The Importance of International Collaboration

International collaboration is crucial for unified AI governance and the widespread acceptance of ISO 42001. By contributing to the development and refinement of the standard, organisations can ensure it reflects a diverse range of perspectives and requirements, facilitating global interoperability and trust in AI technologies. This collaboration is underpinned by a shared understanding of the organisation’s context (4.1) and the needs and expectations of interested parties (4.2). The standard’s flexibility to adapt to various domains or sectors (Annex D) is essential for addressing accountability (C.2.1), AI expertise (C.2.2), environmental impact (C.2.4), the complexity of the environment (C.3.1), and technology readiness (C.3.7).

AI Safety and Security

ISO 42001 certification is instrumental in enhancing the safety and security of AI systems. It mandates a comprehensive set of controls, as detailed in Annex A, which organisations must implement to manage risks effectively throughout the AI system lifecycle.

Mandated Security Measures in ISO 42001

ISO 42001 certification addresses a spectrum of security measures that are essential for the protection of AI systems. These measures include:

- Data Encryption: Ensuring the confidentiality and integrity of data used by AI systems, aligning with A.7.4 and B.7.4, which emphasise the importance of data quality and integrity, and Requirement 3.23, highlighting the preservation of information security.

- Access Controls: Restricting system access to authorised individuals to prevent unauthorised use, corresponding with A.3.2 and B.3.2, focusing on defining and allocating roles and responsibilities for AI systems, and Requirement 7.2, ensuring that individuals are competent and trained.

- Incident Response: Establishing robust procedures for detecting, reporting, and responding to security incidents is in line with A.8.4 and B.8.4, which provide guidance on incident communication plans and procedures, and Requirement 10.2, underscoring the importance of corrective action.

Managing Supply Chain Security

Organisations can leverage ISO 42001 to manage supply chain security by:

- Vendor Assessment: Evaluating suppliers’ compliance with ISO 42001 standards is supported by A.10.3 and B.10.3, guiding the establishment of processes to ensure supplier alignment with responsible AI system development and usage practices, and Requirement 4.2, considering supplier relationships in the context of interested parties’ requirements.

- Contractual Controls: Including ISO 42001 requirements in contracts with third-party vendors is related to A.10.2 and B.10.2, ensuring that responsibilities within the AI system life cycle are clearly defined and allocated, and Requirement 4.4, integrating AI management system requirements into organisational processes.

- Continuous Monitoring: Implementing ongoing surveillance of supply chain risks and vulnerabilities aligns with A.4.6 and B.4.6, documenting competencies for monitoring and managing AI systems, and Requirement 9.1, highlighting the need for ongoing evaluation of AI system performance and supply chain security.

Preparing for ISO 42001 Certification with ISMS.online

ISMS.online aids in managing documentation and audit preparation for ISO 42001. It offers:

- Centralised Document Management: Organising all necessary documentation in one place, ensuring easy access and control, aligns with Requirement 7.5 on documented information control.

- Audit Trail Features: Providing clear records of changes and updates to support audit processes aligns with Requirement 9.2 on internal audits, ensuring the AI management system conforms to the organisation’s own requirements and the requirements of the standard.
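To make the idea of an audit trail concrete, the sketch below shows one generic way to keep a tamper-evident change log, in which each entry stores the hash of the entry before it. It is an illustration under stated assumptions and does not describe how ISMS.online or any other platform implements its audit trail: the `AuditTrail` class, its field names, and the sample authors are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only change log; each entry stores the hash of the previous one."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body):
        # Serialise with sorted keys so the same content always hashes the same way
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def record(self, document, author, change):
        """Append a change record linked to the previous entry."""
        body = {
            "document": document,
            "author": author,
            "change": change,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "previous_hash": self.entries[-1]["hash"] if self.entries else "",
        }
        entry = dict(body, hash=self._digest(body))
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True if no entry has been altered or reordered."""
        previous_hash = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["previous_hash"] != previous_hash:
                return False
            if self._digest(body) != entry["hash"]:
                return False
            previous_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("AI policy", "j.doe", "Initial version approved")
trail.record("AI policy", "a.smith", "Added review schedule")
print(trail.verify())
```

Because each hash depends on the previous entry, editing an earlier record causes `verify()` to fail, which is the property an auditor would look for in change records.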

Benefits of ISMS.online

Our platform offers several benefits for ISO 42001 compliance management:

- Structured Framework: Aligning with ISO 42001’s Annex A controls for systematic AI management corresponds to A.4.2 on resource documentation and A.5.5 on processes for responsible AI system design and development.

- Efficiency: Automating workflows to save time and reduce the potential for human error supports Requirement 6, particularly 6.1 on actions to address risks and opportunities, by automating risk management processes.

- Compliance Tracking: Monitoring the implementation of required controls and managing compliance status relates to Requirement 9.1 on monitoring, measurement, analysis, and evaluation, ensuring the effectiveness of the AI management system.

Leveraging Technology for the ISO 42001 Certification Process

Technology plays a key role in the ISO 42001 certification process by:

- Streamlining Communication: Facilitating collaboration among team members working on certification efforts supports Requirement 7.4 on communication, which includes determining the internal and external communications relevant to the AI management system.

- Enhancing Visibility: Providing dashboards and reporting tools for real-time insights into the AI management system’s status aligns with B.9.1 on objectives for ensuring transparency in AI system operations and providing explanations of important factors influencing system results.

- Risk Management Tools: Assisting in the identification, assessment, and treatment of AI-related risks in line with Annex A controls corresponds to A.5.3 on objectives for responsible development of AI systems and C.3.4 on risk sources related to machine learning, ensuring that risks are identified and managed effectively.

Organisations can also benefit from the guidance provided in Annex D on the use of the AI management system across domains or sectors, ensuring that the AI management system is applicable and effective in various operational contexts.

Partnering with ISMS.online

Our platform offers a suite of resources designed to facilitate continuous improvement and maintenance of ISO 42001 certification:

Document Control

- Centralised management of policies, procedures, and records to ensure compliance with Requirement 7.5.

- Supports the documentation and implementation of data management processes as outlined in A.7.2.

Risk Management

- Tools to identify, assess, and treat AI-related risks in accordance with Requirement 6.1 and Annex A Controls.

- Facilitates the documentation of human resources competences utilised for the AI system, aligning with A.4.6.

Audit Management

- Features to plan, conduct, and review internal audits, streamlining the certification process and adhering to Requirement 9.2.

Choosing ISMS.online for Compliance and Certification Management

Organisations choose ISMS.online for its:

User-Friendly Interface

- Simplifies the navigation and management of the AI governance framework, enhancing the competence of personnel as per B.4.6.

Customisable Workflows

- Adapts to the unique needs of each organisation while maintaining compliance with ISO 42001, supporting the documentation of specific processes for responsible AI system design and development in line with B.5.5.

Collaborative Environment

- Enables teams to work together effectively on certification-related tasks, aligning with the implementation guidance for reporting concerns about the organisation’s role with respect to an AI system as per B.3.3.

Getting Started with ISMS.online

To begin enhancing AI governance and performance with ISMS.online, organisations can:

- Register for a Demo: Explore the platform's features and understand how it aligns with ISO 42001 requirements, including integration with other management systems across various domains or sectors as per D.2.

- Set Up an Account: Customise the platform to fit the organisation's specific AI management needs, addressing transparency and explainability, which are potential AI-related organisational objectives as per C.2.11.

- Utilise Training Resources: Access training materials to maximise the benefits of ISMS.online for ISO 42001 certification efforts, aligning with the objective of developing AI expertise within the organisation as per C.2.2.