Understanding ISO 42001 Annex A Control A.9 – Use of AI Systems

ISO 42001 Annex A Control A.9 is designed to ensure that organisations use Artificial Intelligence (AI) systems responsibly, aligning with organisational policies and broader AI governance frameworks. This control emphasises the importance of establishing clear processes and objectives for AI use, ensuring compliance with intended purposes, and documenting all relevant procedures and outcomes.

Purpose and Contribution to Responsible AI Use

The primary purpose of Annex A Control A.9 is to guide organisations in the ethical, transparent, and accountable use of AI systems. By setting a global standard, it aims to foster trust and reliability in AI technologies across various sectors. This control contributes to responsible AI use by mandating detailed documentation, risk management strategies, and continuous monitoring of AI systems’ performance.

Key Components for Compliance Officers

For compliance officers, the key components of A.9 to note include:

- Processes for Responsible Use: Defining and documenting how AI systems should be responsibly used within the organisation.

- Objectives for Responsible Use: Identifying and documenting the goals guiding the ethical use of AI, such as fairness, accountability, and transparency.

- Intended Use Compliance: Ensuring AI systems are used as intended, with necessary documentation and monitoring in place.

Alignment with Broader AI Governance Frameworks

Annex A Control A.9 aligns with broader AI governance frameworks by integrating with other ISO management system standards, such as ISO 27001 and ISO 9001, and with applicable AI legislation. This ensures a unified approach to managing AI risks, enhancing system security, and promoting ethical AI use across all organisational levels. Through this alignment, organisations can achieve a comprehensive and consistent framework for AI management that addresses ethical considerations, data privacy, and security risks.

Processes for Responsible Use of AI Systems – A.9.2

Defining and Documenting Processes

Under ISO 42001 Annex A Control A.9.2, organisations are required to establish and document specific processes for the responsible use of AI systems. These processes must encompass considerations such as required approvals, cost implications, sourcing requirements, and adherence to legal and regulatory standards. It’s imperative for these processes to be clearly defined, documented, and accessible to relevant stakeholders within the organisation.

Implementation and Oversight

Effective implementation of these documented processes is crucial. Organisations can achieve this by integrating these processes into their existing management systems, ensuring they are aligned with organisational goals and compliant with relevant standards. Compliance officers play a pivotal role in this context, overseeing the adherence to these processes, conducting regular reviews, and facilitating updates as necessary to respond to evolving regulatory and operational landscapes.

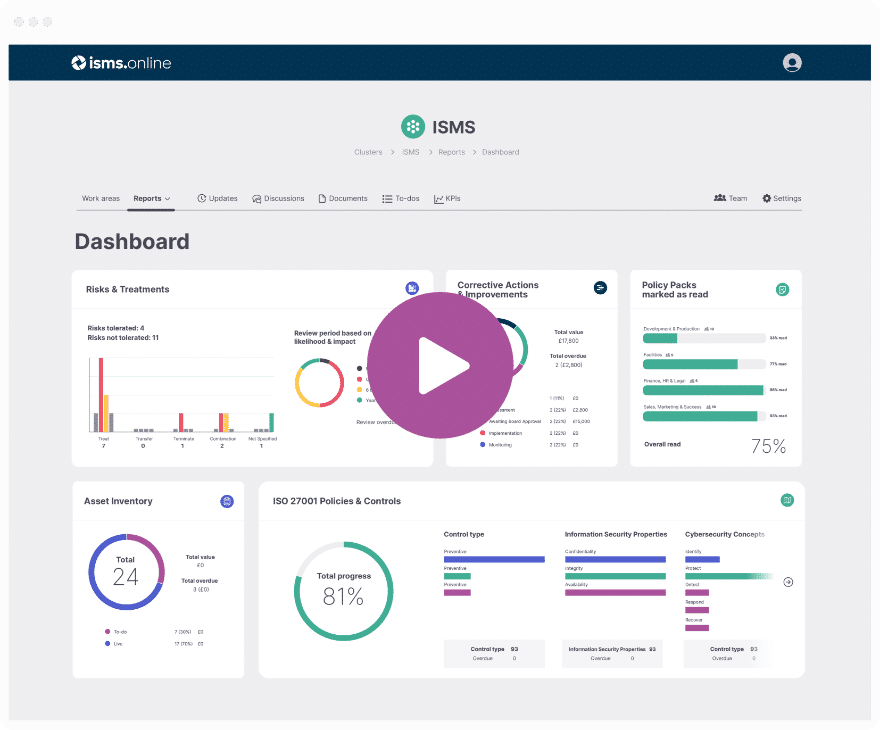

Role of ISMS.online

ISMS.online significantly aids in the documentation and management of processes for the responsible use of AI systems. Our platform offers tools and templates that streamline the creation, storage, and sharing of documentation. Additionally, ISMS.online enhances collaboration among team members, enabling efficient updates and compliance tracking. By leveraging our platform, organisations can ensure their processes for AI use are not only well-documented but also effectively implemented and monitored, aligning with ISO 42001 requirements.

Objectives for Responsible Use of AI System – A.9.3

Identifying and Documenting Objectives

Under ISO 42001 Annex A Control A.9.3, the establishment of clear objectives is crucial for guiding the responsible use of AI systems. These objectives typically encompass fairness, accountability, transparency, explainability, reliability, safety, robustness, privacy, security, and accessibility. To identify and document these objectives, organisations should consider their specific context, including the operational, legal, and ethical landscape in which they operate. This process involves engaging stakeholders across various departments to ensure a comprehensive understanding of what responsible AI use means for the organisation.

Challenges in Aligning AI Use with Objectives

Organisations might encounter challenges in aligning AI use with these objectives due to the dynamic nature of AI technologies and the complexity of ethical considerations. Balancing innovation with ethical constraints, managing evolving regulatory requirements, and ensuring stakeholder consensus can pose significant hurdles.

Benefits of Establishing Clear Objectives

Establishing clear objectives for the responsible use of AI systems benefits overall AI system management by providing a structured framework for decision-making and operational processes. It ensures that AI deployments are aligned with organisational values and regulatory requirements, thereby mitigating risks and enhancing trust among users and stakeholders. Moreover, clear objectives facilitate the development of specific strategies for achieving responsible AI use, including mechanisms for human oversight and performance monitoring, which are essential for maintaining compliance and fostering an ethical AI ecosystem.

Intended Use of the AI System – A.9.4

Ensuring Compliance with Intended Use

ISO 42001 Annex A Control A.9.4 requires that AI systems be used in accordance with their intended purposes, as described in their accompanying documentation. This aligns the deployment and operation of AI technologies with predefined objectives and reduces the risk of misuse or unintended applications. To support compliance, organisations should maintain detailed records, including deployment instructions and performance expectations, which guide the responsible use of AI systems.

Required Documentation

To adhere to A.9.4, organisations must compile and preserve comprehensive documentation that delineates the intended use of AI systems. This encompasses deployment guidelines, resource requirements, and criteria for human oversight. Such documentation not only facilitates accurate system performance but also ensures that AI applications align with legal and ethical standards.
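
As an illustration only, the documentation described above could be held as a structured record so that a proposed use can be checked against it. The sketch below is a minimal Python example under stated assumptions: the class name `IntendedUseRecord`, its fields, the system name, and the file path are all hypothetical and are not prescribed by ISO 42001.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntendedUseRecord:
    """Hypothetical record of an AI system's documented intended use."""
    system_name: str
    permitted_uses: frozenset       # documented uses, stored in lower case
    deployment_guideline: str       # assumed location of deployment instructions
    resource_requirements: str      # free-text note on compute, data, staffing
    human_oversight_required: bool  # whether outputs need human review


def is_within_intended_use(record: IntendedUseRecord, proposed_use: str) -> bool:
    """Return True only if the proposed use is explicitly documented."""
    return proposed_use.strip().lower() in record.permitted_uses


# Example values are invented for illustration.
record = IntendedUseRecord(
    system_name="cv-screening-assistant",
    permitted_uses=frozenset({"rank applications for recruiter review"}),
    deployment_guideline="docs/deployment.md",
    resource_requirements="1 GPU node; quarterly retraining budget",
    human_oversight_required=True,
)

print(is_within_intended_use(record, "Rank applications for recruiter review"))  # True
print(is_within_intended_use(record, "automatically reject applicants"))         # False
```

Treating any undocumented use as out of scope is a deliberately conservative design choice; an organisation may prefer a review workflow instead of a hard rejection.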

Monitoring and Enforcement Mechanisms

Effective monitoring of AI systems is critical for ensuring they operate as intended. Organisations can implement automated tools and human oversight to regularly assess AI performance against documented expectations. In instances where deviations are detected, predefined protocols for addressing and rectifying these discrepancies should be activated, thereby safeguarding against potential impacts on stakeholders and compliance obligations.

Addressing Deviations from Intended Use

To manage deviations from intended use, organisations should establish clear mechanisms for reporting and rectifying non-compliance. This includes maintaining event logs and other records that can demonstrate adherence to intended use or facilitate the investigation of issues. By fostering an environment of transparency and accountability, organisations can swiftly respond to deviations, ensuring AI systems continue to operate within their defined ethical and operational parameters.
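
The monitoring and event-log ideas above can be sketched in a few lines of Python. This is a hedged example, not a reference implementation: the metric names, the acceptable ranges in `EXPECTATIONS`, and the JSON log format are assumptions chosen for illustration.

```python
import json
from datetime import datetime, timezone

# Hypothetical documented expectations: metric name -> (minimum, maximum).
EXPECTATIONS = {
    "accuracy": (0.90, 1.00),
    "false_positive_rate": (0.00, 0.05),
}


def find_deviations(observed, expectations):
    """Compare observed metrics with documented ranges and list any deviations."""
    deviations = []
    for metric, (low, high) in expectations.items():
        value = observed.get(metric)
        if value is None:
            # A metric that was expected but not reported is itself a deviation.
            deviations.append({"metric": metric, "issue": "missing"})
        elif not low <= value <= high:
            deviations.append({"metric": metric, "issue": "out_of_range",
                               "value": value, "expected": [low, high]})
    return deviations


def to_event_log_lines(system, deviations):
    """Serialise each deviation as one JSON line for an append-only event log."""
    timestamp = datetime.now(timezone.utc).isoformat()
    return [json.dumps({"system": system, "time": timestamp, **d}, sort_keys=True)
            for d in deviations]


observed = {"accuracy": 0.87, "false_positive_rate": 0.03}
deviations = find_deviations(observed, EXPECTATIONS)
for line in to_event_log_lines("cv-screening-assistant", deviations):
    print(line)
```

Here only `accuracy` falls outside its documented range, so a single event is produced. In practice the lines would be appended to whatever log store the organisation already uses, and each event would trigger the predefined rectification protocol.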

Risk Management Strategies for AI Systems

Unique Risks Associated with AI Systems

AI systems, by their nature, introduce a set of unique risks that differ significantly from traditional IT systems. These include ethical considerations, such as bias and fairness; security risks, particularly in data privacy; and operational risks, including reliability and robustness. Under ISO 42001, recognising and addressing these risks is paramount for responsible AI use.

Dynamic Risk Identification and Analysis

Dynamic risk identification and analysis involve continuously monitoring AI systems for new and evolving risks. This process requires a comprehensive understanding of the AI system’s lifecycle, from development to deployment and maintenance. By employing tools like automated risk assessment software, organisations can detect potential issues in real-time, ensuring that risk management strategies remain relevant and effective.
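
One simple way to keep risk analysis current is to hold risks in a register and re-score them whenever monitoring produces new information. The sketch below assumes a common likelihood-times-impact scoring convention on 1 to 5 scales and a review threshold of 12; neither the scales nor the threshold come from ISO 42001, and the example risks are invented.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """Hypothetical risk-register entry; 1 (low) to 5 (high) scales are assumed."""
    name: str
    category: str    # e.g. "ethical", "security", "operational"
    likelihood: int
    impact: int

    @property
    def score(self) -> int:
        # Assumed convention: score = likelihood x impact.
        return self.likelihood * self.impact


def prioritise(risks, threshold=12):
    """Return risks at or above the threshold, highest score first."""
    flagged = [r for r in risks if r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


register = [
    Risk("Biased ranking of applicants", "ethical", likelihood=3, impact=5),
    Risk("Training data leak", "security", likelihood=2, impact=5),
    Risk("Model drift after data change", "operational", likelihood=4, impact=3),
]

for risk in prioritise(register):
    print(f"{risk.score:>2}  {risk.category:<12} {risk.name}")
```

Updating a risk's `likelihood` or `impact` and calling `prioritise` again gives a refreshed shortlist, which is the "dynamic" part of the process in miniature.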

Tailored Strategies for AI-Specific Risks

To address AI-specific risks, tailored strategies that consider the unique characteristics of AI systems are essential. This includes implementing ethical AI guidelines to mitigate bias, enhancing data protection measures for privacy, and establishing robust monitoring systems for reliability. Each strategy should be customised to the organisation’s specific AI applications and operational context.

Effective Risk Management and Responsible AI Use

Effective risk management is a cornerstone of responsible AI use. By identifying, analysing, and addressing AI-specific risks, organisations can ensure that their AI systems operate ethically, securely, and reliably. This not only aligns with ISO 42001 standards but also builds trust among users and stakeholders, fostering a culture of accountability and continuous improvement in AI system management. At ISMS.online, we provide the tools and guidance necessary to implement these risk management strategies effectively, supporting your organisation’s journey towards responsible AI use.

Operational Planning and Resource Allocation

Strategic Approaches for AI System Objectives Setting

For setting objectives in AI system initiatives, a strategic approach is essential. This involves aligning AI objectives with the broader organisational goals and ensuring they are SMART (Specific, Measurable, Achievable, Relevant, Time-bound). At ISMS.online, we advocate for a participatory process where stakeholders from various departments contribute to defining these objectives, ensuring a holistic view of how AI can serve the organisation’s mission.
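
To make the SMART check concrete, an objective can be recorded with one field per criterion and then tested for gaps. The following Python sketch is illustrative only: the `Objective` fields and the rule that "achievable" is evidenced by a sign-off flag are assumptions, since achievability cannot be verified mechanically.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Objective:
    """Hypothetical AI objective record with one field per SMART criterion."""
    description: str              # Specific: what is to be achieved
    metric: str                   # Measurable: how progress is measured
    target: Optional[float]       # Measurable: the agreed numeric target
    feasibility_confirmed: bool   # Achievable: assumed to be a stakeholder sign-off
    linked_goal: str              # Relevant: the organisational goal it supports
    deadline: Optional[date]      # Time-bound: when it is due


def smart_gaps(obj: Objective) -> list:
    """List the SMART criteria that the record does not yet evidence."""
    gaps = []
    if not obj.description.strip():
        gaps.append("specific")
    if not obj.metric.strip() or obj.target is None:
        gaps.append("measurable")
    if not obj.feasibility_confirmed:
        gaps.append("achievable")
    if not obj.linked_goal.strip():
        gaps.append("relevant")
    if obj.deadline is None:
        gaps.append("time-bound")
    return gaps


draft = Objective(
    description="Reduce manual review time for support tickets",
    metric="median minutes per ticket",
    target=None,
    feasibility_confirmed=True,
    linked_goal="Improve customer response times",
    deadline=None,
)

print(smart_gaps(draft))  # ['measurable', 'time-bound']
```

A draft with an empty gap list is not automatically a good objective, but a non-empty list gives stakeholders a clear agenda for the next review.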

Ensuring Adaptability in AI Initiatives

Adaptability is key in the rapidly evolving field of AI. Organisations must foster a culture of continuous learning and flexibility to adapt AI strategies as new technologies emerge and regulatory landscapes change. This includes regular reviews of AI objectives and the readiness to pivot strategies in response to new insights or external pressures.

Considerations for Resource Allocation

Effective resource allocation for AI initiatives requires a careful assessment of the necessary tools, technologies, and talent. Organisations should consider not only the initial investment in AI technologies but also the ongoing costs for maintenance, updates, and training. Prioritising investments that offer the most significant impact on achieving AI objectives is crucial.

Impact of Operational Planning on the AI System Lifecycle

Operational planning significantly impacts the lifecycle of AI systems. It ensures that resources are efficiently used, risks are managed proactively, and AI initiatives are scalable and sustainable. Through meticulous planning, organisations can avoid common pitfalls such as resource shortages, misaligned objectives, and compliance issues, thereby enhancing the longevity and effectiveness of AI systems.

Performance Evaluation and Internal Auditing

Recommended Methods for Monitoring AI System Performance

For effective monitoring and measuring of AI system performance, a combination of quantitative and qualitative methods is recommended. Quantitatively, key performance indicators (KPIs) should be established, directly linked to the AI system’s objectives. Qualitatively, regular feedback from users and stakeholders provides insights into the system’s impact and areas for improvement. Together, these methods ensure a comprehensive understanding of the AI system’s performance.

Structuring Internal Audits for AI System Compliance

Internal audits should be structured as a systematic review of the AI system’s adherence to established policies, procedures, and standards. This involves examining documentation, interviewing personnel, and testing AI system outputs against expected results. Audits should be scheduled at regular intervals and after significant changes to the AI system or its operating environment.

Role of Management Reviews in the Evaluation Process

Management reviews play a critical role in the evaluation process by providing an opportunity for senior leadership to assess the overall effectiveness of the AI system and its compliance with ISO 42001. These reviews should consider audit findings, performance data, and stakeholder feedback to make informed decisions about necessary adjustments or improvements.

Support from ISMS.online

At ISMS.online, we offer tools and resources that streamline the performance evaluation and internal auditing processes. Our platform facilitates the documentation of KPIs, the scheduling of audits, and the collection and analysis of feedback. With ISMS.online, you can ensure that your AI system’s performance is continuously monitored, and compliance with ISO 42001 is maintained, fostering a culture of continuous improvement and responsible AI use.

Continual Improvement in AI System Management

Strategies for Recurring Enhancement

To foster recurring enhancement of AI systems, organisations should adopt a cyclical approach to improvement, akin to the Plan-Do-Check-Act (PDCA) cycle. This involves regularly reviewing AI system performance, identifying areas for enhancement, implementing changes, and evaluating the impact of those changes. At ISMS.online, we emphasise the importance of integrating continual improvement into the AI lifecycle, ensuring that AI systems evolve in alignment with technological advancements and changing organisational needs.

Adapting to Evolving AI Landscapes

Adaptation to the evolving AI landscape requires a proactive stance on innovation and a commitment to lifelong learning within the organisation. Staying abreast of emerging AI technologies and methodologies enables organisations to make informed decisions about incorporating new features or capabilities into existing AI systems. Our platform supports strategic decision-making by providing resources and tools that help you monitor AI trends and assess their relevance to your organisational goals.

The Role of Feedback in Improvement

Feedback from users and stakeholders, together with data from the AI system itself (such as logs and performance metrics), is invaluable for continual improvement. It provides direct insights into the system’s performance, user satisfaction, and potential areas for enhancement. Encouraging an open feedback culture and establishing mechanisms for collecting and analysing feedback ensures that improvement efforts are data-driven and aligned with user needs.

Documenting and Applying Lessons Learned

Effective documentation of lessons learned is crucial for capitalising on past experiences and avoiding repeated mistakes. Organisations should maintain records of improvement initiatives, their outcomes, and any challenges encountered. This documentation serves as a knowledge base for future improvement efforts and helps institutionalise the learning process. At ISMS.online, our platform facilitates the documentation and sharing of lessons learned, enabling organisations to apply these insights systematically for continuous AI system enhancement.

Documentation and Compliance for ISO 42001 Certification

Essential Documentation for Demonstrating Compliance

For organisations aiming to demonstrate compliance with ISO 42001, maintaining comprehensive documentation is crucial. This includes policies, procedures, risk assessments, impact analyses, and records of continual improvement efforts. Documentation serves as evidence of the organisation’s commitment to responsible AI use and management, aligning with the ISO 42001 framework.

Preparing for ISO 42001 Certification Audits

Preparation for ISO 42001 certification audits involves a thorough review of all relevant documentation, ensuring that AI systems are deployed and managed according to the standard’s requirements. Organisations should conduct internal audits to identify and address any gaps in compliance. Training staff on ISO 42001 principles and practices is also essential to ensure everyone understands their role in maintaining compliance.

Benefits of ISO 42001 Certification

ISO 42001 certification offers numerous benefits, including enhanced trust among stakeholders, improved risk management, and a competitive edge in the marketplace. Certification demonstrates an organisation’s dedication to ethical, transparent, and secure AI use, fostering confidence among customers, partners, and regulatory bodies.

Simplifying Compliance and Certification with ISMS.online

At ISMS.online, we streamline the compliance and certification process for ISO 42001. Our platform provides templates, tools, and resources to efficiently manage documentation, conduct risk assessments, and implement continual improvement processes. By leveraging ISMS.online, organisations can navigate the complexities of ISO 42001 compliance with ease, ensuring a smooth path to certification and beyond.

Ethical Considerations and Bias Mitigation in AI Systems

ISO 42001 addresses ethical considerations in AI use by setting out a framework that emphasises fairness, accountability, transparency, and privacy. These principles guide organisations in developing AI systems that respect human rights and avoid harm. To mitigate bias and ensure accountability, organisations are encouraged to use diverse datasets, conduct regular audits, and establish clear accountability mechanisms.

Strategies for Bias Mitigation and Accountability

To combat bias, organisations should employ strategies such as:

- Diverse Data Collection: Ensuring datasets are representative of all affected demographics to reduce bias.

- Regular Audits: Conducting periodic reviews of AI systems to identify and correct biases.

- Transparent Reporting: Making the methodologies, datasets, and algorithms used in AI systems accessible for scrutiny.
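
As a hedged illustration of what a regular bias audit might compute, the Python sketch below measures the selection rate for each group and the gap between the highest and lowest rates (often called the demographic parity difference). This is one common fairness measure among many and is not prescribed by ISO 42001; the group labels and sample data are invented.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Share of positive outcomes per group, from (group, selected) pairs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in outcomes:
        totals[group] += 1
        positives[group] += int(selected)
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Difference between the highest and lowest group selection rates."""
    return max(rates.values()) - min(rates.values())


# Invented audit sample: group label and whether the system selected the person.
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = selection_rates(sample)
gap = parity_gap(rates)
print(rates)  # {'A': 0.75, 'B': 0.25}
print(gap)    # 0.5
```

A large gap does not prove unfair treatment on its own, but it signals where a closer review of data and model behaviour is warranted.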

Integrating Ethical AI Governance

Integrating ethical AI governance involves:

- Establishing Ethical Guidelines: Creating clear policies that outline ethical considerations in AI development and use.

- Training and Awareness: Educating employees about the importance of ethics in AI and their role in upholding these standards.

- Stakeholder Engagement: Involving users, customers, and other stakeholders in discussions about ethical AI use.

Challenges in Ensuring Ethically Responsible AI Systems

Ensuring AI systems are ethically responsible can present challenges, including:

- Complexity of AI Technologies: The intricate nature of AI can make understanding and controlling all aspects difficult.

- Evolving Ethical Standards: As societal norms and regulations change, keeping AI systems aligned with these standards requires continual effort.

- Balancing Innovation with Ethics: Finding the right balance between advancing AI technologies and adhering to ethical principles can be challenging.

ISO 42001 Annex A Controls

| ISO 42001 Annex A Control | ISO 42001 Annex A Control Name |
|---|---|
| ISO 42001 Annex A Control A.2 | Policies Related to AI |
| ISO 42001 Annex A Control A.3 | Internal Organization |
| ISO 42001 Annex A Control A.4 | Resources for AI Systems |
| ISO 42001 Annex A Control A.5 | Assessing Impacts of AI Systems |
| ISO 42001 Annex A Control A.6 | AI System Life Cycle |
| ISO 42001 Annex A Control A.7 | Data for AI Systems |
| ISO 42001 Annex A Control A.8 | Information for Interested Parties of AI Systems |
| ISO 42001 Annex A Control A.9 | Use of AI Systems |
| ISO 42001 Annex A Control A.10 | Third-Party and Customer Relationships |

Contact ISMS.online for ISO 42001 Compliance

At ISMS.online, we understand the complexities and challenges your organisation faces in achieving ISO 42001 compliance, especially when it comes to the responsible use of AI systems. Our platform is designed to simplify this process, providing you with the tools and resources necessary for effective AI Management Systems (AIMS).

How ISMS.online Supports ISO 42001 Compliance

Our platform offers a comprehensive suite of tools that streamline the documentation, risk assessment, and management review processes essential for ISO 42001 compliance. With ISMS.online, you can easily maintain records of your AI systems’ lifecycle, from development to deployment, ensuring all activities are aligned with ISO standards.

Tools and Resources for AI Management Systems

ISMS.online provides a range of tools and resources tailored for AI Management Systems, including templates for risk assessments, impact analyses, and policy documentation. Our platform facilitates collaboration among team members, enabling efficient management of AI-related tasks and ensuring that your AI systems meet the ethical, transparent, and trustworthy criteria set by ISO 42001.

Why Choose ISMS.online

Choosing ISMS.online for your AI system management and compliance needs means opting for a platform that combines ease of use with comprehensive functionality. Our platform not only helps you achieve compliance with ISO 42001 but also enhances your overall AI governance strategy, making it easier to manage risks, document processes, and engage with stakeholders.

Getting Started with ISMS.online

Getting started with ISMS.online is straightforward, whether you want to enhance your AI governance strategy or begin your journey towards ISO 42001 compliance. Our team is ready to assist you in setting up your account, guiding you through the platform’s features, and providing support whenever you need it. Contact us today to learn more about how we can help your organisation navigate the complexities of ISO 42001 compliance and achieve excellence in AI system management.
