What Is ISO 42001 Annex A Control A.5 – Assessing Impacts of AI Systems

ISO 42001 Annex A Control A.5 is designed to ensure that organisations assess the impacts of AI systems throughout their lifecycle. This control is integral to the broader ISO 42001 framework, which sets out requirements for an AI management system. By focusing on the assessment of AI systems’ impacts, Control A.5 aligns with the framework’s goal of promoting ethical, transparent, and responsible AI use.

Critical Nature of Impact Assessment for Compliance

Assessing the impacts of AI systems is not just a requirement of the standard; it is a critical component of ethical AI management. This process helps organisations identify and mitigate potential negative consequences for individuals, groups, and society at large. Applying Control A.5 helps ensure that AI systems are developed and deployed in a manner that respects human rights and adheres to ethical principles.

Contribution to Ethical AI Management

Control A.5 contributes significantly to ethical AI management by mandating a thorough evaluation of AI systems’ potential impacts. This evaluation encompasses considerations for fairness, accountability, transparency, and the mitigation of bias. By adhering to this control, organisations can demonstrate their commitment to responsible AI practices, fostering trust among users and stakeholders.

Everything you need

for ISO 42001

Manage and maintain your ISO 42001 Artificial Intelligence Management System with ISMS.online

Book a demo

The AI System Impact Assessment Process – A.5.2

Steps Required to Establish a Comprehensive AI Impact Assessment Process

To establish a comprehensive AI impact assessment process, organisations must:

- Identify Triggers: Determine the circumstances under which an AI system impact assessment is necessary. These could include significant changes to the AI system’s purpose or complexity, or to the sensitivity of the data it processes.

- Define the Assessment Elements: Outline the components of the impact assessment process, including identification of sources, events, and outcomes; analysis of consequences and likelihood; evaluation of acceptance decisions; and mitigation measures.

- Assign Responsibilities: Specify who within the organisation will perform the impact assessments, ensuring they have the necessary expertise and authority.

- Utilise the Assessment: Determine how the results will inform the design or use of the AI system, including triggering necessary reviews and approvals.
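The trigger and analysis steps above can be sketched in code. This is a minimal illustration, not something ISO 42001 prescribes: the `SystemChange` fields, the 1 to 5 rating scale, and the score thresholds are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class SystemChange:
    """Hypothetical change record; field names are illustrative only."""
    purpose_changed: bool = False
    complexity_increased: bool = False
    data_sensitivity_increased: bool = False


def assessment_required(change: SystemChange) -> bool:
    """Return True when any of the configured triggers fires."""
    return (
        change.purpose_changed
        or change.complexity_increased
        or change.data_sensitivity_increased
    )


def risk_level(consequence: int, likelihood: int) -> str:
    """Combine 1-5 consequence and likelihood ratings into a coarse level.

    The thresholds (15 and 6) are assumptions for illustration.
    """
    if not (1 <= consequence <= 5 and 1 <= likelihood <= 5):
        raise ValueError("ratings must be between 1 and 5")
    score = consequence * likelihood
    if score >= 15:
        return "high"
    if score >= 6:
        return "medium"
    return "low"
```

In practice an organisation would replace these triggers and thresholds with the criteria set out in its own documented assessment procedure.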

Ensuring a Thorough and Effective Assessment Process

Organisations can ensure their assessment process is thorough and effective by:

- Incorporating Diverse Perspectives: Engage a range of stakeholders, including subject matter experts, users, and potentially impacted individuals, to gain a comprehensive understanding of the AI system’s impacts.

- Adopting a Continuous Improvement Approach: Regularly review and update the assessment process to reflect new insights, technological advancements, and changes in societal norms.

Addressing Potential Consequences for Individuals and Societies

The assessment process addresses potential consequences for individuals and societies by:

- Evaluating impacts on legal positions, life opportunities, physical and psychological well-being, universal human rights, and societal norms.

- Considering specific protection needs of vulnerable groups such as children, the elderly, and workers.

How ISMS.online Helps

At ISMS.online, we provide tools and frameworks that streamline the establishment of an AI system impact assessment process by:

- Offering templates and checklists to guide the identification and documentation of assessment triggers and elements.

- Providing a platform for collaborative engagement with stakeholders, ensuring a diverse range of perspectives is considered.

- Enabling easy documentation, retention, and review of assessment results, supporting continuous improvement and compliance efforts.

By following these steps and leveraging ISMS.online, organisations can establish a robust AI system impact assessment process that not only meets compliance requirements but also contributes to the ethical management of AI technologies.

Manage all your compliance in one place

ISMS.online supports over 100 standards and regulations, giving you a single platform for all your compliance needs.

Documentation of AI System Impact Assessments – A.5.3

Requirements for Documenting AI System Impact Assessments

For organisations navigating the complexities of AI system impact assessments, ISO 42001 Annex A Control A.5.3 mandates the meticulous documentation of assessment outcomes. This documentation should encompass the intended use of the AI system, any foreseeable misuse, the positive and negative impacts identified, and measures taken to mitigate potential failures. Additionally, it should detail the demographic groups affected by the system, its complexity, and the role of human oversight in mitigating negative impacts.
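As a rough sketch, the documentation items listed above could be captured in a structured record with a simple completeness check. The class name, field names, and `missing_fields` helper are hypothetical and do not come from the standard or from any particular platform.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class ImpactAssessmentRecord:
    """Hypothetical record mirroring the items the text says to document."""
    intended_use: str = ""
    foreseeable_misuse: List[str] = field(default_factory=list)
    positive_impacts: List[str] = field(default_factory=list)
    negative_impacts: List[str] = field(default_factory=list)
    mitigation_measures: List[str] = field(default_factory=list)
    affected_groups: List[str] = field(default_factory=list)
    system_complexity: str = ""
    human_oversight: str = ""


def missing_fields(record: ImpactAssessmentRecord) -> List[str]:
    """List the names of fields that are still empty, in declaration order."""
    return [f.name for f in fields(record) if not getattr(record, f.name)]
```

A check like this only confirms that each section has been filled in; whether the content is adequate still needs human review.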

Importance of Retaining Assessment Results

Retaining the results of AI system impact assessments is crucial for organisations to demonstrate compliance with ethical standards and regulatory requirements. This retention supports accountability and transparency, enabling organisations to respond to inquiries and audits effectively. It also plays a vital role in continuous improvement processes, allowing for the review and refinement of AI systems based on past assessments.

Determining the Retention Duration

The duration for which assessment results should be retained varies, influenced by legal requirements, organisational policies, and the specific characteristics of the AI system. Organisations must balance these factors, often opting for retention periods that exceed minimum legal requirements to ensure comprehensive risk management.
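One way to express this balance is to take the longer of the legal minimum and the organisation's own policy period. The sketch below assumes both are given in whole years; the function name and parameters are illustrative, and the actual periods depend on the jurisdiction and the organisation's policy.

```python
from datetime import date


def retention_end(assessed_on: date, legal_min_years: int, policy_years: int) -> date:
    """Return the date until which an assessment should be kept.

    Uses whichever period is longer: the legal minimum or the internal policy.
    """
    years = max(legal_min_years, policy_years)
    try:
        return assessed_on.replace(year=assessed_on.year + years)
    except ValueError:
        # 29 February with a non-leap target year: fall back to 28 February.
        return assessed_on.replace(year=assessed_on.year + years, day=28)
```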

How ISMS.online Supports Compliance

At ISMS.online, we understand the critical nature of documenting and retaining AI system impact assessments. Our platform offers robust documentation capabilities and secure storage solutions, helping your organisation meet ISO 42001 requirements. With features designed to streamline the documentation process and customisable retention schedules, ISMS.online empowers your organisation to maintain compliance efficiently and effectively.

Assessing AI System Impact on Individuals or Groups of Individuals – A.5.4

When conducting AI system impact assessments, it’s imperative to consider the potential effects on individuals and groups. This involves a multifaceted approach that addresses various aspects of fairness, accountability, transparency, and the specific protection needs of vulnerable populations.

Considerations for Assessing AI’s Impact on Individuals

Organisations must evaluate how AI systems might affect the legal position, life opportunities, and the physical or psychological well-being of individuals. This includes assessing the potential for AI systems to impact universal human rights and societal norms. It’s crucial to consider both direct and indirect impacts, ensuring a comprehensive understanding of how AI technologies influence individuals’ lives.

Ensuring Inclusive Assessments

To ensure assessments are inclusive and consider specific protection needs, organisations should:

- Engage with diverse stakeholders, including those from vulnerable groups, to understand their concerns and perspectives.

- Incorporate guidelines that specifically address the needs of children, the elderly, individuals with disabilities, and other groups that might be disproportionately affected by AI systems.

The Role of Human Oversight

Human oversight plays a critical role in mitigating negative impacts. This involves:

- Implementing mechanisms for human intervention in AI system operations, ensuring that decisions can be reviewed and altered by humans when necessary.

- Establishing clear accountability structures, so individuals and teams understand their responsibilities in overseeing AI systems.
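A human intervention mechanism of the kind described above might look like the following sketch, which routes low-confidence or adverse decisions to a reviewer and records who reviewed them. The `Decision` type, the 0.8 threshold, and the `"reject"` outcome label are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Decision:
    """Hypothetical AI decision with a confidence score between 0 and 1."""
    outcome: str
    confidence: float
    reviewed_by: Optional[str] = None


def needs_human_review(
    decision: Decision,
    threshold: float = 0.8,
    adverse_outcomes: Tuple[str, ...] = ("reject",),
) -> bool:
    """Flag decisions that are low-confidence or adverse to the individual."""
    return decision.confidence < threshold or decision.outcome in adverse_outcomes


def apply_review(
    decision: Decision, reviewer: str, new_outcome: Optional[str] = None
) -> Decision:
    """Return a copy recording the reviewer and any override of the outcome."""
    return Decision(
        outcome=new_outcome or decision.outcome,
        confidence=decision.confidence,
        reviewed_by=reviewer,
    )
```

Recording the reviewer on each decision also supports the accountability structures mentioned above, since it leaves a trace of who was responsible for the final outcome.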

Structuring Assessments for Fairness, Accountability, and Transparency

Assessments should be structured to:

- Evaluate how AI systems uphold principles of fairness, ensuring that they do not perpetuate or exacerbate biases.

- Ensure accountability by identifying who is responsible for the AI system’s impacts and establishing mechanisms for addressing any issues that arise.

- Promote transparency by making the criteria and processes used by AI systems understandable to stakeholders, including those impacted by the system’s decisions.
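As one possible input to the fairness evaluation, an assessment could compare how often each group receives a favourable outcome. The sketch below computes per-group selection rates and the gap between the highest and lowest rate (sometimes called the demographic parity difference). It is only one of many fairness metrics and is not mandated by ISO 42001; the function names and the input format are assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute the share of favourable outcomes per group.

    `outcomes` is an iterable of (group, selected) pairs.
    """
    totals: Dict[str, int] = defaultdict(int)
    selected: Dict[str, int] = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def parity_gap(rates: Dict[str, float]) -> float:
    """Difference between the highest and lowest group selection rate."""
    if not rates:
        raise ValueError("at least one group is required")
    return max(rates.values()) - min(rates.values())
```

A large gap does not prove unfair treatment on its own, but it is a signal that the assessment should look more closely at the affected groups.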

At ISMS.online, we provide the tools and guidance necessary for organisations to conduct thorough and inclusive AI system impact assessments. Our platform supports the documentation and management of assessments, helping you address the specific needs of individuals and groups while ensuring compliance with ISO 42001 Annex A Control A.5.

Assessing Societal Impacts of AI Systems – A.5.5

Understanding Societal Impact in the Context of AI Systems

Societal impact refers to the broad effects that AI systems can have on various aspects of society, including environmental sustainability, economic factors, governance, health and safety, and cultural norms. These impacts can be both positive, such as improving access to services, and negative, such as exacerbating inequalities.

Approaches to Assessing Societal Impacts

Organisations can assess the societal impacts of AI systems by:

- Conducting Comprehensive Reviews: This involves evaluating the AI system’s potential effects on environmental sustainability, economic conditions, and social structures.

- Engaging with Stakeholders: Collaborating with a diverse range of stakeholders, including community representatives and subject matter experts, to gain insights into the societal implications of AI systems.

AI Systems’ Influence on Environmental Sustainability and Economic Factors

AI systems can significantly influence environmental sustainability by optimising resource use and reducing waste. Economically, they can impact employment patterns, productivity, and access to financial services. However, they also pose risks such as increasing energy consumption and potentially widening economic disparities.

How ISMS.online Can Help

At ISMS.online, we provide a platform that facilitates the documentation and management of societal impact assessments. Our tools enable you to:

- Document Findings: Easily record the results of societal impact assessments, ensuring they are accessible for review and compliance purposes.

- Manage Stakeholder Engagement: Coordinate stakeholder consultations, capturing diverse perspectives on the societal implications of AI systems.

- Track Mitigation Measures: Monitor the implementation of strategies to address negative societal impacts, supporting continuous improvement.

By leveraging ISMS.online, you’re equipped to conduct thorough societal impact assessments, ensuring your AI systems contribute positively to society while mitigating potential harms.

Continuous Improvement and Learning in AI Impact Assessments

Fostering a Culture of Continuous Learning

Organisations can foster a culture of continuous learning in AI impact assessments by encouraging open dialogue and knowledge sharing among team members. Regular training sessions and workshops can keep staff updated on the latest AI developments and ethical considerations. Additionally, creating a safe environment for employees to express concerns and share insights can drive innovation and ethical awareness in AI projects.

Frameworks Supporting Adaptive and Scalable AI Systems

Adopting frameworks that support adaptive and scalable AI systems is crucial. The PDCA (Plan-Do-Check-Act) cycle is an effective approach for continuous improvement, allowing organisations to plan AI impact assessments, implement them, check the outcomes, and act on the insights gained. This iterative process ensures AI systems remain aligned with ethical standards and organisational goals over time.

Contribution of Continuous Improvement to Ethical AI Deployment

Continuous improvement contributes significantly to ethical AI deployment. By regularly reviewing and updating AI impact assessments, organisations can identify and mitigate new risks, ensuring AI systems continue to operate within ethical boundaries. This ongoing process helps maintain transparency, accountability, and fairness in AI applications.

Enhancing AI Impact Assessments with Feedback Loops

Incorporating feedback loops into AI impact assessments enhances their effectiveness. Feedback from stakeholders, including users, ethicists, and regulatory bodies, provides valuable insights that can inform future assessments. This collaborative approach ensures diverse perspectives are considered, leading to more comprehensive and ethical AI solutions.

At ISMS.online, we understand the importance of continuous improvement and learning in AI impact assessments. Our platform provides the tools and resources you need to implement adaptive frameworks, foster a culture of continuous learning, and integrate feedback loops into your AI impact assessment processes.

Role of Advanced Tools in Streamlining AI Impact Assessments

Streamlining the AI Impact Assessment Process with ISMS.online

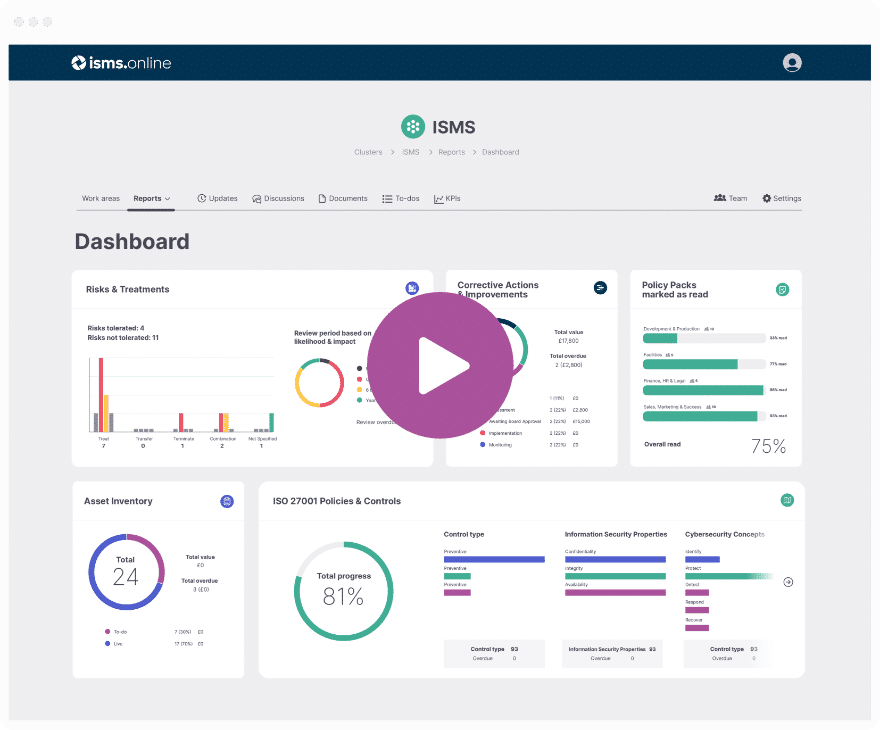

Advanced tools like ISMS.online significantly streamline the AI impact assessment process. By providing a centralised platform, we enable you to efficiently manage and conduct comprehensive assessments. Our system facilitates the organisation, analysis, and reporting of AI impact data, ensuring a seamless workflow from start to finish.

Features Supporting Dynamic Risk Management and Policy Control

ISMS.online comes equipped with features designed for dynamic risk management and policy control, including:

- Automated Risk Assessment Tools: These tools help identify and evaluate the potential risks associated with AI systems, enabling proactive management.

- Policy Management Modules: Our platform allows for the creation, dissemination, and monitoring of policies related to AI ethics and compliance, ensuring that all stakeholders are aligned and informed.

Facilitating Real-Time Compliance Tracking and Documentation

Real-time compliance tracking and documentation are critical components of effective AI impact assessments. ISMS.online provides:

- Compliance Dashboards: Offering real-time insights into your compliance status with ISO 42001 and other relevant standards, helping you stay ahead of requirements.

- Document Management Systems: These systems ensure that all documentation related to AI impact assessments is securely stored, easily accessible, and up to date.

Considerations When Selecting Tools for AI Impact Assessments

When selecting tools for AI impact assessments, consider:

- Scalability: The tool should grow with your organisation and adapt to increasing complexity in AI applications.

- User-Friendliness: Ensure the platform is intuitive and accessible for all team members, regardless of their technical expertise.

- Integration Capabilities: The tool should seamlessly integrate with existing systems and workflows, enhancing rather than disrupting your current operations.

At ISMS.online, we understand the importance of these considerations and have designed our platform to meet the needs of organisations at the forefront of AI deployment and management.

Integrating AI Impact Assessments with Organisational Risk Management

The Role of AI Impact Assessments in Risk Management Strategies

Integrating AI impact assessments into broader risk management strategies is essential for organisations aiming to navigate the complexities of AI deployment responsibly. This integration ensures that the potential risks associated with AI systems, ranging from ethical dilemmas to compliance issues, are identified, evaluated, and mitigated effectively. By embedding AI impact assessments into the risk management framework, organisations can proactively address the multifaceted risks AI technologies pose.

Compliance Officers: Facilitators of Integration

Compliance officers play a pivotal role in this integration process. They are tasked with ensuring that AI impact assessments are conducted in alignment with both internal policies and external regulatory requirements. Their expertise in legal and regulatory standards makes them indispensable in identifying potential compliance risks and guiding the organisation through the mitigation process.

Enhancing Organisational Governance and AI Ethics

The integration of AI impact assessments with risk management enhances organisational governance by promoting transparency, accountability, and ethical decision-making. It ensures that decisions regarding AI systems are made with a comprehensive understanding of their potential impacts, aligning with the organisation’s ethical standards and societal expectations.

Overcoming Challenges in Integration

Organisations may face challenges such as resource constraints, lack of expertise, and resistance to change. To overcome these challenges, it is crucial to foster a culture of continuous learning and improvement. Providing training and resources to staff, leveraging external expertise when necessary, and communicating the value of ethical AI deployment can facilitate smoother integration.

At ISMS.online, we support organisations in this integration process by providing a platform that simplifies the documentation, management, and communication of AI impact assessments. Our tools enable compliance officers to efficiently align AI risk management with broader organisational strategies, ensuring that AI technologies are deployed responsibly and ethically.

Ethical Considerations in AI Impact Assessments

Guiding Ethical Principles for AI Impact Assessments

Ethical principles that should guide AI impact assessments include fairness, accountability, transparency, and respect for privacy and human rights. These principles ensure that AI systems are developed and deployed in a manner that benefits society while minimising harm.

Upholding Universal Human Rights

Organisations can ensure their AI systems uphold universal human rights by incorporating human rights impact assessments into the AI system design and deployment process. This involves evaluating how AI applications affect rights such as privacy, freedom of expression, and non-discrimination, and taking steps to mitigate any negative impacts.

Implications on Privacy and Data Protection

AI systems often process vast amounts of personal data, raising significant privacy and data protection concerns. Organisations must adhere to data protection laws and principles, such as data minimisation and purpose limitation, to protect individuals’ privacy rights. Implementing robust data governance frameworks and ensuring transparency in data processing activities are crucial steps in this direction.

Informing AI System Design and Deployment

Ethical considerations should inform the entire lifecycle of AI systems, from initial design to deployment and beyond. This includes integrating ethical impact assessments into the development process, engaging with stakeholders to understand potential impacts, and continuously monitoring and updating AI systems to address ethical concerns as they arise.

At ISMS.online, we provide the tools and resources you need to integrate ethical considerations into your AI impact assessments. Our platform supports your efforts to document, manage, and communicate the ethical implications of AI systems, ensuring that your organisation remains committed to responsible AI deployment.

Navigating Legal and Regulatory Compliance in AI Impact Assessments

Legal and Regulatory Standards in AI Impact Assessments

In conducting AI impact assessments, it’s crucial to consider a broad spectrum of legal and regulatory standards. These include data protection laws such as the EU’s General Data Protection Regulation (GDPR), which requires transparency and accountability wherever personal data is processed, including by AI systems. Additionally, sector-specific regulations may apply, depending on the AI application area.

Influence of Global Legislation and Standards

Legislation with international reach, such as the EU AI Act, sets a precedent for stringent compliance requirements, categorising AI systems by risk level and imposing corresponding obligations. Such frameworks significantly influence AI impact assessments, which depend on a thorough understanding of the AI system’s classification and the specific requirements that apply.

Challenges in Ensuring Legal Compliance

Compliance officers face the challenge of staying abreast of rapidly evolving AI regulations across different jurisdictions. Ensuring that AI impact assessments address all relevant legal and regulatory requirements demands continuous learning and adaptation. Moreover, interpreting how general principles apply to specific AI applications can be complex.

How ISMS.online Assists in Navigating Legal and Regulatory Landscapes

At ISMS.online, we provide a comprehensive platform that supports compliance officers in navigating the legal and regulatory landscapes of AI. Our platform offers:

- Documentation Tools: Streamline the documentation of AI impact assessments, ensuring all legal and regulatory considerations are thoroughly recorded.

- Regulatory Updates: Stay informed about the latest developments in AI legislation and standards, helping you ensure your assessments remain compliant.

- Collaboration Features: Facilitate collaboration among team members and external experts, enabling a more comprehensive understanding of legal requirements and how they apply to your AI systems.

By leveraging ISMS.online, you’re equipped to meet the challenges of legal and regulatory compliance in AI impact assessments, ensuring your organisation’s AI initiatives are both innovative and compliant.

Practical Challenges and Solutions in Conducting AI Impact Assessments

Common Practical Challenges

Conducting AI impact assessments presents several practical challenges, including resource constraints and cultural barriers. Organisations often face difficulties in allocating sufficient time, budget, and expertise necessary for thorough assessments. Additionally, cultural barriers, such as resistance to change or a lack of understanding of AI technologies, can hinder the assessment process.

Addressing Resource Constraints and Cultural Barriers

To address resource constraints, organisations can prioritise assessments for AI systems with the highest potential impact, ensuring efficient use of available resources. Leveraging external expertise through consultants or partnerships can also supplement internal capabilities. Overcoming cultural barriers requires fostering an organisational culture that values ethical considerations and transparency in AI deployment. This can be achieved through education and training programmes that raise awareness of the importance of AI impact assessments.
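The prioritisation idea can be sketched as a simple ranking: score each AI system for potential impact, then assess the top few that current capacity allows. The `impact_score` field and the `capacity` parameter are hypothetical; how the score is derived is left to the organisation.

```python
from typing import Dict, List, Union

System = Dict[str, Union[str, int]]


def prioritise(systems: List[System], capacity: int) -> List[str]:
    """Return the names of the highest-impact systems, up to `capacity`.

    Each system is a dict with a "name" and a numeric "impact_score".
    Ties keep their original order because Python's sort is stable.
    """
    ranked = sorted(systems, key=lambda s: s["impact_score"], reverse=True)
    return [str(s["name"]) for s in ranked[:capacity]]
```

For example, with capacity for two assessments, a credit-scoring model rated 9 and a chatbot rated 3 would be assessed ahead of a spam filter rated 1.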

The Role of Effective Communication

Effective communication is crucial in overcoming these challenges. Clearly articulating the purpose, process, and benefits of AI impact assessments to all stakeholders can help mitigate resistance and foster collaboration. Regular updates and open channels for feedback encourage stakeholder engagement and contribute to a more inclusive assessment process.

Facilitating Smoother Assessments with Phased Implementation Strategies

Phased implementation strategies can significantly facilitate smoother AI impact assessments. By breaking down the assessment process into manageable stages, organisations can focus on immediate priorities while gradually expanding their assessment capabilities. This approach allows for iterative learning and adjustments, ensuring that the assessment process evolves in line with organisational needs and technological advancements.

At ISMS.online, we understand these challenges and offer solutions to streamline your AI impact assessment process. Our platform provides tools and resources to manage resource constraints, overcome cultural barriers, enhance communication, and implement phased strategies effectively.

ISO 42001 Annex A Controls

| ISO 42001 Annex A Control | ISO 42001 Annex A Control Name |
|---|---|
| ISO 42001 Annex A Control A.2 | Policies Related to AI |
| ISO 42001 Annex A Control A.3 | Internal Organization |
| ISO 42001 Annex A Control A.4 | Resources for AI Systems |
| ISO 42001 Annex A Control A.5 | Assessing Impacts of AI Systems |
| ISO 42001 Annex A Control A.6 | AI System Life Cycle |
| ISO 42001 Annex A Control A.7 | Data for AI Systems |
| ISO 42001 Annex A Control A.8 | Information for Interested Parties of AI Systems |
| ISO 42001 Annex A Control A.9 | Use of AI Systems |
| ISO 42001 Annex A Control A.10 | Third-Party and Customer Relationships |

How ISMS.online Can Support Your Organisation

ISMS.online offers a robust suite of tools and features that facilitate every stage of the AI impact assessment process. From initial planning and documentation to stakeholder engagement and reporting, our platform simplifies the management of comprehensive assessments. We provide a centralised location for all your assessment activities, making it easier to maintain oversight and ensure thoroughness.

Resources and Expertise Offered to Compliance Officers

For compliance officers, ISMS.online is an invaluable resource. We offer detailed guidance on the latest regulations and standards, including ISO 42001, helping you stay up to date. Our platform also includes templates and checklists to streamline the assessment process, alongside analytics tools to monitor compliance status in real time.

Enhancing AI Governance and Compliance

Partnering with ISMS.online enhances your organisation’s AI governance and compliance by providing a structured framework for impact assessments. This structured approach ensures that all potential impacts are considered and addressed, fostering a culture of responsibility and ethical AI use.

Why ISMS.online is the Preferred Solution

ISMS.online is the preferred solution for managing AI impact assessment processes due to its comprehensive features, ease of use, and focus on compliance and governance. Our platform is designed to adapt to your organisation's specific needs, offering scalable solutions that grow with you. By choosing ISMS.online, you're not just adopting a tool; you're partnering with experts dedicated to supporting your success in AI governance and compliance.
