Understanding ISO 42001 Annex A Control A.2 – Policies Related to AI

ISO 42001 Annex A Control A.2 plays a pivotal role in the governance of Artificial Intelligence (AI) within organisations. It sets out the need for a documented AI policy that aligns with business objectives and ethical considerations in AI management, and it comprises three controls: documenting the AI policy (A.2.2), aligning it with other organisational policies (A.2.3), and reviewing it (A.2.4). Together, these controls help organisations implement AI systems responsibly, addressing ethical, legal, and societal concerns.

Purpose of ISO 42001 Annex A Control A.2 in AI Management

The primary purpose of Annex A Control A.2 is to provide a structured approach to AI governance. It emphasises the importance of establishing a comprehensive AI policy that guides the development, deployment, and use of AI systems. This policy acts as a cornerstone for responsible AI governance, ensuring that AI technologies are used in a manner that is ethical, transparent, and aligned with the organisation’s values and objectives.

Ensuring Responsible AI Governance

Responsible AI governance under Annex A Control A.2 is achieved through the formulation of an AI policy that encompasses ethical considerations, bias mitigation, privacy, safety, and compliance with legal and regulatory frameworks. This policy serves as a framework for decision-making and operations related to AI, promoting accountability and transparency in AI systems.

Key Components of an Effective AI Policy under ISO 42001

An effective AI policy under ISO 42001 includes:

- Ethical Principles: Guidelines that ensure AI technologies are developed and used ethically.

- Compliance Measures: Adherence to legal and regulatory requirements.

- Risk Management Strategies: Identification, assessment, and mitigation of AI-related risks.

- Transparency and Accountability Mechanisms: Clear documentation and reporting structures for AI decisions and processes.

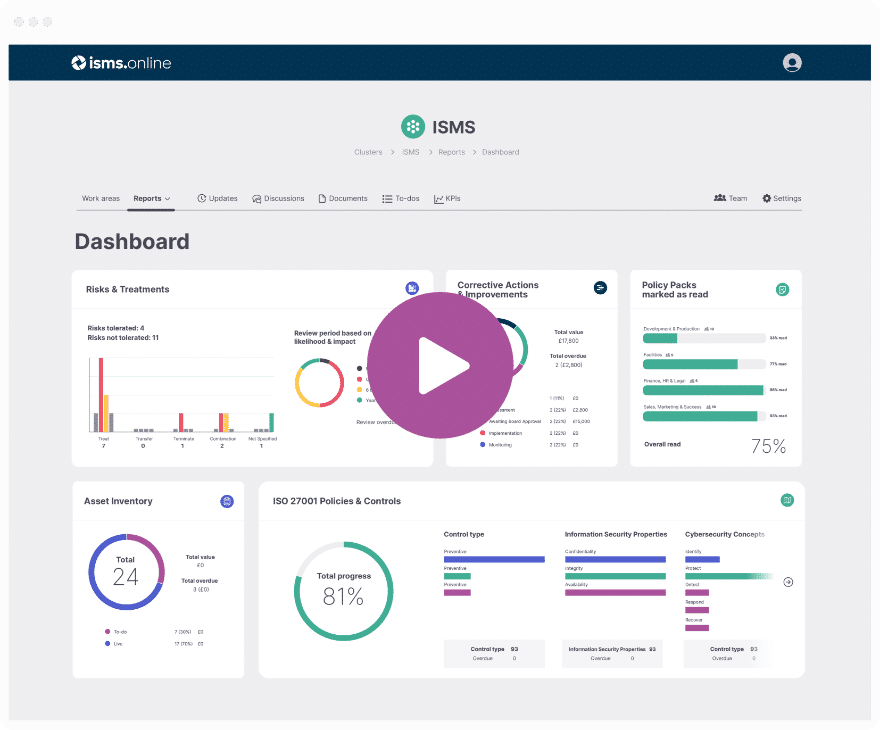

How We Facilitate Compliance with Annex A Control A.2

At ISMS.online, we provide an integrated platform that simplifies the development, documentation, and management of AI policies in compliance with ISO 42001. Our platform offers tools for risk assessment, policy documentation, and stakeholder engagement, making it easier for organisations to align their AI governance practices with the requirements of Annex A Control A.2. Through our services, organisations can ensure their AI policies are comprehensive, up-to-date, and effectively implemented, fostering responsible AI governance.

Documenting an AI Policy – A.2.2

Requirements for Documenting an AI Policy

An AI policy must be a comprehensive document that outlines the organisation’s approach to developing or using AI systems. It should reflect the organisation’s business strategy, values, and the level of risk it is willing to accept. The policy must address legal requirements, the risk environment, and the impact on interested parties. It is essential that this policy includes principles guiding AI activities and processes for handling deviations and exceptions.

Incorporating Principles Guiding AI Activities

The AI policy should articulate principles that guide all activities related to AI within the organisation. These principles must align with organisational values and culture, ensuring that AI systems are developed and used responsibly. The policy should also detail processes for managing deviations from these principles, ensuring a clear path for addressing exceptions.

Establishing Processes for Handling Deviations and Exceptions

A robust AI policy outlines clear processes for handling deviations from established principles and exceptions to policy guidelines. This includes mechanisms for reporting deviations, evaluating their impact, and implementing corrective actions. Such processes ensure that the organisation can maintain compliance with its AI policy and adapt to unforeseen challenges.
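To make the reporting, evaluation, and corrective-action steps more concrete, here is a minimal Python sketch of a deviation record with a constrained lifecycle. It is purely illustrative: the `Deviation` class, the status names, and the allowed transitions are hypothetical choices, not something ISO 42001 prescribes. The point it shows is that a deviation cannot be closed without first being evaluated, and every step leaves a note behind.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    REPORTED = "reported"
    EVALUATED = "evaluated"
    CORRECTED = "corrected"
    CLOSED = "closed"


# Hypothetical lifecycle: a deviation must be evaluated before it can be
# corrected, or closed directly if it is approved as a documented exception.
ALLOWED_TRANSITIONS = {
    Status.REPORTED: {Status.EVALUATED},
    Status.EVALUATED: {Status.CORRECTED, Status.CLOSED},
    Status.CORRECTED: {Status.CLOSED},
    Status.CLOSED: set(),
}


@dataclass
class Deviation:
    description: str
    principle: str  # the AI policy principle that was not followed
    status: Status = Status.REPORTED
    history: list = field(default_factory=list)

    def advance(self, new_status: Status, note: str) -> None:
        """Move to new_status if the lifecycle allows it, keeping an audit note."""
        if new_status not in ALLOWED_TRANSITIONS[self.status]:
            raise ValueError(
                f"cannot move from {self.status.value} to {new_status.value}"
            )
        self.history.append((self.status.value, new_status.value, note))
        self.status = new_status


# Example: a deviation is reported, evaluated, corrected, and closed.
d = Deviation("Model retrained without the required bias audit", principle="fairness")
d.advance(Status.EVALUATED, "Impact assessed as medium")
d.advance(Status.CORRECTED, "Bias audit completed; model re-approved")
d.advance(Status.CLOSED, "Closed by the AI governance lead")
print(d.status.value)  # closed
```

Attempting to jump straight from "reported" to "closed" raises an error, which mirrors the policy requirement that deviations are evaluated before they are resolved.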

Streamlining the Documentation Process with ISMS.online

At ISMS.online, we understand the complexities involved in documenting an AI policy. Our platform offers tools and resources to streamline this process, making it easier for organisations to develop, implement, and manage their AI policies. With ISMS.online, you can ensure that your AI policy is comprehensive, compliant, and aligned with ISO 42001 Annex A Control A.2 requirements.

Everything you need for ISO 42001

Manage and maintain your ISO 42001 Artificial Intelligence Management System with ISMS.online

Aligning AI Policy with Organisational Policies – A.2.3

Ensuring AI Policy Complements Organisational Goals

An effective AI policy does not exist in isolation; it must align with your organisation’s broader objectives and existing policies. This alignment ensures that AI initiatives support and enhance overall business strategies rather than contradicting or undermining them. Considerations for alignment include evaluating how AI can drive business goals, adhere to organisational values, and integrate with existing operational, security, and privacy policies.

Assessing the Impact of AI Systems on Existing Policies

To ensure that your AI policy complements existing policies, a thorough assessment of the impact of AI systems on these policies is crucial. This involves identifying areas where AI initiatives intersect with domains such as quality management, data protection, and employee welfare. By understanding these intersections, you can update existing policies or create provisions within your AI policy to address these overlaps effectively.

Leveraging ISMS.online for Policy Alignment

At ISMS.online, we provide tools and frameworks that facilitate the alignment of your AI policy with organisational objectives and existing policies. Our platform enables you to map out how AI initiatives align with your business strategy, assess the impact on existing policies, and ensure that your AI policy is integrated seamlessly into your organisational ecosystem. With ISMS.online, you can maintain coherence across all policy domains, ensuring that your AI initiatives are both effective and compliant.

Review of the AI Policy – A.2.4

Triggers for Reviewing the AI Policy

The need to review your AI policy can be triggered by several factors, including changes in legal requirements, technological advancements, shifts in organisational goals, or the emergence of new risks associated with AI systems. Additionally, feedback from stakeholders or lessons learned from the implementation of the AI policy may also necessitate a review to ensure its continued relevance and effectiveness.

Frequency of AI Policy Review

Control A.2.4 requires the AI policy to be reviewed at planned intervals (typically annually) and additionally as needed, to ensure its continuing suitability, adequacy, and effectiveness. Additional reviews are prudent in response to significant changes in the operating environment or legal landscape, or after a security incident involving AI systems.
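The combination of planned-interval and event-triggered reviews described above can be sketched as a simple check. This is a minimal illustration under stated assumptions: the 365-day interval reflects the "typically annually" guidance, while the `TRIGGER_EVENTS` names and the `review_reasons` function are hypothetical and would need to match your own change and incident records.

```python
from datetime import date, timedelta

# Hypothetical planned interval; the text above suggests an annual review.
REVIEW_INTERVAL = timedelta(days=365)

# Hypothetical event types that should prompt an additional, unscheduled review.
TRIGGER_EVENTS = {
    "legal_change",
    "new_ai_risk",
    "strategy_change",
    "ai_security_incident",
    "stakeholder_feedback",
}


def review_reasons(
    last_review: date, today: date, events: list[tuple[date, str]]
) -> list[str]:
    """Return the reasons the AI policy is due for review (an empty list means none)."""
    reasons = []
    if today - last_review >= REVIEW_INTERVAL:
        reasons.append("planned interval elapsed")
    for event_date, kind in events:
        # Only trigger events recorded after the last review count.
        if event_date > last_review and kind in TRIGGER_EVENTS:
            reasons.append(f"{kind} on {event_date.isoformat()}")
    return reasons


print(review_reasons(date(2024, 3, 1), date(2024, 9, 1),
                     [(date(2024, 6, 10), "legal_change")]))
# ['legal_change on 2024-06-10']
```

Returning reasons rather than a bare yes/no makes it easier to record why a review was started, which supports the management approval step described next.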

Management’s Role in the Review Process

Management plays a crucial role in the review and approval process of the AI policy. This includes ensuring that the policy remains aligned with the organisation’s strategic objectives, approving changes to the policy, and endorsing the allocation of resources necessary for the implementation of any amendments. Management’s commitment to the review process is essential for maintaining the policy’s relevance and effectiveness.

Leveraging ISMS.online for Efficient Policy Review

At ISMS.online, we provide a comprehensive platform that simplifies the management of the AI policy review cycle. Our tools enable you to schedule reviews, track changes, and document management approvals efficiently. With ISMS.online, you can ensure that your AI policy remains up-to-date, compliant, and aligned with both internal and external requirements, thereby enhancing your organisation’s AI governance framework.

Manage all your compliance in one place

ISMS.online supports over 100 standards and regulations, giving you a single platform for all your compliance needs.

Legal and Regulatory Considerations in AI Policy Development

Influence of Legal Requirements on AI Policies

Legal requirements significantly shape the development of AI policies. They set the conditions under which AI systems may be developed, deployed, and used, covering areas such as data protection, privacy, intellectual property rights, and non-discrimination. Adhering to these requirements is crucial for mitigating risks and ensuring ethical AI use.

Key Legal and Regulatory Frameworks

Several key legal and regulatory frameworks must be considered when developing an AI policy. These include the General Data Protection Regulation (GDPR) for data privacy and protection; the EU AI Act, which takes a risk-based approach by prohibiting certain AI practices and imposing specific obligations on high-risk AI systems; and various national laws that regulate AI deployment and use. Compliance officers need to be familiar with these frameworks to ensure that AI policies align with legal standards.

Ensuring Compliance with Applicable Laws

To ensure AI policy compliance with applicable laws, organisations should conduct thorough legal assessments. This involves identifying relevant legal requirements and integrating them into the AI policy. Regular updates and reviews of the policy are necessary to adapt to evolving legal landscapes. At ISMS.online, we provide tools and resources to help you navigate these complexities, ensuring your AI policy remains compliant.

Challenges in Aligning AI Policies with Legal Standards

Compliance officers face challenges in keeping pace with rapidly evolving AI technologies and corresponding legal standards. Balancing innovation with compliance, interpreting ambiguous legal requirements, and ensuring cross-jurisdictional compliance are significant hurdles. Effective communication with legal experts and continuous education on AI and legal developments are strategies to overcome these challenges.

Risk Management Strategies within AI Policies

Addressing Risk Management in AI Policies

AI policies must incorporate a structured approach to risk management, identifying potential risks associated with AI systems and outlining strategies for their mitigation. This involves assessing risks across various dimensions, including ethical considerations, data privacy, security vulnerabilities, and potential biases. By prioritising risk management, organisations can ensure that AI systems are developed and deployed responsibly, minimising adverse impacts on individuals and society.

Systematic Approach to Identifying and Mitigating AI Risks

A systematic approach to risk management involves four key steps: risk identification, risk analysis, risk evaluation, and risk treatment. First, potential risks are identified through a comprehensive assessment of the AI system’s design, development, and deployment phases. Next, each risk is analysed to understand its potential impact and likelihood. Risk evaluation then compares these results against the organisation’s risk criteria to decide which risks need treatment and in what order, so that mitigation efforts are focused where they are most needed. Finally, appropriate treatment options, ranging from risk avoidance to risk acceptance, are selected and implemented, with continuous monitoring to assess their effectiveness.

Prioritising Risks in AI Policy

Prioritising risks within an AI policy requires a balanced approach that considers both the severity of potential impacts and the likelihood of their occurrence. High-impact risks that could result in significant harm to individuals or have legal implications should be addressed as a priority. This prioritisation ensures that resources are allocated effectively, focusing on mitigating the most critical risks first.
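The analysis and prioritisation steps can be illustrated with a small risk register. This is a minimal sketch, assuming 1 to 5 scales for likelihood and impact and the common qualitative heuristic score = likelihood × impact; ISO 42001 does not mandate this formula, and the `ACCEPTANCE_THRESHOLD` value and example risks are hypothetical. Ties are broken by impact so that high-impact risks come first, in line with the paragraph above.

```python
from dataclasses import dataclass


@dataclass
class AIRisk:
    name: str
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (almost certain)
    impact: int      # hypothetical scale: 1 (negligible) to 5 (severe harm or legal exposure)

    def __post_init__(self):
        for value in (self.likelihood, self.impact):
            if not 1 <= value <= 5:
                raise ValueError("likelihood and impact must be between 1 and 5")

    @property
    def score(self) -> int:
        # A common qualitative heuristic: risk score = likelihood x impact.
        return self.likelihood * self.impact


# Hypothetical risk criterion: risks scoring below this may be accepted.
ACCEPTANCE_THRESHOLD = 8


def prioritise(risks: list[AIRisk]) -> list[tuple[str, int, str]]:
    """Rank risks by score, breaking ties by impact, and flag those needing treatment."""
    ranked = sorted(risks, key=lambda r: (r.score, r.impact), reverse=True)
    return [
        (r.name, r.score, "treat" if r.score >= ACCEPTANCE_THRESHOLD else "accept")
        for r in ranked
    ]


register = [
    AIRisk("Discriminatory outputs from hiring model", likelihood=3, impact=5),
    AIRisk("Chatbot gives outdated product information", likelihood=4, impact=2),
    AIRisk("Personal data exposed via training set", likelihood=2, impact=5),
    AIRisk("Slow response times in internal tool", likelihood=2, impact=1),
]
for row in prioritise(register):
    print(row)
```

In practice the scales, threshold, and treatment options come from the organisation's own risk criteria; the sketch only shows how those criteria turn into an ordered list of risks to address.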

Tools Offered by ISMS.online for Effective AI Risk Management

At ISMS.online, we provide a suite of tools designed to support effective AI risk management. Our platform facilitates the entire risk management process, from risk identification and analysis to treatment and monitoring. With features such as risk assessment templates, risk registers, and dynamic reporting capabilities, ISMS.online enables organisations to implement robust risk management strategies within their AI policies. By leveraging our tools, you can ensure that your organisation’s approach to AI risk management is comprehensive, systematic, and aligned with best practices.

Transparency and Accountability in AI Systems

Transparency and accountability are foundational pillars in the governance of AI systems. They not only foster trust among users and stakeholders but also ensure that AI systems are used ethically and responsibly. At ISMS.online, we recognise the importance of these principles and offer guidance on implementing mechanisms to uphold them.

Mechanisms for Transparency

To ensure AI systems are transparent, organisations should adopt clear documentation practices that describe the AI system’s design, development, and deployment processes. This includes providing accessible explanations of the algorithms used, data sources, and decision-making processes. Additionally, implementing audit trails that record decisions made by AI systems can further enhance transparency.
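As an illustration of an audit trail for AI decisions, the sketch below keeps an append-only log in which each entry stores the hash of the previous one, so that altering or deleting an earlier entry breaks the chain. Hash chaining is a technique chosen here for illustration; the `DecisionLog` class and its field names (including `accountable_owner`, which ties each decision to a named team) are hypothetical. Removing the most recent entry would not be detected by this check alone, so a real deployment would also rely on write-once storage or external anchoring.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class DecisionLog:
    """Append-only, hash-chained log of AI decisions (illustrative sketch)."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the entry's own hash, in a stable key order.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def record(self, system, model_version, inputs, output, owner, timestamp=None):
        entry = {
            "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
            "system": system,
            "model_version": model_version,
            # Store a digest rather than raw inputs to limit personal data in the log.
            "input_digest": hashlib.sha256(
                json.dumps(inputs, sort_keys=True).encode()
            ).hexdigest(),
            "output": output,
            "accountable_owner": owner,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return False if any entry was altered or an earlier entry was removed."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True


log = DecisionLog()
log.record("credit-scoring", "v1.2", {"income_band": "B", "region": "North"},
           "declined", owner="Credit Risk team")
log.record("credit-scoring", "v1.2", {"income_band": "A", "region": "South"},
           "approved", owner="Credit Risk team")
print(log.verify())  # True
```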

Establishing Clear Accountability for AI Decisions

Accountability in AI systems requires clear delineation of roles and responsibilities among those involved in the AI system’s lifecycle. This involves assigning responsibility for the outcomes of AI decisions to specific individuals or teams and ensuring that there are processes in place for addressing any issues or grievances that arise from AI decisions.

The Role of Documentation

Documentation plays a crucial role in promoting transparency and accountability. Comprehensive documentation of AI systems, including their design, data inputs, and decision-making processes, provides a basis for understanding and evaluating these systems. It also serves as a reference point for accountability, enabling organisations to identify and address any issues effectively.

Balancing Transparency and Operational Efficiency

Balancing transparency and accountability with operational efficiency requires a strategic approach. Organisations should aim to integrate transparency and accountability measures into their AI systems in a way that does not impede their functionality or performance. This can be achieved by leveraging technologies and practices that automate documentation and audit trails, thereby minimising the additional workload on teams.

At ISMS.online, we are committed to helping you navigate the complexities of implementing transparency and accountability in your AI systems. Our platform offers tools and resources designed to streamline these processes, ensuring that your organisation can achieve compliance with ISO 42001 Annex A Control A.2 while maintaining operational efficiency.

Addressing Privacy and Safety Concerns in AI Policies

Tackling Privacy and Data Protection Issues

AI policies must prioritise privacy and data protection to safeguard user information effectively. This involves adhering to legal frameworks such as the General Data Protection Regulation (GDPR) and applying the principle of data minimisation. Anonymising or pseudonymising the data used by AI systems where possible can significantly reduce privacy risks. Additionally, conducting regular privacy impact assessments helps identify potential vulnerabilities and mitigate them proactively.
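Data minimisation and pseudonymisation can be combined in a single preprocessing step before data reaches an AI system. The sketch below is a minimal example under stated assumptions: the field lists are hypothetical, and keyed hashing (HMAC-SHA256 from Python's standard `hmac` module) is one common way to produce consistent pseudonyms. Note that pseudonymised data is still personal data under the GDPR, because whoever holds the key can re-link it; the key therefore needs to be stored separately and access-controlled.

```python
import hashlib
import hmac
import secrets

# Hypothetical: the only fields this AI use case actually needs (data minimisation).
REQUIRED_FIELDS = {"customer_id", "age_band", "region", "purchase_total"}
# Hypothetical: direct identifiers to replace with pseudonyms.
IDENTIFIER_FIELDS = {"customer_id"}


def pseudonymise(record: dict, key: bytes) -> dict:
    """Keep only required fields and replace identifiers with keyed-hash pseudonyms."""
    out = {}
    for name in REQUIRED_FIELDS:
        if name not in record:
            continue
        value = record[name]
        if name in IDENTIFIER_FIELDS:
            # Same value + same key -> same pseudonym, so records remain linkable,
            # but the original value cannot practically be recovered without the key.
            value = hmac.new(key, str(value).encode(), hashlib.sha256).hexdigest()
        out[name] = value
    return out


key = secrets.token_bytes(32)  # in practice, held in a separate, access-controlled key store
raw = {
    "customer_id": "C-1042",
    "name": "Jane Doe",
    "email": "jane@example.com",
    "age_band": "35-44",
    "region": "North",
    "purchase_total": 129.5,
}
print(pseudonymise(raw, key))
```

Name and email are dropped entirely because the use case does not need them, while the customer identifier is kept only in pseudonymised form.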

Essential Safety Measures for AI Systems

Safety measures are paramount to prevent harm from AI systems. This includes implementing robust security protocols to protect against unauthorised access and ensuring AI systems are resilient against attacks. Regular security audits and penetration testing can help identify and address vulnerabilities. Moreover, establishing clear guidelines for human oversight of AI decisions is crucial to intervene if AI systems behave unpredictably or make harmful decisions.

Safeguarding User Data

To safeguard user data, organisations should employ encryption for data at rest and in transit. Access controls and authentication mechanisms must be stringent, ensuring only authorised personnel can access sensitive information. Transparent data handling practices, coupled with user consent for data collection and use, further enhance trust and compliance.

Best Practices for Enhancing Privacy and Safety

Adopting a privacy-by-design approach ensures that privacy and safety considerations are integrated at every stage of AI system development. Regular training for staff on data protection and privacy laws keeps everyone informed about their responsibilities. Collaboration with privacy and security experts can provide additional insights into best practices and emerging threats. At ISMS.online, we support organisations in implementing these best practices through comprehensive tools and resources, facilitating compliance with ISO 42001 Annex A Control A.2 and enhancing the privacy and safety of AI systems.

Bias Identification and Mitigation Strategies

Identifying Biases in AI Systems

To identify biases in AI systems, organisations can employ a variety of strategies, including thorough audits of AI algorithms and datasets. Regularly reviewing and testing AI outputs against diverse datasets can also reveal hidden biases. At ISMS.online, we advocate for the use of automated tools that can systematically scan for and highlight potential biases within AI systems.
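One simple way to test outputs for bias is to compare favourable-outcome rates across groups. The sketch below computes each group's rate and its ratio to the highest-rate group; one widely used heuristic, the "four-fifths rule" from US employment-selection guidance, treats a ratio below 0.8 as a signal for further investigation. The threshold, group labels, and data are hypothetical, this is not an ISO 42001 requirement, and no single metric can establish that a system is fair.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """outcomes: iterable of (group, favourable) pairs; returns the favourable rate per group."""
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for group, is_favourable in outcomes:
        totals[group] += 1
        favourable[group] += int(is_favourable)
    return {group: favourable[group] / totals[group] for group in totals}


def disparate_impact(outcomes, threshold=0.8):
    """Compare each group's rate with the highest rate and flag ratios below the threshold."""
    rates = selection_rates(outcomes)
    if not rates:
        return {}
    best = max(rates.values())
    report = {}
    for group, rate in rates.items():
        ratio = rate / best if best > 0 else 1.0
        report[group] = {"rate": rate, "ratio": ratio, "flagged": ratio < threshold}
    return report


# Hypothetical test results for a screening model, split by applicant group.
results = ([("A", True)] * 60 + [("A", False)] * 40
           + [("B", True)] * 42 + [("B", False)] * 58)
for group, r in disparate_impact(results).items():
    print(group, round(r["rate"], 2), round(r["ratio"], 2), r["flagged"])
# A 0.6 1.0 False
# B 0.42 0.7 True
```

A flagged group is a prompt for investigation under the policy's bias-audit process, not an automatic verdict that the system is biased.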

Facilitating Bias Mitigation Through AI Policies

AI policies play a crucial role in bias mitigation by establishing clear guidelines for the design, development, and deployment of AI systems. These policies should mandate regular bias audits, the use of diverse datasets in training AI, and the implementation of corrective measures when biases are detected. Incorporating accountability mechanisms within the policy ensures that bias mitigation is taken seriously across all levels of the organisation.

The Role of Data Quality and Diversity

Data quality and diversity are fundamental to bias mitigation. High-quality, diverse datasets help ensure that AI systems are exposed to a wide range of scenarios and perspectives, reducing the risk of biased outcomes. Organisations should prioritise the collection and use of datasets that accurately reflect the diversity of the real world.

Cultivating a Culture of Continuous Bias Awareness

Creating a culture of continuous bias awareness and correction involves educating staff about the importance of diversity and inclusion in AI development. Encouraging open discussions about biases and their impacts can foster an environment where everyone feels responsible for identifying and addressing biases. At ISMS.online, we support organisations in developing training programmes and resources that promote an ongoing commitment to bias mitigation.

Stakeholder Engagement and Impact Assessment

Engaging stakeholders in AI impact assessments is crucial for developing AI policies that are both effective and ethically sound. At ISMS.online, we emphasise the importance of incorporating diverse perspectives to ensure comprehensive assessments.

Effective Engagement of Stakeholders

To effectively engage stakeholders, it’s essential to identify all parties affected by AI systems, including end-users, employees, and external partners. Transparent communication and inclusive consultation processes can facilitate meaningful participation. Providing platforms for feedback and incorporating stakeholder insights into AI policy development are key strategies we advocate for.

Methodologies for Comprehensive AI System Impact Assessments

A variety of methodologies can be employed for AI system impact assessments. These include risk assessment frameworks that evaluate potential negative outcomes, ethical impact assessments focusing on moral implications, and privacy impact assessments examining data handling practices. Utilising a combination of these methodologies ensures a holistic evaluation of AI systems.

Informing AI Policy Development with Impact Assessment Results

The results from impact assessments are invaluable for informing AI policy development. They highlight areas requiring attention, such as ethical considerations, privacy concerns, and potential biases. By integrating these findings into AI policies, organisations can address risks proactively and align AI practices with ethical standards.

Balancing Stakeholder Interests and AI Innovation

Organisations often face challenges in balancing stakeholder interests with the drive for AI innovation. Prioritising transparency and ethical considerations while fostering innovation requires a delicate balance. At ISMS.online, we support organisations in navigating these challenges, ensuring that AI policies reflect a commitment to responsible AI development and use.

Continuous Learning and Improvement in AI Management

Fostering a Culture of Continuous Learning

For organisations to stay ahead in the rapidly evolving field of AI, fostering a culture of continuous learning is essential. This involves encouraging employees to stay informed about the latest AI developments, ethical considerations, and regulatory changes. At ISMS.online, we support this by providing access to a wealth of resources and training materials that cover the latest trends and best practices in AI management.

Mechanisms for Ongoing AI Policy Improvement

Implementing mechanisms for ongoing AI policy improvement is crucial for adapting to technological advancements and changing regulatory landscapes. This can include regular policy review cycles, incorporating feedback from AI system audits, and benchmarking against industry standards. Our platform facilitates these processes, making it easier for you to keep your AI policies up-to-date and effective.

Establishing Feedback Loops

Feedback loops are vital for informing AI policy updates. They can be established through stakeholder surveys, user feedback on AI system performance, and incident reporting mechanisms. This feedback provides valuable insights into areas for improvement and helps ensure that AI policies remain aligned with organisational objectives and ethical standards.

Supporting Continuous Improvement Processes with ISMS.online

At ISMS.online, we understand the importance of continuous improvement in AI management. Our platform offers tools and features designed to support your organisation’s continuous learning and improvement efforts. From policy management to stakeholder engagement tools, we provide the resources you need to ensure your AI management practices remain at the forefront of industry standards and regulatory compliance.

ISO 42001 Annex A Controls

| ISO 42001 Annex A Control | ISO 42001 Annex A Control Name |
|---|---|
| ISO 42001 Annex A Control A.2 | Policies Related to AI |
| ISO 42001 Annex A Control A.3 | Internal Organization |
| ISO 42001 Annex A Control A.4 | Resources for AI Systems |
| ISO 42001 Annex A Control A.5 | Assessing Impacts of AI Systems |
| ISO 42001 Annex A Control A.6 | AI System Life Cycle |
| ISO 42001 Annex A Control A.7 | Data for AI Systems |
| ISO 42001 Annex A Control A.8 | Information for Interested Parties of AI Systems |
| ISO 42001 Annex A Control A.9 | Use of AI Systems |
| ISO 42001 Annex A Control A.10 | Third-Party and Customer Relationships |

How ISMS.online Assists in Achieving ISO 42001 Compliance

At ISMS.online, we understand the complexities involved in achieving compliance with ISO 42001, especially when it comes to the nuanced area of AI policy development and management. Our platform is designed to simplify this process, providing you with a comprehensive suite of tools and resources tailored to meet the specific requirements of ISO 42001.

Tools and Resources for Effective AI Policy Development

We offer a range of tools that facilitate the documentation, implementation, and management of AI policies. These include customisable templates that align with ISO 42001 requirements, workflow automation to streamline policy review and approval processes, and secure collaboration spaces for engaging with stakeholders. Our resources are designed to ensure that your AI policies are comprehensive, compliant, and effectively implemented.

Streamlining AI Management System Implementation

Partnering with ISMS.online can significantly streamline your AI management system implementation. Our platform integrates seamlessly with existing organisational systems, enabling you to manage AI policies, risk assessments, and compliance activities in one centralised location. This integration simplifies the management of complex AI systems, ensuring consistency and efficiency across all AI governance activities.

Choosing ISMS.online for AI Governance and Compliance Needs

Choosing ISMS.online for your AI governance and compliance needs means opting for a platform that combines ease of use with robust functionality. Our commitment to supporting organisations in navigating the challenges of AI governance and ISO 42001 compliance sets us apart. With ISMS.online, you're not just adopting a platform; you're gaining a partner dedicated to ensuring your success in the evolving landscape of AI management and governance.
