Understanding ISO 42001 AI Policy Requirements

An AI policy under ISO 42001 serves as the cornerstone for guiding an organisation’s approach to AI system management, encapsulating the organisation’s commitment to ethical, secure, and transparent AI use. It ensures that AI technologies are leveraged responsibly and in alignment with both internal objectives and external regulations, providing management direction and a clear framework for decision-making and operational processes related to AI systems (Requirement 5.2).

Aligning AI Policies with ISO 42001

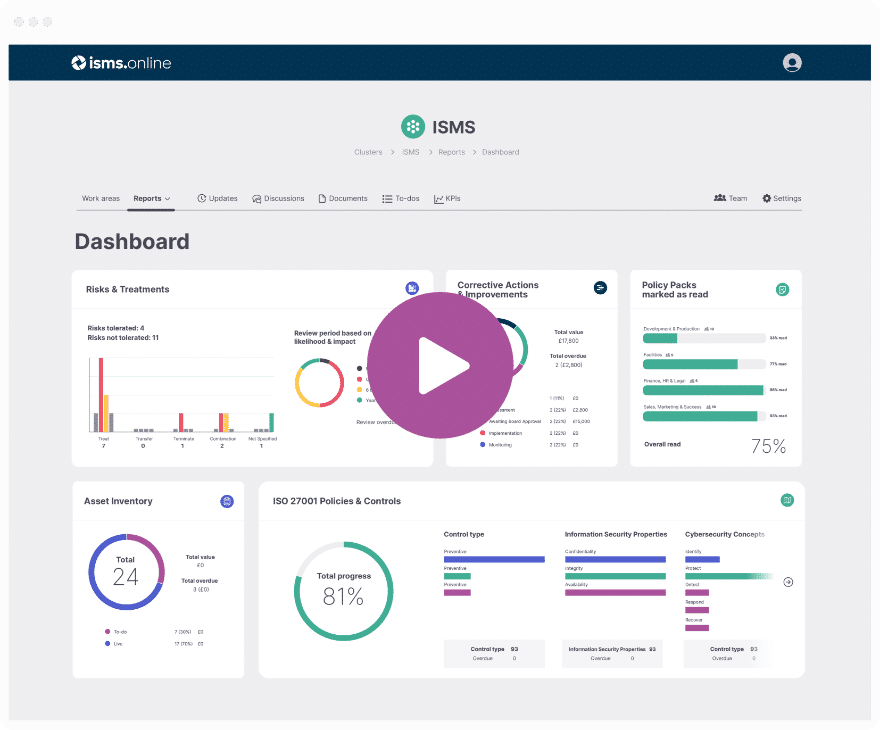

ISMS.online offers a platform to support organisations in developing their AI policies and aligning them with the ISO 42001 standard. By following the platform’s structured approach, organisations can ensure their AI policies address all necessary components, such as data resources (A.4.3), risk management (Requirement 6.1), and fairness and other ethical considerations (C.2.5). ISMS.online also facilitates the integration of these policies into the broader AI management system and with other management systems, promoting consistency across the organisation (D.2).

Ensuring Ethical, Secure, and Transparent AI Use

The AI policy is crucial for establishing a governance structure that prioritises ethical considerations (C.2.5), privacy and data protection (C.2.7), and transparency (C.2.11). It outlines the principles and commitments that govern AI system development and use, ensuring that AI technologies are deployed in a manner that respects privacy, prevents bias (C.2.5), and maintains user trust through clear information for users (A.8.2). Through ISMS.online, organisations can document these principles, track compliance, and communicate the policy's importance across all levels of the organisation, fostering a culture of responsible AI use (Requirement 5.1). This is in line with:

- Requirement 5.1: Communicating the importance of effective AI management and of conforming to the AI management system requirements.

- A.3.2: Defining and allocating roles and responsibilities for AI within the organisation.

- B.3.2: Ensuring that roles and responsibilities for AI are defined and allocated according to the needs of the organisation.

- C.2.11: Ensuring transparency in AI system operations and providing explanations of important factors influencing system results.

- D.2: Aligning the AI management system with other relevant management systems, such as information security and privacy, to ensure a holistic approach to governance, risk, and compliance.

Crafting an AI Policy and Aligning Organisational Goals

An AI policy must be a reflection of your organisation’s overarching goals and values, acting as a compass to guide the development and deployment of AI technologies in a manner that aligns with the strategic direction and ethical standards of your organisation (Requirement 5.2). It should be established by top management and provide a framework for setting AI objectives that are consistent with the organisation’s strategic direction, including commitments to meet applicable requirements and to continual improvement of the AI management system (Requirement 5.2).

Reflecting the Organisation’s Purpose

Your AI policy should encapsulate the organisation’s mission, supporting its core objectives while fostering innovation and ethical AI use. It must resonate with the organisational ethos, ensuring that every AI initiative undertaken is a step towards fulfilling the broader organisational goals (Requirement 4.1). The organisation should determine external and internal issues relevant to its purpose and that affect its ability to achieve the intended result(s) of its AI management system (Requirement 4.1).

Framework for Setting AI Objectives

The framework for setting AI objectives, which the AI policy must provide, should be comprehensive, incorporating a commitment to continual improvement and to meeting applicable legal and regulatory requirements (Requirement 5.2). This framework should be flexible enough to adapt to the evolving AI landscape while remaining robust in its core principles. The organisation shall establish AI objectives at relevant functions and levels, ensuring they are consistent with the AI policy, measurable (where practicable), monitored, communicated, and updated as appropriate (Requirement 6.2).
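As an illustration of what "measurable, monitored and updated" can look like in practice, the following is a minimal Python sketch of an objectives register. It is not an ISMS.online feature and is not prescribed by ISO 42001: the `AIObjective` class, its fields, the example targets, and the `status_report` helper are all hypothetical, chosen only to show each objective carrying a measurable target, an owner at a relevant function, and a review date.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class AIObjective:
    """Hypothetical register entry for one AI objective (field names are illustrative)."""
    name: str
    function: str           # function or level at which the objective is set
    target: float           # measurable target value
    higher_is_better: bool  # direction in which the target is judged
    owner: str              # person accountable for monitoring the objective
    review_by: date         # date by which the objective should next be reviewed

    def is_met(self, measured: float) -> bool:
        # Compare a monitored measurement against the target in the right direction.
        if self.higher_is_better:
            return measured >= self.target
        return measured <= self.target


def status_report(objectives, measurements, today):
    """Return one status line per objective, flagging missing data and overdue reviews."""
    lines = []
    for obj in objectives:
        value = measurements.get(obj.name)
        if value is None:
            state = "NO DATA"
        elif obj.is_met(value):
            state = "MET"
        else:
            state = "NOT MET"
        overdue = " (review overdue)" if today > obj.review_by else ""
        lines.append(f"{obj.name} [{obj.function}]: {state}{overdue}")
    return lines


# Illustrative objectives and measurements (invented values, not recommendations).
objectives = [
    AIObjective("Fairness gap", "Model development", 0.05, False, "ML lead", date(2025, 6, 30)),
    AIObjective("Staff trained on AI policy (%)", "HR", 95.0, True, "HR lead", date(2025, 3, 31)),
]
measurements = {"Fairness gap": 0.03, "Staff trained on AI policy (%)": 88.0}

for line in status_report(objectives, measurements, date(2025, 4, 15)):
    print(line)
# Fairness gap [Model development]: MET
# Staff trained on AI policy (%) [HR]: NOT MET (review overdue)
```

Keeping objectives as structured data rather than prose makes it easier to report progress into management review; a spreadsheet or the organisation's existing tooling could serve the same purpose.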

Demonstrating Commitment to Continual Improvement

Your AI policy should embody a commitment to continual improvement, showcasing a proactive approach to enhancing AI systems’ effectiveness and ethical standards (Requirement 10.1). This involves regular reviews and updates to the policy, ensuring it remains relevant and effective in managing AI risks and harnessing AI opportunities. The organisation shall continually improve the suitability, adequacy, and effectiveness of the AI management system (Requirement 10.1).

Ensuring Compliance with Applicable Requirements

To ensure compliance, your AI policy must be informed by relevant laws, standards, and industry best practices (A.2.2). It should provide clear directives on adhering to these requirements, establishing accountability and mechanisms for regular compliance checks. The organisation shall document a policy for the development or use of AI systems (A.2.2). The AI policy should be informed by business strategy, organisational values and culture, and the level of risk the organisation is willing to pursue or retain (B.2.2). The misuse or disclosure of personal and sensitive data can have harmful effects on data subjects, and the AI policy should address privacy considerations (C.2.7). The AI management system should be integrated with other relevant management systems, such as ISO/IEC 27001 for information security, to ensure a cohesive approach to compliance and risk management (D.2).

Utilising platforms like ISMS.online can streamline this process, offering tools that align with ISO 42001’s Annex A Controls, aiding in the effective management and continuous improvement of your AI systems.

Get an 81% headstart

We've done the hard work for you, giving you an 81% Headstart from the moment you log on.

All you have to do is fill in the blanks.

Establishing Control Objectives in AI Policy

Control objectives form the backbone of an effective AI policy, ensuring that the management system is capable of achieving its intended outcomes. Like the AI objectives required by Requirement 6.2, these objectives must be clearly defined, measurable where practicable, and aligned with the strategic goals of your organisation. They serve as benchmarks for AI system performance and compliance, guiding the development and operation of AI systems within ethical and legal boundaries.

Documentation of AI Policy

A.2.2 requires the organisation to document a policy for the development or use of AI systems. This documented policy, together with the control objectives and the controls chosen to achieve them, acts as a reference point for all AI-related activities and decisions, ensuring consistency and accountability across the organisation.

Alignment with Organisational Policies

Integrating the AI policy with other organisational policies, supported by A.2.3, is not just a matter of compliance but also of strategic coherence. It ensures that AI initiatives are in lockstep with the organisation’s values, risk appetite, and regulatory obligations, fostering a unified approach to governance and risk management.

Ensuring Policy Effectiveness and Relevance

To maintain the effectiveness and relevance of the AI policy, a process of regular review and updating is essential: A.2.4 calls for the policy to be reviewed at planned intervals, and additionally as needed, to ensure its continuing suitability, adequacy, and effectiveness. This process must consider the dynamic nature of AI technologies and the evolving legal and ethical landscapes, so that the AI policy remains a living document, responsive to new challenges and opportunities in the field of AI.

Implementation Guidance for AI Policies

Factors Informing AI Policy

When crafting an AI policy, it’s imperative to consider several pivotal factors, as recommended in B.2.2:

- Business Strategy: Aligning the AI policy with the organisation’s strategic objectives is crucial for leveraging AI to bolster overall success, while keeping the policy appropriate to the organisation’s purpose (Requirement 5.2).

- Organisational Values and Culture: The AI policy must reflect the organisation’s core values and culture, as well as the amount of risk it is willing to pursue or retain, ensuring AI practices are in harmony with these foundational principles.

- Risk Level of AI Systems: Tailoring the policy to address the specific risks posed by the AI systems in use, considering their complexity and impact, is essential.

Importance of Topic-Specific Aspects

Incorporating topic-specific aspects, such as AI resources and assets, is essential for a comprehensive AI policy. These aspects ensure that the policy:

- Addresses the resources and assets that AI systems depend on, as outlined in A.4.

- Provides clear guidelines for AI system impact assessments, in line with A.5.2.

- Guides the development and operation of AI systems across their life cycle (A.6) in a manner that is consistent with the organisation’s risk profile and ethical standards, such as fairness (C.2.5) and transparency and explainability (C.2.11).

Ensuring Comprehensive Coverage

To ensure your AI policy is comprehensive, it should:

- Be informed by a thorough understanding of AI systems and their implications, as recommended in B.2.2.

- Include processes for regular updates to keep pace with technological advancements and regulatory changes, as called for in B.2.4.

- Be communicated effectively to all stakeholders to ensure understanding and compliance (Requirement 7.4), and be integrated with the management practices of other relevant domains and sectors (D.2).

Adhering to the guidance provided in Annex B, organisations can develop AI policies that are not only compliant with ISO 42001 but also facilitate responsible and effective AI management.


Embedding Principles and Commitments in AI Policy

Guiding Principles for AI-Related Activities

Underpinning your AI policy with core principles is crucial, as these serve as the foundation for all AI-related activities within your organisation. B.2.2 recommends that the AI policy contain principles that guide all of the organisation’s AI-related activities; commitments to ethical use, fairness, transparency, and accountability are typical examples. Such principles help ensure AI systems are developed and used in a manner that respects human rights and adheres to high ethical standards, in line with Requirement 5.2, which calls for an AI policy that is appropriate to the organisation’s purpose.

Addressing Deviations and Exceptions

Your AI policy should establish clear processes for handling deviations from, and exceptions to, the established principles, including mechanisms for reporting and managing incidents where AI systems may operate outside of expected parameters or ethical boundaries. This aligns with B.2.2, which recommends processes for handling deviations and exceptions to policy, with Requirement 6.1.3 for AI risk treatment, and with A.3.3 for reporting concerns, ensuring that incidents are managed effectively and in accordance with the AI management system’s established protocols.

Commitments to Responsible AI Development

Commitments to responsible AI development are integral to your AI policy. These commitments should reflect a dedication to continuous improvement and learning in AI system development, adherence to applicable laws and regulations, and engagement with stakeholders to understand and address societal impacts of AI. This approach is in line with Requirement 6.2 for establishing AI objectives and A.5 for assessing impacts of AI systems, ensuring that privacy (C.2.7), security (C.2.10), and transparency and explainability (C.2.11) are considered throughout the AI system’s lifecycle.

Integration of Principles with ISMS.online

ISMS.online facilitates the integration of these principles into your AI management system by providing a structured platform for documenting your AI policy and related commitments, tracking compliance with the policy’s principles, and managing deviations so that corrective actions are taken when necessary. This helps ensure that your AI policy meets Requirement 7.5 for documented information, is actionable and aligned with your organisation’s commitment to responsible AI, and can be integrated with other management system standards, as described in D.2.

Effective Communication and Availability of AI Policy

Communicating the AI Policy Internally

To foster an organisation-wide understanding of, and commitment to, responsible AI practices, it is pivotal to communicate the AI policy effectively. This involves formal channels to ensure every member comprehends their role in upholding the policy, potentially through training sessions, internal memos, or inclusion in the employee handbook, as per Requirement 5.2 (which requires the policy to be communicated within the organisation) and Requirement 7.4. Regular reviews of the AI policy are essential to maintain its relevance and effectiveness, aligning with B.2.4.

Training and Competence

Training programmes are crucial in ensuring personnel are not only aware of the AI policy (Requirement 7.3) but also competent in its implementation (Requirement 7.2). Such programmes should be designed to address the unique challenges and opportunities presented by AI technologies, reflecting the organisational objectives and risk sources related to AI as outlined in Annex C.

Making the AI Policy Available to Interested Parties

For transparency and trust, the AI policy should be available to relevant interested parties, as appropriate, which may involve publishing it on the organisation’s website or providing it on request to regulators, customers, or partners, in accordance with Requirement 5.2 and A.8.5. This ensures that the AI policy is communicated externally as well as internally, fostering an environment of openness and accountability.

External Communication Mechanisms

The organisation should define specific mechanisms for external communication of the AI policy, providing avenues for feedback, inquiries, and engagement with external stakeholders, as suggested by A.8.5. These could include dedicated communication channels or interactive platforms that facilitate stakeholder participation and dialogue.

The Crucial Role of Top Management

Top management must champion the AI policy, underscoring its importance through clear communication and endorsement, as per Requirement 5.1. Their leadership is essential in embedding the policy’s principles into the organisational culture and practices, establishing accountability within the organisation as highlighted in B.3.1.

Streamlining Policy Communication with ISMS.online

Organisations can leverage platforms like ISMS.online to streamline the communication process. The platform offers tools to distribute the AI policy efficiently across departments, track acknowledgement by employees, and inform stakeholders of revisions in a timely manner, ensuring the policy is integrated into daily operations and decision-making across the organisation, as supported by B.2.2 and by the control of documented information in Requirement 7.5.
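Acknowledgement tracking of this kind reduces to a simple rule: a person is outstanding unless they have acknowledged the current version of the policy. The minimal Python sketch below illustrates that rule under stated assumptions; it does not represent how ISMS.online implements the feature, and the `PolicyDistribution` class, its methods, and the staff IDs are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PolicyDistribution:
    """Hypothetical tracker of which AI policy version each employee has acknowledged."""
    current_version: str
    # Maps employee ID -> the policy version that employee last acknowledged.
    acknowledgements: dict = field(default_factory=dict)

    def acknowledge(self, employee_id: str, version: str) -> None:
        self.acknowledgements[employee_id] = version

    def publish(self, new_version: str) -> None:
        # Publishing a revision makes every acknowledgement of an older version stale.
        self.current_version = new_version

    def outstanding(self, staff: list) -> list:
        """Return the staff (in input order) who have not acknowledged the current version."""
        return [e for e in staff if self.acknowledgements.get(e) != self.current_version]


staff = ["alice", "bob", "carol"]
dist = PolicyDistribution("1.0")
dist.acknowledge("alice", "1.0")
dist.acknowledge("bob", "1.0")
print(dist.outstanding(staff))  # ['carol']

dist.publish("1.1")
dist.acknowledge("alice", "1.1")
print(dist.outstanding(staff))  # ['bob', 'carol']
```

Storing the acknowledged version, rather than a simple yes/no flag, is what allows each revision to reopen the acknowledgement cycle automatically.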

Integration with Other Management Systems

The AI policy should be integrated with other management systems within the organisation, demonstrating how the AI policy complements the organisation’s information security, privacy, and quality management systems, as suggested by D.2. ISMS.online’s modular architecture and Mapping & Linking features enable seamless integration of the AI management system with other domain-specific or sector-specific management systems, ensuring a cohesive approach to AI governance.

Management Review and Continual Improvement

Top management’s review of the AI management system, including the AI policy, contributes to its continual improvement by considering information on performance, such as progress against AI objectives, as per Requirement 9.3. This process is critical for ensuring that the AI policy remains dynamic, responsive to changes in the AI landscape, and integrated into the organisation’s broader risk management and governance practices.

Manage all your compliance in one place

ISMS.online supports over 100 standards and regulations, giving you a single platform for all your compliance needs.

Further Reading

Integrating AI Policy with Other Management Systems

Integrating an AI policy with established management systems like ISO/IEC 27001 for information security or ISO 9001 for quality management can enhance the robustness of organisational governance. This integration ensures that AI practices are consistent with other critical operational areas, providing a unified approach to risk management and process optimisation.

Benefits of an Integrated Management System Approach

An integrated management system approach offers several benefits:

- Streamlined Processes: It reduces redundancy and increases efficiency by harmonising procedures and standards across different management areas, as outlined in Requirement 4.4.

- Consistent Objectives: It aligns the objectives of AI systems with broader organisational goals, ensuring a cohesive strategy, in line with Requirement 6.2.

- Enhanced Compliance: It simplifies compliance with multiple standards, providing a clear, comprehensive governance structure, supported by Requirement 9.3.

Guidance from Annex D.2 for Policy Integration

Annex D.2 of ISO 42001 provides guidance on integrating the AI management system with other management system standards. It emphasises the importance of consistency in controls and procedures, ensuring that AI management complements other areas such as privacy, quality, and security, which also supports the alignment of the AI policy with other organisational policies (A.2.3).

Support from ISMS.online for Cross-System Integration

ISMS.online supports the integration of AI policy across systems by offering:

- Centralised Documentation: A single repository for all policy documents, facilitating easy access and management, in line with Requirement 7.5.

- Control Mapping: Tools to map AI policy controls to those of other standards, ensuring comprehensive coverage, in support of the integration described in D.2.

- Collaborative Workspaces: Platforms for cross-departmental collaboration, enabling the harmonisation of the AI policy with other organisational policies, in accordance with A.2.3 and B.2.3.

By utilising ISMS.online, organisations can ensure that their AI policy is not only aligned with ISO 42001 but also integrated with other management system standards, fostering a holistic approach to governance and compliance, as suggested by Annex D.

Addressing Global Interoperability and Compliance

Global interoperability is essential for AI policies, ensuring AI systems can operate across different regions and comply with various international standards. ISO 42001 provides an internationally recognised framework for AI management systems that is applicable to any organisation, regardless of size, type, or nature (Requirement 1).

Facilitating Compliance with International Standards

ISO 42001’s approach to AI policy includes provisions for ethical use (A.2.2), privacy and data protection (C.2.7), and transparency (A.8.2), which are common tenets of international regulations. This alignment facilitates compliance with international standards, reducing complexity for organisations operating globally.

- Understanding the organisation and its context (Requirement 4.1) is crucial, as it involves considering external and internal issues relevant to the organisation’s purpose and its AI systems, including the international regulatory environment.

- Understanding the needs and expectations of interested parties (Requirement 4.2) reflects the need to align AI policies with the requirements of international standards, which form part of interested parties’ expectations.

- Determining the risks and opportunities that need to be addressed (Requirement 6.1) helps ensure the AI management system can achieve its intended outcomes, including compliance with international standards.

- Data protection also depends on controls over the acquisition of data (A.7.3).

- Ethical use, data protection, and transparency should be reflected in an AI policy that is informed by business strategy and legal requirements (B.2.2).

Challenges in Achieving Global Interoperability

Organisations often encounter challenges such as:

- Diverse regulatory environments across countries (C.3.1).

- Varied ethical standards and cultural expectations.

- Technological compatibility issues.

Leveraging ISMS.online for Global Compliance

ISMS.online offers tools and features that support organisations in meeting ISO 42001 requirements, including:

- Centralised Control Management: Aligning AI policies with the Annex A controls of ISO 42001 for consistent application across jurisdictions (Requirement 6.1.3).

- Documented Information: Maintaining records that demonstrate compliance with international standards (Requirement 7.5).

- Risk Assessment and Treatment: Addressing global risks and ensuring AI systems are resilient and compliant in different markets (Requirements 6.1.2 and 6.1.3).

By utilising ISMS.online, organisations can navigate the complexities of global AI regulations, ensuring their AI policies are robust, compliant, and capable of supporting international operations.


Regular Review and Update of AI Policy

Necessity of Regular AI Policy Review

Ensuring the AI policy remains effective and relevant requires regular reviews, particularly in the rapidly evolving landscape of AI technologies and regulatory changes. These reviews are crucial for assessing the policy’s efficacy, pinpointing improvement areas, and updating the policy to reflect new insights or external changes, as called for in A.2.4.

Frequency of AI Policy Updates

While ISO 42001 does not specify a timeline for policy updates, it is generally advised to review the AI policy annually or when significant changes in AI technology, business objectives, or regulatory requirements occur, in line with the guidance provided by Requirement 9.3.

Triggers for AI Policy Review

The AI policy should be reviewed in response to triggers such as:

- Technological Advances: The introduction of new AI technologies or methodologies, which can affect the organisation’s ability to achieve the intended outcomes of its AI management system as outlined in Requirement 4.1.

- Regulatory Changes: Updates in AI-related laws or standards, which may necessitate changes to the AI management system, including the AI policy; such changes should be carried out in a planned manner, as per Requirement 6.3.

- Operational Shifts: Changes in organisational strategy or the AI systems’ operational context, which can increase the complexity of the environment as described in C.3.1 and impact transparency and explainability requirements as noted in C.3.2.
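The combination of a planned review interval and event-driven triggers can be expressed as a small check. The Python sketch below assumes a 365-day interval, reflecting the common annual practice mentioned above rather than anything ISO 42001 specifies; the trigger labels and the `review_due` function are hypothetical.

```python
from datetime import date, timedelta

# Assumption: a 365-day planned interval; ISO 42001 does not fix a timeline.
REVIEW_INTERVAL = timedelta(days=365)

# Hypothetical labels for the trigger categories listed above.
TRIGGERS = {"technological_advance", "regulatory_change", "operational_shift"}


def review_due(last_review: date, today: date, events: list) -> tuple:
    """Return (due, reasons).

    A review is due if the planned interval has elapsed since the last review,
    or if any recognised trigger event has occurred after the last review.
    """
    reasons = []
    if today - last_review >= REVIEW_INTERVAL:
        reasons.append("planned interval elapsed")
    for kind, when in events:
        # Events on or before the last review were already taken into account.
        if kind in TRIGGERS and when > last_review:
            reasons.append(f"{kind} on {when.isoformat()}")
    return bool(reasons), reasons


last = date(2024, 9, 1)
events = [
    ("regulatory_change", date(2025, 2, 2)),
    ("operational_shift", date(2024, 6, 1)),  # predates the last review, so ignored
]
print(review_due(last, date(2025, 3, 1), events))
# (True, ['regulatory_change on 2025-02-02'])
print(review_due(last, date(2025, 1, 1), []))
# (False, [])
```

Returning the reasons, not just a yes/no answer, gives reviewers a ready-made agenda and a record of why each review took place.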

Streamlining the Review Process with ISMS.online

ISMS.online can significantly streamline the review and update process by providing:

- Centralised Documentation: A single repository for all policy documents, simplifying the review and update process in accordance with Requirement 7.5.

- Automated Reminders: Notifications for timely AI policy reviews, ensuring compliance with A.2.4.

- Version Control: Tracking of all changes to maintain the most current version of the policy, supporting the control of documented information required by Requirement 7.5.

Utilising ISMS.online helps maintain a dynamic and responsive AI policy that meets ISO 42001 requirements and supports responsible AI management, while allowing the AI management system to be integrated with other management systems and adapted to different domains or sectors, as described in Annex D.

Preparing for AI Policy Audits and Certifications

Required Documentation for AI Policy Audits

Organisations embarking on AI policy audits must compile a robust set of documented information, as required by Requirement 7.5 of ISO 42001. This set includes the AI policy itself, established as per A.2.2; records of AI risk assessments (Requirement 6.1.2) and AI system impact assessments (A.5.3); evidence of compliance with legal and regulatory requirements; and thorough documentation of AI system processes and outputs, including evidence of data quality as outlined in B.7.4.

Strategies for ISO 42001 Certification Audits

Organisations preparing for ISO 42001 certification audits should conduct internal audits in accordance with Requirement 9.2 to identify and rectify non-conformities. It is crucial to engage in regular reviews and updates of the AI policy, as recommended by B.2.4, and to ensure all personnel are trained and aware of their roles in compliance with the AI policy, thereby fulfilling the competence requirements of Requirement 7.2.

Avoiding Common Pitfalls in AI Policy Audits

To avoid common pitfalls in AI policy audits, organisations should maintain clear and accessible documentation, which is a key aspect of Requirement 7.5. Establishing a culture of continuous improvement addresses the essence of Requirement 10.1, and ensuring transparent communication channels for reporting and addressing AI-related issues aligns with Requirement 7.4 and the transparency and explainability principles of C.2.11.

Audit Preparation and Management with ISMS.online

ISMS.online facilitates audit preparation and management by offering a centralised platform for managing all required documentation, in line with Requirement 7.5. The platform provides tools for tracking compliance and identifying gaps in the AI management system, which is essential for the internal audit process as per Requirement 9.2. Features that facilitate internal and external audits, including scheduling, action tracking, and reporting, help organisations streamline their audit preparation process, ensuring that they are well-equipped to achieve and maintain ISO 42001 certification. The integration of the AI management system with other management systems, as suggested in D.2, and ensuring AI systems are used according to their intended purposes, as per B.9.4, are also supported by ISMS.online.

Key Takeaways for Compliance Officers on AI Policy

Benefits of a Well-Crafted AI Policy

- A robust AI policy, when aligned with Requirement 5.2, serves as a strategic asset, enhancing the organisation’s reputation and trustworthiness.

- It provides a clear roadmap for ethical AI development, use, and management, safeguarding against risks and aligning with global standards, as outlined in A.5.3 and A.9.3.

Imperative of Ongoing Policy Development and Adaptation

- The AI landscape is dynamic, with continuous advancements and changing regulations. An AI policy must, therefore, be adaptable and subject to regular review to remain relevant and effective, in accordance with Requirement 4.1 and Requirement 6.3.

- Ongoing development ensures that the AI policy evolves in tandem with new technologies, methodologies, and regulatory changes, maintaining the organisation's compliance and competitive edge, as emphasised by C.3.4 and C.3.7.

For expert guidance on developing and maintaining an AI policy that meets the rigorous standards of ISO 42001, organisations are encouraged to reach out to ISMS.online. Our comprehensive suite of tools and resources is designed to streamline the process of AI policy management, ensuring that your organisation remains at the forefront of responsible AI governance, in line with D.2.
